17.10.2025

MCML at ICCV 2025: 19 Accepted Papers (16 Main Track and 3 Workshop)

IEEE/CVF International Conference on Computer Vision (ICCV 2025). Honolulu, Hawaii, 19.10.2025–23.10.2025

We are happy to announce that MCML researchers have contributed a total of 19 papers to ICCV 2025: 16 main track papers and 3 workshop papers. Congratulations to our researchers!

Main Track (16 papers)

SUB: Benchmarking CBM Generalization via Synthetic Attribute Substitutions.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv URL

Abstract

Concept Bottleneck Models (CBMs) and other concept-based interpretable models show great promise for making AI applications more transparent, which is essential in fields like medicine. Despite their success, we demonstrate that CBMs struggle to reliably identify the correct concepts under distribution shifts. To assess the robustness of CBMs to concept variations, we introduce SUB: a fine-grained image and concept benchmark containing 38,400 synthetic images based on the CUB dataset. To create SUB, we select a CUB subset of 33 bird classes and 45 concepts to generate images which substitute a specific concept, such as wing color or belly pattern. We introduce a novel Tied Diffusion Guidance (TDG) method to precisely control generated images, where noise sharing for two parallel denoising processes ensures that both the correct bird class and the correct attribute are generated. This novel benchmark enables rigorous evaluation of CBMs and similar interpretable models, contributing to the development of more robust methods.

MCML Authors

Forecasting Continuous Non-Conservative Dynamical Systems in SO(3).

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. Oral Presentation. arXiv

Abstract

tbd

MCML Authors

What If: Understanding Motion Through Sparse Interactions.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published.

Abstract

Understanding the dynamics of a physical scene involves reasoning about the diverse ways it can potentially change, especially as a result of local interactions. We present the Flow Poke Transformer (FPT), a novel framework for directly predicting the distribution of local motion, conditioned on sparse interactions termed ‘pokes’. Unlike traditional methods that typically only enable dense sampling of a single realization of scene dynamics, FPT provides an interpretable, directly accessible representation of multi-modal scene motion, its dependency on physical interactions and the inherent uncertainties of scene dynamics. We also evaluate our model on several downstream tasks to enable comparisons with prior methods and highlight the flexibility of our approach. On dense face motion generation, our generic pre-trained model surpasses specialized baselines. FPT can be fine-tuned on strongly out-of-distribution tasks such as synthetic datasets to enable significant improvements over in-domain methods in articulated object motion estimation. Additionally, predicting explicit motion distributions directly enables our method to achieve competitive performance on tasks like moving part segmentation from pokes, which further demonstrates the versatility of FPT.

MCML Authors

Back on Track: Bundle Adjustment for Dynamic Scene Reconstruction.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Traditional SLAM systems, which rely on bundle adjustment, struggle with highly dynamic scenes commonly found in casual videos. Such videos entangle the motion of dynamic elements, undermining the assumption of static environments required by traditional systems. Existing techniques either filter out dynamic elements or model their motion independently. However, the former often results in incomplete reconstructions, whereas the latter can lead to inconsistent motion estimates. Taking a novel approach, this work leverages a 3D point tracker to separate the camera-induced motion from the observed motion of dynamic objects. By considering only the camera-induced component, bundle adjustment can operate reliably on all scene elements as a result. We further ensure depth consistency across video frames with lightweight post-processing based on scale maps. Our framework combines the core of traditional SLAM – bundle adjustment – with a robust learning-based 3D tracker front-end. Integrating motion decomposition, bundle adjustment and depth refinement, our unified framework, BA-Track, accurately tracks the camera motion and produces temporally coherent and scale-consistent dense reconstructions, accommodating both static and dynamic elements. Our experiments on challenging datasets reveal significant improvements in camera pose estimation and 3D reconstruction accuracy.

MCML Authors

RayPose: Ray Bundling Diffusion for Template Views in Unseen 6D Object Pose Estimation.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. GitHub

Abstract

Typical template-based object pose pipelines estimate the pose by retrieving the closest matching template and aligning it with the observed image. However, failure to retrieve the correct template often leads to inaccurate pose predictions. To address this, we reformulate template-based object pose estimation as a ray alignment problem, where the viewing directions from multiple posed template images are learned to align with a non-posed query image. Inspired by recent progress in diffusion-based camera pose estimation, we embed this formulation into a diffusion transformer architecture that aligns a query image with a set of posed templates. We reparameterize object rotation using object-centered camera rays and model object translation by extending scale-invariant translation estimation to dense translation offsets. Our model leverages geometric priors from the templates to guide accurate query pose inference. A coarse-to-fine training strategy based on narrowed template sampling improves performance without modifying the network architecture. Extensive experiments across multiple benchmark datasets show competitive results of our method compared to state-of-the-art approaches in unseen object pose estimation.

MCML Authors

Feed-Forward SceneDINO for Unsupervised Semantic Scene Completion.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv GitHub

Abstract

Semantic scene completion (SSC) aims to infer both the 3D geometry and semantics of a scene from single images. In contrast to prior work on SSC that heavily relies on expensive ground-truth annotations, we approach SSC in an unsupervised setting. Our novel method, SceneDINO, adapts techniques from self-supervised representation learning and 2D unsupervised scene understanding to SSC. Our training exclusively utilizes multi-view consistency self-supervision without any form of semantic or geometric ground truth. Given a single input image, SceneDINO infers the 3D geometry and expressive 3D DINO features in a feed-forward manner. Through a novel 3D feature distillation approach, we obtain unsupervised 3D semantics. In both 3D and 2D unsupervised scene understanding, SceneDINO reaches state-of-the-art segmentation accuracy. Linear probing our 3D features matches the segmentation accuracy of a current supervised SSC approach. Additionally, we showcase the domain generalization and multi-view consistency of SceneDINO, taking the first steps towards a strong foundation for single image 3D scene understanding.

MCML Authors

Scalable Ranked Preference Optimization for Text-to-Image Generation.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Direct Preference Optimization (DPO) has emerged as a powerful approach to align text-to-image (T2I) models with human feedback. Unfortunately, successful application of DPO to T2I models requires a huge amount of resources to collect and label large-scale datasets, e.g., millions of generated paired images annotated with human preferences. In addition, these human preference datasets can get outdated quickly as the rapid improvements of T2I models lead to higher quality images. In this work, we investigate a scalable approach for collecting large-scale and fully synthetic datasets for DPO training. Specifically, the preferences for paired images are generated using a pre-trained reward function, eliminating the need for involving humans in the annotation process, greatly improving the dataset collection efficiency. Moreover, we demonstrate that such datasets allow averaging predictions across multiple models and collecting ranked preferences as opposed to pairwise preferences. Furthermore, we introduce RankDPO to enhance DPO-based methods using the ranking feedback. Applying RankDPO on SDXL and SD3-Medium models with our synthetically generated preference dataset ‘Syn-Pic’ improves both prompt-following (on benchmarks like T2I-Compbench, GenEval, and DPG-Bench) and visual quality (through user studies). This pipeline presents a practical and scalable solution to develop better preference datasets to enhance the performance of text-to-image models.
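For readers unfamiliar with DPO, the standard pairwise objective that this abstract builds on can be sketched as follows. This is a minimal illustration of the generic DPO loss for one preferred/rejected pair, not the RankDPO objective from the paper. The function name, the `beta` default, and the assumption that log-likelihoods are available as plain numbers are all hypothetical; how such terms are obtained for a diffusion model is outside this sketch.

```python
import math

def dpo_pairwise_loss(logp_win, logp_lose, ref_logp_win, ref_logp_lose, beta=0.1):
    """Generic pairwise DPO loss for one (preferred, rejected) sample pair.

    All four inputs are log-likelihoods (assumed given): the trained model and
    a frozen reference model, each evaluated on the preferred and rejected sample.
    """
    # How much more the trained model favours the preferred sample
    # than the reference model does.
    margin = (logp_win - ref_logp_win) - (logp_lose - ref_logp_lose)
    # -log(sigmoid(beta * margin)), written as a numerically stable softplus.
    z = -beta * margin
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the model and the reference agree (zero margin), the loss equals log 2; it shrinks as the model ranks the preferred sample higher than the reference does.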

MCML Authors

Learning Interpretable Queries for Explainable Image Classification with Information Pursuit.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Information Pursuit (IP) is an explainable prediction algorithm that greedily selects a sequence of interpretable queries about the data in order of information gain, updating its posterior at each step based on observed query-answer pairs. The standard paradigm uses hand-crafted dictionaries of potential data queries curated by a domain expert or a large language model after a human prompt. However, in practice, hand-crafted dictionaries are limited by the expertise of the curator and the heuristics of prompt engineering. This paper introduces a novel approach: learning a dictionary of interpretable queries directly from the dataset. Our query dictionary learning problem is formulated as an optimization problem by augmenting IP’s variational formulation with learnable dictionary parameters. To formulate learnable and interpretable queries, we leverage the latent space of large vision and language models like CLIP. To solve the optimization problem, we propose a new query dictionary learning algorithm inspired by classical sparse dictionary learning. Our experiments demonstrate that learned dictionaries significantly outperform hand-crafted dictionaries generated with large language models.
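The greedy query selection described at the start of this abstract can be illustrated with a toy example. This is only a sketch of classical Information Pursuit with a fixed, hand-crafted dictionary, using empirical counts over a tiny hypothetical dataset. It does not implement the learned-dictionary method the paper proposes, and all names and data below are assumptions made for illustration.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(samples, q):
    """Empirical mutual information between the answer to query q and the label."""
    gain = entropy([y for _, y in samples])
    for answer in {x[q] for x, _ in samples}:
        subset = [y for x, y in samples if x[q] == answer]
        gain -= len(subset) / len(samples) * entropy(subset)
    return gain

def information_pursuit(samples, answers, max_queries=3):
    """Greedily pick the most informative query, observe its answer, update the posterior."""
    history, remaining, asked = [], list(samples), set()
    for _ in range(max_queries):
        candidates = [q for q in range(len(answers)) if q not in asked]
        # Stop when no queries are left or the posterior is already certain.
        if not candidates or entropy([y for _, y in remaining]) == 0:
            break
        best = max(candidates, key=lambda q: information_gain(remaining, q))
        asked.add(best)
        history.append((best, answers[best]))
        # Conditioning on the observed answer restricts the posterior's support.
        remaining = [(x, y) for x, y in remaining if x[best] == answers[best]]
    return history, Counter(y for _, y in remaining)

# Hypothetical hand-crafted dictionary: (has_wings, has_fur, lives_in_water).
toy_data = [((1, 0, 0), "bird"), ((1, 0, 1), "bird"),
            ((0, 1, 0), "cat"), ((0, 0, 1), "fish")]
history, posterior = information_pursuit(toy_data, answers=(0, 1, 0))
```

On this toy input the first query asked is `has_wings`, since it has the largest information gain, and the returned `history` of query-answer pairs serves as the explanation for the final prediction.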

MCML Authors

TREAD: Token Routing for Efficient Architecture-agnostic Diffusion Training.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Diffusion models have emerged as the mainstream approach for visual generation. However, these models typically suffer from sample inefficiency and high training costs. Consequently, methods for efficient finetuning, inference and personalization were quickly adopted by the community. However, training these models in the first place remains very costly. While several recent approaches - including masking, distillation, and architectural modifications - have been proposed to improve training efficiency, each of these methods comes with a tradeoff: they achieve enhanced performance at the expense of increased computational cost or vice versa. In contrast, this work aims to improve training efficiency as well as generative performance at the same time through routes that act as a transport mechanism for randomly selected tokens from early layers to deeper layers of the model. Our method is not limited to the common transformer-based model - it can also be applied to state-space models and achieves this without architectural modifications or additional parameters. Finally, we show that TREAD reduces computational cost and simultaneously boosts model performance on the standard ImageNet-256 benchmark in class-conditional synthesis. Both of these benefits multiply to a convergence speedup of 14x at 400K training iterations compared to DiT and 37x compared to the best benchmark performance of DiT at 7M training iterations. Furthermore, we achieve a competitive FID of 2.09 in a guided and 3.93 in an unguided setting, which improves upon the DiT, without architectural changes.

MCML Authors

Stochastic Interpolants for Revealing Stylistic Flows across the History of Art.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published.

Abstract

tba

MCML Authors

SCFlow: Implicitly Learning Style and Content Disentanglement with Flow Models.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Explicitly disentangling style and content in vision models remains challenging due to their semantic overlap and the subjectivity of human perception. Existing methods propose separation through generative or discriminative objectives, but they still face the inherent ambiguity of disentangling intertwined concepts. Instead, we ask: Can we bypass explicit disentanglement by learning to merge style and content invertibly, allowing separation to emerge naturally? We propose SCFlow, a flow-matching framework that learns bidirectional mappings between entangled and disentangled representations. Our approach is built upon three key insights: 1) Training solely to merge style and content, a well-defined task, enables invertible disentanglement without explicit supervision; 2) flow matching bridges on arbitrary distributions, avoiding the restrictive Gaussian priors of diffusion models and normalizing flows; and 3) a synthetic dataset of 510,000 samples (51 styles × 10,000 content samples) was curated to simulate disentanglement through systematic style-content pairing. Beyond controllable generation tasks, we demonstrate that SCFlow generalizes to ImageNet-1k and WikiArt in zero-shot settings and achieves competitive performance, highlighting that disentanglement naturally emerges from the invertible merging process.

MCML Authors

How To Make Your Cell Tracker Say 'I dunno!'.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

Cell tracking is a key computational task in live-cell microscopy, but fully automated analysis of high-throughput imaging requires reliable and, thus, uncertainty-aware data analysis tools, as the amount of data recorded within a single experiment exceeds what humans are able to oversee. Here we propose and benchmark various methods to reason about and quantify uncertainty in linear assignment-based cell tracking algorithms. Our methods take inspiration from statistics and machine learning, leveraging two perspectives on the cell tracking problem explored throughout this work: considering it as a Bayesian inference problem and as a classification problem. Our methods admit a framework-like character in that they equip any frame-to-frame tracking method with uncertainty quantification. We demonstrate this by applying them to various existing tracking algorithms, including the recently presented Transformer-based trackers. We demonstrate empirically that our methods yield useful and well-calibrated tracking uncertainties.

MCML Authors

SIC: Similarity-Based Interpretable Image Classification with Neural Networks.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv GitHub

Abstract

The deployment of deep learning models in critical domains necessitates a balance between high accuracy and interpretability. We introduce SIC, an inherently interpretable neural network that provides local and global explanations of its decision-making process. Leveraging the concept of case-based reasoning, SIC extracts class-representative support vectors from training images, ensuring they capture relevant features while suppressing irrelevant ones. Classification decisions are made by calculating and aggregating similarity scores between these support vectors and the input’s latent feature vector. We employ B-Cos transformations, which align model weights with inputs, to yield coherent pixel-level explanations in addition to global explanations of case-based reasoning. We evaluate SIC on three tasks: fine-grained classification on Stanford Dogs and FunnyBirds, multi-label classification on Pascal VOC, and pathology detection on the RSNA dataset. Results indicate that SIC not only achieves competitive accuracy compared to state-of-the-art black-box and inherently interpretable models but also offers insightful explanations verified through practical evaluation on the FunnyBirds benchmark. Our theoretical analysis proves that these explanations fulfill established axioms for explanations. Our findings underscore SIC’s potential for applications where understanding model decisions is as critical as the decisions themselves.

MCML Authors

TrafficLoc: Localizing Traffic Surveillance Cameras in 3D Scenes.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv GitHub

Abstract

Cone Beam Computed Tomography (CBCT) is widely used in medical imaging. However, the limited number and intensity of X-ray projections make reconstruction an ill-posed problem with severe artifacts. NeRF-based methods have achieved great success in this task. However, they suffer from a local-global training mismatch between their two key components: the hash encoder and the neural network. Specifically, in each training step, only a subset of the hash encoder’s parameters is used (local sparse), whereas all parameters in the neural network participate (global dense). Consequently, hash features generated in each step are highly misaligned, as they come from different subsets of the hash encoder. These misalignments from different training steps are then fed into the neural network, causing repeated inconsistent global updates in training, which leads to unstable training, slower convergence, and degraded reconstruction quality. Aiming to alleviate the impact of this local-global optimization mismatch, we introduce a Normalized Hash Encoder, which enhances feature consistency and mitigates the mismatch. Additionally, we propose a Mapping Consistency Initialization (MCI) strategy that initializes the neural network before training by leveraging the global mapping property from a well-trained model. The initialized neural network exhibits improved stability during early training, enabling faster convergence and enhanced reconstruction performance. Our method is simple yet effective, requiring only a few lines of code while substantially improving training efficiency on 128 CT cases collected from 4 different datasets, covering 7 distinct anatomical regions.

MCML Authors

Yan Xia

Dr.

* Former Member

FB-Diff: Fourier Basis-guided Diffusion for Temporal Interpolation of 4D Medical Imaging.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

Abstract

The temporal interpolation task for 4D medical imaging plays a crucial role in the clinical practice of respiratory motion modeling. Following the simplified linear-motion hypothesis, existing approaches adopt optical flow-based models to interpolate intermediate frames. However, realistic respiratory motions should be nonlinear and quasi-periodic with specific frequencies. Motivated by this property, we resolve the temporal interpolation task from the frequency perspective, and propose a Fourier basis-guided Diffusion model, termed FB-Diff. Specifically, due to the regular motion pattern of respiration, physiological motion priors are introduced to describe general characteristics of temporal data distributions. Then a Fourier motion operator is designed to extract Fourier bases by incorporating physiological motion priors and case-specific spectral information in the feature space of a Variational Autoencoder. Well-learned Fourier bases can better simulate respiratory motions with motion patterns of specific frequencies. Conditioned on starting and ending frames, the diffusion model further leverages well-learned Fourier bases via the basis interaction operator, which promotes the temporal interpolation task in a generative manner. Extensive results demonstrate that FB-Diff achieves state-of-the-art (SOTA) perceptual performance with better temporal consistency while maintaining promising reconstruction metrics. Code is available.

MCML Authors

VGGSounder: Audio-Visual Evaluations for Foundation Models.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published.

Abstract

The emergence of audio-visual foundation models underscores the importance of reliably assessing their multi-modal understanding. The classification dataset VGGSound is commonly used as a benchmark for evaluating audio-visual understanding. However, our analysis identifies several critical issues in VGGSound, including incomplete labelling, partially overlapping classes, and misaligned modalities. These flaws lead to distorted evaluations of auditory and visual capabilities. To address these limitations, we introduce VGGSounder, a comprehensively re-annotated, multi-label test set extending VGGSound that is specifically designed to evaluate audio-visual foundation models. VGGSounder features detailed modality annotations, enabling precise analyses of modality-specific performance and revealing previously unnoticed model limitations. VGGSounder offers a robust benchmark supporting the future development of audio-visual foundation models.

MCML Authors

Workshops (3 papers)

The Diashow Paradox: Stronger 3D-Aware Representations Emerge from Image Sets, Not Videos.

SP4V @ICCV 2025 - Structural Priors for Vision Workshop at the IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. URL

Abstract

Image-based vision foundation models (VFMs) have demonstrated surprising 3D geometric awareness, despite no explicit 3D supervision or pre-training on multi-view data. While image-based models are widely adopted across a range of downstream tasks, video-based models have so far remained on the sidelines of this success. In this work, we conduct a comparative study of image and video models on three tasks encapsulating 3D awareness: multi-view consistency, depth and surface normal estimation. To enable a fair and reproducible evaluation of both image and video models, we develop AnyProbe, a unified framework for probing network representations. The results of our study reveal a surprising conclusion, which we refer to as the diashow paradox. Specifically, video-based pre-training does not provide any consistent advantage on downstream tasks involving 3D understanding over image-based pre-training. We formulate two hypotheses to explain our observations, which underscore the need for high-quality video datasets and highlight the inherent complexity of video-based pre-training. AnyProbe will be publicly released to streamline evaluation of image- and video-based VFMs alike in a consistent fashion.

MCML Authors

Motion-Refined DINOSAUR for Unsupervised Multi-Object Discovery.

Workshop @ICCV 2025 - Workshop at the IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv GitHub

Abstract

Unsupervised multi-object discovery (MOD) aims to detect and localize distinct object instances in visual scenes without any form of human supervision. Recent approaches leverage object-centric learning (OCL) and motion cues from video to identify individual objects. However, these approaches use supervision to generate pseudo labels to train the OCL model. We address this limitation with MR-DINOSAUR – Motion-Refined DINOSAUR – a minimalistic unsupervised approach that extends the self-supervised pre-trained OCL model, DINOSAUR, to the task of unsupervised multi-object discovery. We generate high-quality unsupervised pseudo labels by retrieving video frames without camera motion for which we perform motion segmentation of unsupervised optical flow. We refine DINOSAUR’s slot representations using these pseudo labels and train a slot deactivation module to assign slots to foreground and background. Despite its conceptual simplicity, MR-DINOSAUR achieves strong multi-object discovery results on the TRI-PD and KITTI datasets, outperforming the previous state of the art despite being fully unsupervised.

MCML Authors

When and Where do Events Switch in Multi-Event Video Generation?

LongVid-Foundations @ICCV 2025 - 1st Workshop on Long Multi-Scene Video Foundations: Generation, Understanding and Evaluation at the IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available. arXiv

MCML Authors

#research #top-tier-work #akata #cremers #koepke #kutyniok #navab #ommer #ruegamer #seidl #thuerey #wachinger

Related

16.10.2025

SIC: Making AI Image Classification Understandable

SIC by the team of Christian Wachinger at ICCV 2025: Transparent AI for intuitive, reliable, and interpretable medical image classification.

09.10.2025

Rethinking AI in Public Institutions - Balancing Prediction and Capacity

Unai Fischer Abaigar explores how AI can make public decisions fairer, smarter, and more effective.

08.10.2025

MCML-LAMARR Workshop at University of Bonn

MCML and Lamarr researchers met in Bonn to exchange ideas on NLP, LLM finetuning, and AI ethics.

08.10.2025

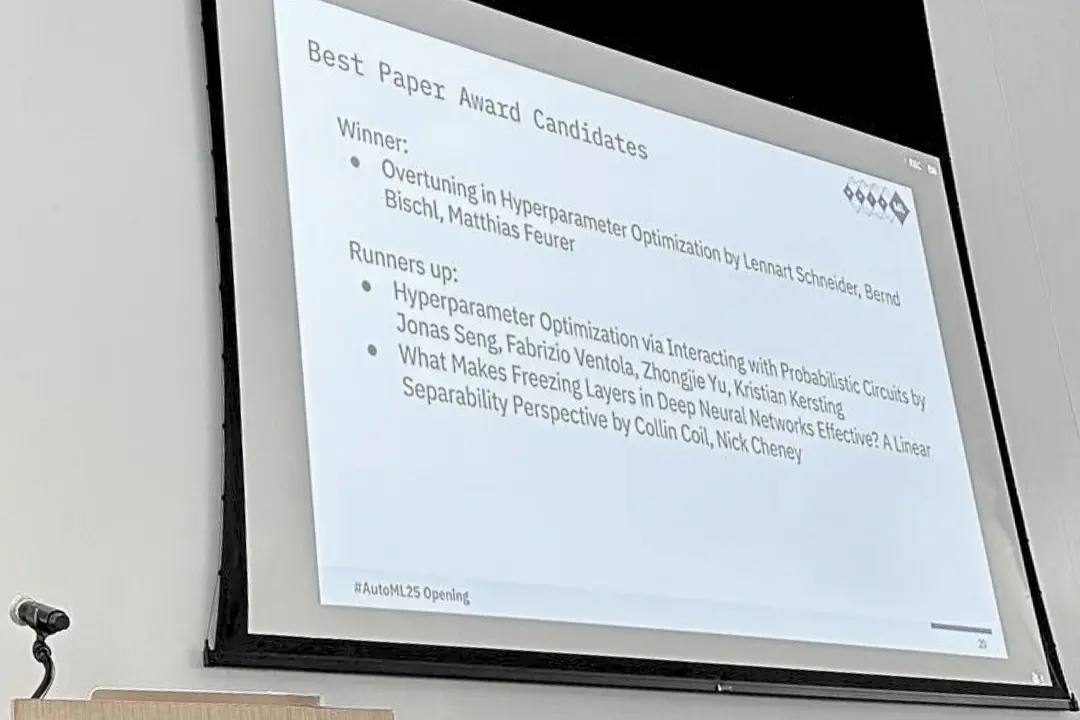

Three MCML Members Win Best Paper Award at AutoML 2025

Former MCML TBF Matthias Feurer and Director Bernd Bischl’s paper on overtuning won Best Paper at AutoML 2025, offering insights for robust HPO.