25.07.2025

MCML at ACL 2025: 37 Accepted Papers (17 Main, 8 Findings, and 12 Workshops)

63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025). Vienna, Austria, 27.07.2025–01.08.2025

We are happy to announce that MCML researchers have contributed a total of 37 papers to ACL 2025: 17 Main, 8 Findings, and 12 Workshop papers. Congrats to our researchers!

Main Track (17 papers)

LLMs instead of Human Judges? A Large Scale Empirical Study across 20 NLP Evaluation Tasks.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

There is an increasing trend towards evaluating NLP models with LLM-generated judgments instead of human judgments. In the absence of a comparison against human data, this raises concerns about the validity of these evaluations; in case they are conducted with proprietary models, this also raises concerns over reproducibility. We provide JUDGE-BENCH, a collection of 20 NLP datasets with human annotations, and comprehensively evaluate 11 current LLMs, covering both open-weight and proprietary models, for their ability to replicate the annotations. Our evaluations show that each LLM exhibits a large variance across datasets in its correlation to human judgments. We conclude that LLMs are not yet ready to systematically replace human judges in NLP.

MCML Authors

LLaVA Steering: Visual Instruction Tuning with 500x Fewer Parameters through Modality Linear Representation-Steering.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Multimodal Large Language Models (MLLMs) have significantly advanced visual tasks by integrating visual representations into large language models (LLMs). The textual modality, inherited from LLMs, equips MLLMs with abilities like instruction following and in-context learning. In contrast, the visual modality enhances performance in downstream tasks by leveraging rich semantic content, spatial information, and grounding capabilities. These intrinsic modalities work synergistically across various visual tasks. Our research initially reveals a persistent imbalance between these modalities, with text often dominating output generation during visual instruction tuning. This imbalance occurs when using both full fine-tuning and parameter-efficient fine-tuning (PEFT) methods. We then found that re-balancing these modalities can significantly reduce the number of trainable parameters required, inspiring a direction for further optimizing visual instruction tuning. We introduce Modality Linear Representation-Steering (MoReS) to achieve the goal. MoReS effectively re-balances the intrinsic modalities throughout the model, where the key idea is to steer visual representations through linear transformations in the visual subspace across each model layer. To validate our solution, we composed LLaVA Steering, a suite of models integrated with the proposed MoReS method. Evaluation results show that the composed LLaVA Steering models require, on average, 500 times fewer trainable parameters than LoRA needs while still achieving comparable performance across three visual benchmarks and eight visual question-answering tasks. Last, we present the LLaVA Steering Factory, an in-house developed platform that enables researchers to quickly customize various MLLMs with component-based architecture for seamlessly integrating state-of-the-art models, and evaluate their intrinsic modality imbalance.

MCML Authors

Probing LLMs for Multilingual Discourse Generalization Through a Unified Label Set.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Discourse understanding is essential for many NLP tasks, yet most existing work remains constrained by framework-dependent discourse representations. This work investigates whether large language models (LLMs) capture discourse knowledge that generalizes across languages and frameworks. We address this question along two dimensions: (1) developing a unified discourse relation label set to facilitate cross-lingual and cross-framework discourse analysis, and (2) probing LLMs to assess whether they encode generalizable discourse abstractions. Using multilingual discourse relation classification as a testbed, we examine a comprehensive set of 23 LLMs of varying sizes and multilingual capabilities. Our results show that LLMs, especially those with multilingual training corpora, can generalize discourse information across languages and frameworks. Further layer-wise analyses reveal that language generalization at the discourse level is most salient in the intermediate layers. Lastly, our error analysis provides an account of challenging relation classes.

MCML Authors

Yang Janet Liu

* Former Member

Collapse of Dense Retrievers: Short, Early, and Literal Biases Outranking Factual Evidence.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Dense retrieval models are commonly used in Information Retrieval (IR) applications, such as Retrieval-Augmented Generation (RAG). Since they often serve as the first step in these systems, their robustness is critical to avoid failures. In this work, by repurposing a relation extraction dataset (e.g., Re-DocRED), we design controlled experiments to quantify the impact of heuristic biases, such as favoring shorter documents, in retrievers like Dragon+ and Contriever. Our findings reveal significant vulnerabilities: retrievers often rely on superficial patterns like over-prioritizing document beginnings, shorter documents, repeated entities, and literal matches. Additionally, they tend to overlook whether the document contains the query’s answer, lacking deep semantic understanding. Notably, when multiple biases combine, models exhibit catastrophic performance degradation, selecting the answer-containing document in less than 3% of cases over a biased document without the answer. Furthermore, we show that these biases have direct consequences for downstream applications like RAG, where retrieval-preferred documents can mislead LLMs, resulting in a 34% performance drop compared to providing no documents at all.

MCML Authors

Multilingual Text-to-Image Generation Magnifies Gender Stereotypes and Prompt Engineering May Not Help You.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Text-to-image generation models have recently achieved astonishing results in image quality, flexibility, and text alignment, and are consequently employed in a fast-growing number of applications. Through improvements in multilingual abilities, a larger community now has access to this technology. However, our results show that multilingual models suffer from significant gender biases just as monolingual models do. Furthermore, the natural expectation that multilingual models will provide similar results across languages does not hold up. Instead, there are important differences between languages. We propose a novel benchmark, MAGBIG, intended to foster research on gender bias in multilingual models. We use MAGBIG to investigate the effect of multilingualism on gender bias in T2I models. To this end, we construct multilingual prompts requesting portraits of people with a certain occupation or trait. Our results show that not only do models exhibit strong gender biases but they also behave differently across languages. Furthermore, we investigate prompt engineering strategies, such as indirect, neutral formulations, to mitigate these biases. Unfortunately, these approaches have limited success and result in worse text-to-image alignment. Consequently, we call for more research into diverse representations across languages in image generators, as well as into steerability to address biased model behavior.

MCML Authors

What's the Difference? Supporting Users in Identifying the Effects of Prompt and Model Changes Through Token Patterns.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Prompt engineering for large language models is challenging, as even small prompt perturbations or model changes can significantly impact the generated output texts. Existing evaluation methods, either automated metrics or human evaluation, have limitations, such as providing limited insights or being labor-intensive. We propose Spotlight, a new approach that combines both automation and human analysis. Based on data mining techniques, we automatically distinguish between random (decoding) variations and systematic differences in language model outputs. This process provides token patterns that describe the systematic differences and guide the user in manually analyzing the effects of their prompt and model changes efficiently. We create three benchmarks to quantitatively test the reliability of token pattern extraction methods and demonstrate that our approach provides new insights into established prompt data. From a human-centric perspective, through demonstration studies and a user study, we show that our token pattern approach helps users understand the systematic differences of language model outputs, and we are able to discover relevant differences caused by prompt and model changes (e.g. related to gender or culture), thus supporting the prompt engineering process and human-centric model behavior research.

MCML Authors

Positional Overload: Positional Debiasing and Context Window Extension for Large Language Models using Set Encoding.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Large Language Models (LLMs) typically track the order of tokens using positional encoding, which causes the following problems: positional bias, where the model is influenced by an ordering within the prompt, and a fixed context window, as models struggle to generalize to positions beyond those encountered during training. To address these limitations, we developed a novel method called set encoding. This method allows multiple pieces of text to be encoded in the same position, thereby eliminating positional bias entirely. Another promising use case for set encoding is to increase the size of the input an LLM can handle. Our experiments demonstrate that set encoding allows an LLM to solve tasks with far more tokens than without set encoding. To our knowledge, set encoding is the first technique to effectively extend an LLM’s context window without requiring any additional training.
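The core idea of placing several pieces of text at the same position can be illustrated with position ids alone. The following is a minimal sketch, not the authors' implementation: the function name `set_position_ids`, the prefix/set/suffix layout, and the choice to let the suffix continue after the longest set element are all assumptions made for illustration. The abstract does not describe how attention between set elements is handled, so that part is left out.

```python
def set_position_ids(prefix_len, set_lens, suffix_len):
    """Assign position ids so that every set element starts at the same position.

    Hypothetical layout (an assumption, not taken from the paper):
    a shared prefix, then several interchangeable text pieces, then a suffix.
    """
    # The prefix is ordinary sequential text: positions 0 .. prefix_len - 1.
    prefix = list(range(prefix_len))

    # Every set element restarts at the first position after the prefix,
    # so no element is "earlier" or "later" than any other.
    elements = [list(range(prefix_len, prefix_len + n)) for n in set_lens]

    # Assumption: the suffix continues after the longest element.
    longest = max(set_lens, default=0)
    start = prefix_len + longest
    suffix = list(range(start, start + suffix_len))
    return prefix, elements, suffix


if __name__ == "__main__":
    prefix, elements, suffix = set_position_ids(2, [3, 1], 2)
    print(prefix, elements, suffix)
```

Because the elements share positions, the highest position id grows with the longest element rather than with the total number of tokens, which is one way to read the abstract's point about handling larger inputs.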

MCML Authors

Multimodal Pragmatic Jailbreak on Text-to-image Models.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL GitHub

Abstract

Diffusion models have recently achieved remarkable advancements in terms of image quality and fidelity to textual prompts. Concurrently, the safety of such generative models has become an area of growing concern. This work introduces a novel type of jailbreak, which triggers text-to-image (T2I) models to generate an image with visual text, where the image and the text, although considered to be safe in isolation, combine to form unsafe content. To systematically explore this phenomenon, we propose a dataset to evaluate current diffusion-based T2I models under such a jailbreak. We benchmark nine representative T2I models, including two closed-source commercial models. Experimental results reveal a concerning tendency to produce unsafe content: all tested models suffer from this type of jailbreak, with rates of unsafe generation ranging from around 10% to 70%, and DALLE 3 showing nearly the highest rate. In real-world scenarios, various filters, such as keyword blocklists, customized prompt filters, and NSFW image filters, are commonly employed to mitigate these risks. We evaluate the effectiveness of such filters against our jailbreak and find that, while these filters may be effective for single-modality detection, they fail to work against our jailbreak. We also investigate the underlying reason for such jailbreaks from the perspective of text rendering capability and training data. Our work provides a foundation for further development towards more secure and reliable T2I models.

MCML Authors

LangSAMP: Language-Script Aware Multilingual Pretraining.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL GitHub

Abstract

Recent multilingual pretrained language models (mPLMs) often avoid using language embeddings – learnable vectors assigned to different languages. These embeddings are discarded for two main reasons: (1) mPLMs are expected to have a single, unified parameter set across all languages, and (2) they need to function seamlessly as universal text encoders without requiring language IDs as input. However, this removal increases the burden on token embeddings to encode all language-specific information, which may hinder the model’s ability to produce more language-neutral representations. To address this challenge, we propose Language-Script Aware Multilingual Pretraining (LangSAMP), a method that incorporates both language and script embeddings to enhance representation learning while maintaining a simple architecture. Specifically, we integrate these embeddings into the output of the transformer blocks before passing the final representations to the language modeling head for prediction. We apply LangSAMP to the continual pretraining of XLM-R on a highly multilingual corpus covering more than 500 languages. The resulting model consistently outperforms the baseline. Extensive analysis further shows that language/script embeddings encode language/script-specific information, which improves the selection of source languages for crosslingual transfer.

MCML Authors

FocalPO: Enhancing Preference Optimizing by Focusing on Correct Preference Rankings.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Efficient preference optimization algorithms such as Direct Preference Optimization (DPO) have become a popular approach in aligning large language models (LLMs) with human preferences. These algorithms implicitly treat the LLM as a reward model, and focus on training it to correct misranked preference pairs. However, recent work (Chen et al., 2024) empirically finds that DPO training rarely improves these misranked preference pairs, despite its gradient emphasizing these cases. We introduce FocalPO, a DPO variant that instead down-weighs misranked preference pairs and prioritizes enhancing the model’s understanding of pairs that it can already rank correctly. Inspired by Focal Loss used in vision tasks, FocalPO achieves this by adding a modulating factor to dynamically scale the DPO loss. Our experiments demonstrate that FocalPO surpasses DPO and its variants on popular benchmarks like Alpaca Eval 2.0 using Mistral-Base-7B and Llama-3-Instruct-8B. Additionally, we empirically reveal how FocalPO affects training on correct and incorrect sample groups, further underscoring its effectiveness.
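To make the idea of a modulating factor concrete, here is a minimal sketch on scalar log-probabilities. The DPO part follows the standard formulation (negative log-sigmoid of the scaled reward margin). The exact modulating factor used by FocalPO is not given in the abstract; the form `p ** gamma`, the parameter name `gamma`, and all function names below are assumptions chosen only so that pairs the model misranks (small `p`) receive a smaller weight.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def preference_margin(policy_chosen, policy_rejected,
                      ref_chosen, ref_rejected, beta=0.1):
    """Implicit reward margin of DPO from sequence log-probabilities."""
    return beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))


def dpo_loss(margin):
    """Standard DPO loss for one preference pair."""
    return -log_sigmoid(margin)


def focal_weighted_loss(margin, gamma=1.0):
    """DPO loss scaled by a modulating factor.

    Assumption: the factor is p ** gamma, where p is the probability the
    model assigns to the correct ranking. Misranked pairs (p < 0.5) are
    down-weighted; gamma = 0 recovers plain DPO.
    """
    p = math.exp(log_sigmoid(margin))
    return (p ** gamma) * dpo_loss(margin)


if __name__ == "__main__":
    for m in (-2.0, 0.0, 2.0):
        print(m, dpo_loss(m), focal_weighted_loss(m, gamma=1.0))
```

In this sketch the weight applied to a misranked pair (negative margin) is always smaller than the weight applied to a correctly ranked pair, which mirrors the down-weighting described in the abstract without claiming to reproduce the paper's exact objective.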

MCML Authors

Pragmatics in the Era of Large Language Models: A Survey on Datasets, Evaluation, Opportunities and Challenges.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Understanding pragmatics, the use of language in context, is crucial for developing NLP systems capable of interpreting nuanced language use. Despite recent advances in language technologies, including large language models, evaluating their ability to handle pragmatic phenomena such as implicatures and references remains challenging. To advance pragmatic abilities in models, it is essential to understand current evaluation trends and identify existing limitations. In this survey, we provide a comprehensive review of resources designed for evaluating pragmatic capabilities in NLP, categorizing datasets by the pragmatics phenomena they address. We analyze task designs, data collection methods, evaluation approaches, and their relevance to real-world applications. By examining these resources in the context of modern language models, we highlight emerging trends, challenges, and gaps in existing benchmarks. Our survey aims to clarify the landscape of pragmatic evaluation and guide the development of more comprehensive and targeted benchmarks, ultimately contributing to more nuanced and context-aware NLP models.

MCML Authors

Yang Janet Liu

* Former Member

Algorithmic Fidelity of Large Language Models in Generating Synthetic German Public Opinions: A Case Study.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

In recent research, large language models (LLMs) have been increasingly used to investigate public opinions. This study investigates the algorithmic fidelity of LLMs, i.e., the ability to replicate the socio-cultural context and nuanced opinions of human participants. Using open-ended survey data from the German Longitudinal Election Studies (GLES), we prompt different LLMs to generate synthetic public opinions reflective of German subpopulations by incorporating demographic features into the persona prompts. Our results show that Llama performs better than other LLMs at representing subpopulations, particularly when there is lower opinion diversity within those groups. Our findings further reveal that the LLM performs better for supporters of left-leaning parties like The Greens and The Left compared to other parties, and matches the least with the right-party AfD. Additionally, the inclusion or exclusion of specific variables in the prompts can significantly impact the models’ predictions. These findings underscore the importance of aligning LLMs to more effectively model diverse public opinions while minimizing political biases and enhancing robustness in representativeness.

MCML Authors

Circuit Compositions: Exploring Modular Structures in Transformer-Based Language Models.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

A fundamental question in interpretability research is to what extent neural networks, particularly language models, implement reusable functions via subnetworks that can be composed to perform more complex tasks. Recent developments in mechanistic interpretability have made progress in identifying subnetworks, often referred to as circuits, which represent the minimal computational subgraph responsible for a model’s behavior on specific tasks. However, most studies focus on identifying circuits for individual tasks without investigating how functionally similar circuits relate to each other. To address this gap, we examine the modularity of neural networks by analyzing circuits for highly compositional subtasks within a transformer-based language model. Specifically, given a probabilistic context-free grammar, we identify and compare circuits responsible for ten modular string-edit operations. Our results indicate that functionally similar circuits exhibit both notable node overlap and cross-task faithfulness. Moreover, we demonstrate that the circuits identified can be reused and combined through subnetwork set operations to represent more complex functional capabilities of the model.

MCML Authors

BMIKE-53: Investigating Cross-Lingual Knowledge Editing with In-Context Learning.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL GitHub

Abstract

Large language models (LLMs) possess extensive parametric knowledge, but this knowledge is difficult to update with new information because retraining is very expensive and infeasible for closed-source models. Knowledge editing (KE) has emerged as a viable solution for updating the knowledge of LLMs without compromising their overall performance. On-the-fly KE methods, inspired by in-context learning (ICL), have shown great promise and allow LLMs to be treated as black boxes. In the past, KE was primarily employed in English contexts, whereas the potential for cross-lingual KE in current English-centric LLMs has not been fully explored. To foster more research in this direction, we introduce the BMIKE-53 benchmark for evaluating cross-lingual KE on 53 diverse languages across three KE task types. We also propose a gradient-free KE method called Multilingual In-context Knowledge Editing (MIKE) and evaluate it on BMIKE-53. Our evaluation focuses on cross-lingual knowledge transfer in terms of reliability, generality, locality, and portability, offering valuable insights and a framework for future research in cross-lingual KE.

MCML Authors

Improving Parallel Sentence Mining for Low-Resource and Endangered Languages.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

While parallel sentence mining has been extensively covered for fairly well-resourced languages, pairs involving low-resource languages have received comparatively little attention. To address this gap, we present Belopsem, a benchmark of new datasets for parallel sentence mining on three language pairs where the source side is low-resource and endangered: Occitan-Spanish, Upper Sorbian-German, and Chuvash-Russian. These combinations also reflect varying linguistic similarity within each pair. We compare three language models in an established parallel sentence mining pipeline and apply two types of improvements to one of them, Glot500. We observe better mining quality overall by both applying alignment post-processing with an unsupervised aligner and using a cluster-based isotropy enhancement technique. These findings are crucial for optimising parallel data extraction for low-resource languages in a realistic way.

MCML Authors

Understanding In-Context Machine Translation for Low-Resource Languages: A Case Study on Manchu.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

In-context machine translation (MT) with large language models (LLMs) is a promising approach for low-resource MT, as it can readily take advantage of linguistic resources such as grammar books and dictionaries. Such resources are usually selectively integrated into the prompt so that LLMs can directly perform translation without any specific training, via their in-context learning (ICL) capability. However, the relative importance of each type of resource (e.g., dictionary, grammar book, and retrieved parallel examples) is not entirely clear. To address this gap, this study systematically investigates how each resource and its quality affect translation performance, with the Manchu language as our case study. To remove any prior knowledge of Manchu encoded in the LLM parameters and single out the effect of ICL, we also experiment with an encrypted version of Manchu texts. Our results indicate that high-quality dictionaries and good parallel examples are very helpful, while grammars hardly help. In a follow-up study, we showcase a promising application of in-context MT: parallel data augmentation as a way to bootstrap the conventional MT model. When monolingual data abound, generating synthetic parallel data through in-context MT offers a pathway to mitigate data scarcity and build effective and efficient low-resource neural MT systems.

MCML Authors

Lost in Multilinguality: Dissecting Cross-lingual Factual Inconsistency in Transformer Language Models.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Multilingual language models (MLMs) store factual knowledge across languages but often struggle to provide consistent responses to semantically equivalent prompts in different languages. While previous studies point out this cross-lingual inconsistency issue, the underlying causes remain unexplored. In this work, we use mechanistic interpretability methods to investigate cross-lingual inconsistencies in MLMs. We find that MLMs encode knowledge in a language-independent concept space through most layers, and only transition to language-specific spaces in the final layers. Failures during the language transition often result in incorrect predictions in the target language, even when the answers are correct in other languages. To mitigate this inconsistency issue, we propose a linear shortcut method that bypasses computations in the final layers, enhancing both prediction accuracy and cross-lingual consistency. Our findings shed light on the internal mechanisms of MLMs and provide a lightweight, effective strategy for producing more consistent factual outputs.

MCML Authors

Findings Track (8 papers)

Analyzing the Effect of Linguistic Similarity on Cross-Lingual Transfer: Tasks and Experimental Setups Matter.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Cross-lingual transfer is a popular approach to increase the amount of training data for NLP tasks in a low-resource context. However, the best strategy to decide which cross-lingual data to include is unclear. Prior research often focuses on a small set of languages from a few language families and/or a single task. It is still an open question how these findings extend to a wider variety of languages and tasks. In this work, we analyze cross-lingual transfer for 263 languages from a wide variety of language families. Moreover, we include three popular NLP tasks: POS tagging, dependency parsing, and topic classification. Our findings indicate that the effect of linguistic similarity on transfer performance depends on a range of factors: the NLP task, the (mono- or multilingual) input representations, and the definition of linguistic similarity.

MCML Authors

A Rose by Any Other Name: LLM-Generated Explanations Are Good Proxies for Human Explanations to Collect Label Distributions on NLI.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Disagreement in human labeling is ubiquitous, and can be captured in human judgment distributions (HJDs). Recent research has shown that explanations provide valuable information for understanding human label variation (HLV) and that large language models (LLMs) can approximate HJDs from a few human-provided label-explanation pairs. However, collecting explanations for every label is still time-consuming. This paper examines whether LLMs can be used to replace humans in generating explanations for approximating HJDs. Specifically, we use LLMs as annotators to generate model explanations for a few given human labels. We test ways to obtain and combine these label-explanation pairs with the goal of approximating the human judgment distribution. We further compare human explanations with the resulting model-generated explanations, and test automatic and human explanation selection. Our experiments show that LLM explanations are promising for NLI: to estimate HJDs, generated explanations yield results comparable to those of human explanations when provided with human labels. Importantly, our results generalize from datasets with human explanations to i) datasets where they are not available and ii) challenging out-of-distribution test sets.

MCML Authors

EXECUTE: A Multilingual Benchmark for LLM Token Understanding.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

The CUTE benchmark showed that LLMs struggle with character understanding in English. We extend it to more languages with diverse scripts and writing systems, introducing EXECUTE. Our simplified framework allows easy expansion to any language. Tests across multiple LLMs reveal that challenges in other languages are not always on the character level as in English. Some languages show word-level processing issues, some show no issues at all. We also examine sub-character tasks in Chinese, Japanese, and Korean to assess LLMs’ understanding of character components.

MCML Authors

Time Course MechInterp: Analyzing the Evolution of Components and Knowledge in Large Language Models.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Understanding how large language models (LLMs) acquire and store factual knowledge is crucial for enhancing their interpretability and reliability. In this work, we analyze the evolution of factual knowledge representation in the OLMo-7B model by tracking the roles of its attention heads and feed forward networks (FFNs) over the course of pre-training. We classify these components into four roles: general, entity, relation-answer, and fact-answer specific, and examine their stability and transitions. Our results show that LLMs initially depend on broad, general-purpose components, which later specialize as training progresses. Once the model reliably predicts answers, some components are repurposed, suggesting an adaptive learning process. Notably, attention heads display the highest turnover. We also present evidence that FFNs remain more stable throughout training. Furthermore, our probing experiments reveal that location-based relations converge to high accuracy earlier in training than name-based relations, highlighting how task complexity shapes acquisition dynamics. These insights offer a mechanistic view of knowledge formation in LLMs.

MCML Authors

Large Language Models as Neurolinguistic Subjects: Discrepancy between Performance and Competence.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

This study investigates the linguistic understanding of Large Language Models (LLMs) regarding signifier (form) and signified (meaning) by distinguishing two LLM assessment paradigms: psycholinguistic and neurolinguistic. Traditional psycholinguistic evaluations often reflect statistical rules that may not accurately represent LLMs’ true linguistic competence. We introduce a neurolinguistic approach, utilizing a novel method that combines minimal pair and diagnostic probing to analyze activation patterns across model layers. This method allows for a detailed examination of how LLMs represent form and meaning, and whether these representations are consistent across languages. We found that: (1) psycholinguistic and neurolinguistic methods reveal that language performance and competence are distinct; (2) direct probability measurement may not accurately assess linguistic competence; (3) instruction tuning does not change competence much but improves performance; (4) LLMs exhibit higher competence and performance in form compared to meaning. Additionally, we introduce new conceptual minimal pair datasets for Chinese (COMPS-ZH) and German (COMPS-DE), complementing existing English datasets.

MCML Authors

How Programming Concepts and Neurons Are Shared in Code Language Models.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL GitHub

Abstract

Several studies have explored the mechanisms of large language models (LLMs) in coding tasks, but most have focused on programming languages (PLs) in a monolingual setting. In this paper, we investigate the relationship between multiple PLs and English in the concept space of LLMs. We perform a few-shot translation task on 21 PL pairs using two Llama-based models. By decoding the embeddings of intermediate layers during this task, we observe that the concept space is closer to English (including PL keywords) and assigns high probabilities to English tokens in the second half of the intermediate layers. We analyze neuron activations for 11 PLs and English, finding that while language-specific neurons are primarily concentrated in the bottom layers, those exclusive to each PL tend to appear in the top layers. For PLs that are highly aligned with multiple other PLs, identifying language-specific neurons is not feasible. These PLs also tend to have a larger keyword set than other PLs and are closer to the model’s concept space regardless of the input/output PL in the translation task. Our findings provide insights into how LLMs internally represent PLs, revealing structural patterns in the model’s concept space.

MCML Authors

MEXA: Multilingual Evaluation of English-Centric LLMs via Cross-Lingual Alignment.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

English-centric large language models (LLMs) often show strong multilingual capabilities. However, the multilingual performance of these models remains unclear and is not thoroughly evaluated for many languages. Most benchmarks for multilinguality focus on classic NLP tasks, or cover a minimal number of languages. We introduce MEXA, a method for assessing the multilingual capabilities of pre-trained English-centric LLMs using parallel sentences, which are available for more languages than existing downstream tasks. MEXA leverages the fact that English-centric LLMs use English as a kind of pivot language in their intermediate layers. It computes the alignment between English and non-English languages using parallel sentences to evaluate the transfer of language understanding from English to other languages. This alignment can be used to estimate model performance in other languages. We conduct studies using various parallel datasets (FLORES-200 and Bible), models (Llama family, Gemma family, Mistral, and OLMo), and established downstream tasks (Belebele, m-MMLU, and m-ARC). We explore different methods to compute embeddings in decoder-only models. Our results show that MEXA, in its default settings, achieves a statistically significant average Pearson correlation of 0.90 with three established downstream tasks across nine models and two parallel datasets. This suggests that MEXA is a reliable method for estimating the multilingual capabilities of English-centric LLMs, providing a clearer understanding of their multilingual potential and the inner workings of LLMs.

MCML Authors

taz2024full: Analysing German Newspapers for Gender Bias and Discrimination across Decades.

Findings @ACL 2025 - Findings of the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Open-access corpora are essential for advancing natural language processing (NLP) and computational social science (CSS). However, large-scale resources for German remain limited, restricting research on linguistic trends and societal issues such as gender bias. We present taz2024full, the largest publicly available corpus of German newspaper articles to date, comprising over 1.8 million texts from taz, spanning 1980 to 2024. As a demonstration of the corpus’s utility for bias and discrimination research, we analyse gender representation across four decades of reporting. We find a consistent overrepresentation of men, but also a gradual shift toward more balanced coverage in recent years. Using a scalable, structured analysis pipeline, we provide a foundation for studying actor mentions, sentiment, and linguistic framing in German journalistic texts. The corpus supports a wide range of applications, from diachronic language analysis to critical media studies, and is freely available to foster inclusive and reproducible research in German-language NLP.

MCML Authors

Workshops (12 papers)

A Practical Tool to Help Automate Interlinear Glossing: a Study on Mukrī Kurdish.

Field Matters @ACL 2025 - 4th Workshop on NLP Applications to Field Linguistics at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Interlinear gloss generation aims to predict linguistic annotations (gloss) for a sentence in a language that is usually under ongoing documentation. Such output is a first draft for the linguist to work with and should reduce the manual workload. This article studies a simple glossing pipeline based on a Conditional Random Field and applies it to a small fieldwork corpus in Mukrī Kurdish, a variety of Central Kurdish. We mainly focus on making the tool as accessible as possible for field linguists, so it can run on standard computers without the need for GPUs. Our pipeline predicts common grammatical patterns robustly and, more generally, frequent combinations of morphemes and glosses. Although more advanced neural models do reach better results, our feature-based system still manages to be competitive and to provide interpretability. To foster further collaboration between field linguistics and NLP, we also provide some recommendations regarding documentation endeavours and release our pipeline code alongside.

MCML Authors

Testing Spatial Intuitions of Humans and Large Language and Multimodal Models in Analogies.

Analogy-Angle II @ACL 2025 - 2nd Workshop on Analogical Abstraction in Cognition, Perception, and Language at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Language and Vision-Language Models exhibit impressive language capabilities akin to human reasoning. However, unlike humans who acquire language through embodied, interactive experiences, these models learn from static datasets without real-world interaction. This difference raises questions about how they conceptualize abstract notions and whether their reasoning aligns with human cognition. We investigate spatial conceptualizations of LLMs and VLMs by conducting analogy prompting studies with LLMs, VLMs, and human participants. We assess their ability to generate and interpret analogies for spatial concepts. We quantitatively compare the analogies produced by each group, examining the impact of multimodal inputs and reasoning mechanisms. Our findings indicate that generative models can produce and interpret analogies but differ significantly from human reasoning in their abstraction of spatial concepts, with variability influenced by input modality, model size, and prompting methods, and with analogy-based prompts not consistently enhancing alignment. Contributions include a methodology for probing generative models through analogies; a comparative analysis of analogical reasoning among models and humans; and insights into the effect of multimodal inputs on reasoning.

MCML Authors

Your Pretrained Model Tells the Difficulty Itself: A Self-Adaptive Curriculum Learning Paradigm for Natural Language Understanding.

SRW @ACL 2025 - Student Research Workshop at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Curriculum learning is a widely adopted training strategy in natural language processing (NLP), where models are exposed to examples organized by increasing difficulty to enhance learning efficiency and performance. However, most existing approaches rely on manually defined difficulty metrics – such as text length – which may not accurately reflect the model’s own perspective. To overcome this limitation, we present a self-adaptive curriculum learning paradigm that prioritizes fine-tuning examples based on difficulty scores predicted by pre-trained language models (PLMs) themselves. Building on these scores, we explore various training strategies that differ in the ordering of examples for the fine-tuning: from easy-to-hard, hard-to-easy, to mixed sampling. We evaluate our method on four natural language understanding (NLU) datasets covering both binary and multi-class classification tasks. Experimental results show that our approach leads to faster convergence and improved performance compared to standard random sampling.

MCML Authors

Towards Better Open-Ended Text Generation: A Multicriteria Evaluation Framework.

GEM2 @ACL 2025 - 4th Workshop on Generation, Evaluation and Metrics at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Open-ended text generation has become a prominent task in natural language processing due to the rise of powerful (large) language models. However, evaluating the quality of these models and the employed decoding strategies remains challenging because of trade-offs among widely used metrics such as coherence, diversity, and perplexity. Decoding methods often excel in some metrics while underperforming in others, complicating the establishment of a clear ranking. In this paper, we present novel ranking strategies within this multicriteria framework. Specifically, we employ benchmarking approaches based on partial orderings and present a new summary metric designed to balance existing automatic indicators, providing a more holistic evaluation of text generation quality. Furthermore, we discuss the alignment of these approaches with human judgments. Our experiments demonstrate that the proposed methods offer a robust way to compare decoding strategies, exhibit similarities with human preferences, and serve as valuable tools in guiding model selection for open-ended text generation tasks. Finally, we suggest future directions for improving evaluation methodologies in text generation. Our codebase, datasets, and models are publicly available.

MCML Authors

Language Model Re-rankers are Fooled by Lexical Similarities.

FEVER @ACL 2025 - 8th Fact Extraction and VERification Workshop at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Language model (LM) re-rankers are used to refine retrieval results for retrieval-augmented generation (RAG). They are more expensive than lexical matching methods like BM25 but assumed to better process semantic information and the relations between the query and the retrieved answers. To understand whether LM re-rankers always live up to this assumption, we evaluate 6 different LM re-rankers on the NQ, LitQA2 and DRUID datasets. Our results show that LM re-rankers struggle to outperform a simple BM25 baseline on DRUID. Leveraging a novel separation metric based on BM25 scores, we explain and identify re-ranker errors stemming from lexical dissimilarities. We also investigate different methods to improve LM re-ranker performance and find these methods mainly useful for NQ. Taken together, our work identifies and explains weaknesses of LM re-rankers and points to the need for more adversarial and realistic datasets for their evaluation.
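For readers unfamiliar with the lexical baseline mentioned in this abstract, the following is a minimal sketch of Okapi BM25 scoring. It is not the paper's code: the function name `bm25_scores`, the toy query and documents, and the parameter defaults `k1=1.5` and `b=0.75` are illustrative assumptions, and tokenization is plain whitespace splitting.

```python
import math
from collections import Counter


def bm25_scores(query_tokens, docs_tokens, k1=1.5, b=0.75):
    """Score each tokenized document against the query with Okapi BM25.

    Hypothetical helper for illustration; k1 and b are common default choices.
    """
    n_docs = len(docs_tokens)
    avg_len = sum(len(d) for d in docs_tokens) / n_docs

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for d in docs_tokens:
        doc_freq.update(set(d))

    scores = []
    for d in docs_tokens:
        tf = Counter(d)
        score = 0.0
        for term in query_tokens:
            if term not in tf:
                continue  # terms absent from the document contribute nothing
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy data (invented): the first document answers the question without sharing
# any query word; the second shares words but does not answer it.
query = "who wrote hamlet".split()
docs = [
    "shakespeare is the author of the play".split(),
    "who wrote the review of hamlet".split(),
]
scores = bm25_scores(query, docs)
```

In this toy case the purely lexical score favors the second document, which is the kind of lexical-overlap signal the abstract contrasts with semantic relevance.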

MCML Authors

XCOMPS: A Multilingual Benchmark of Conceptual Minimal Pairs.

SIGTYP @ACL 2025 - 7th Workshop on Research in Computational Linguistic Typology and Multilingual NLP at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

We introduce XCOMPS in this work, a multilingual conceptual minimal pair dataset covering 17 languages. Using this dataset, we evaluate LLMs’ multilingual conceptual understanding through metalinguistic prompting, direct probability measurement, and neurolinguistic probing. By comparing base, instruction-tuned, and knowledge-distilled models, we find that: 1) LLMs exhibit weaker conceptual understanding for low-resource languages, and accuracy varies across languages despite being tested on the same concept sets. 2) LLMs excel at distinguishing concept-property pairs that are visibly different but exhibit a marked performance drop when negative pairs share subtle semantic similarities. 3) Instruction tuning improves performance in concept understanding but does not enhance internal competence; knowledge distillation can enhance internal competence in conceptual understanding for low-resource languages with limited gains in explicit task performance. 4) More morphologically complex languages yield lower concept understanding scores and require deeper layers for conceptual reasoning.

MCML Authors

Mind the Gap: Gender-based Differences in Occupational Embeddings.

GeBNLP @ACL 2025 - 6th Workshop on Gender Bias in Natural Language Processing at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Large Language Models (LLMs) offer promising alternatives to traditional occupational coding approaches in survey research. Using a German dataset, we examine the extent to which LLM-based occupational coding differs by gender. Our findings reveal systematic disparities: gendered job titles (e.g., “Autor” vs. “Autorin”, meaning “male author” vs. “female author”) frequently result in diverging occupation codes, even when semantically identical. Across all models, 54%–82% of gendered inputs obtain different Top-5 suggestions. The practical impact, however, depends on the model. GPT includes the correct code most often (62%) but demonstrates female bias (up to +18 pp). IBM is less accurate (51%) but largely balanced. Alibaba, Gemini, and MiniLM achieve about 50% correct-code inclusion, and their small (< 10 pp) and direction-flipping gaps could indicate sampling noise rather than gender bias. We discuss these findings in the context of fairness and reproducibility in NLP applications for social data.

MCML Authors

In-Context Learning of Soft Nearest Neighbor Classifiers for Intelligible Tabular Machine Learning.

TRL @ACL 2025 - 4th Table Representation Learning Workshop at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

With in-context learning foundation models like TabPFN excelling on small supervised tabular learning tasks, it has been argued that ‘boosted trees are not the best default choice when working with data in tables’. However, such foundation models are inherently black-box models that do not provide interpretable predictions. We introduce a novel learning task to train ICL models to act as a nearest neighbor algorithm, which enables intelligible inference and does not decrease performance empirically.
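As a rough illustration of what a soft nearest neighbor classifier computes, the sketch below weights each training example by a softmax over negative squared distances to the query and sums the weights per class. This is a generic textbook-style formulation, not the model from the paper; the function name, the `temperature` parameter, and the toy data are assumptions made for this example.

```python
import math
from collections import defaultdict


def soft_nearest_neighbor_predict(train_x, train_y, query, temperature=1.0):
    """Return (class probabilities, per-example weights) for one query point.

    Hypothetical helper: weights are a softmax over negative squared
    Euclidean distances, so closer training examples count more.
    """
    logits = []
    for x in train_x:
        sq_dist = sum((a - b) ** 2 for a, b in zip(x, query))
        logits.append(-sq_dist / temperature)

    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    weights = [e / total for e in exps]

    class_probs = defaultdict(float)
    for w, label in zip(weights, train_y):
        class_probs[label] += w
    return dict(class_probs), weights


# Toy data (invented): two well-separated clusters with labels "a" and "b".
train_x = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
train_y = ["a", "a", "b", "b"]
probs, weights = soft_nearest_neighbor_predict(train_x, train_y, (0.2, 0.1))
```

The per-example weights are what makes such a prediction inspectable: each one states how much a specific training row contributed to the output.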

MCML Authors

Matthias Feurer

Prof. Dr.

Thomas Bayes Fellow

* Former Thomas Bayes Fellow

From Knowledge to Noise: CTIM-Rover and the Pitfalls of Episodic Memory in Software Engineering Agents.

REALM @ACL 2025 - 1st Workshop for Research on Agent Language Models at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

We introduce CTIM-Rover, an AI agent for Software Engineering (SE) built on top of AutoCodeRover (Zhang et al., 2024) that extends agentic reasoning frameworks with an episodic memory, more specifically, a general and repository-level Cross-Task-Instance Memory (CTIM). While existing open-source SE agents mostly rely on ReAct (Yao et al., 2023b), Reflexion (Shinn et al., 2023), or Code-Act (Wang et al., 2024), all of these reasoning and planning frameworks inefficiently discard their long-term memory after a single task instance. As repository-level understanding is pivotal for identifying all locations requiring a patch for fixing a bug, we hypothesize that SE is particularly well positioned to benefit from CTIM. For this, we build on the Experiential Learning (EL) approach ExpeL (Zhao et al., 2024), proposing a Mixture-Of-Experts (MoEs) inspired approach to create both a general-purpose and repository-level CTIM. We find that CTIM-Rover does not outperform AutoCodeRover in any configuration and thus conclude that neither ExpeL nor DoT-Bank (Lingam et al., 2024) scale to real-world SE problems. Our analysis indicates noise introduced by distracting CTIM items or exemplar trajectories as the likely source of the performance degradation.

MCML Authors

Construction-Based Reduction of Translationese for Low-Resource Languages: A Pilot Study on Bavarian.

SIGTYP @ACL 2025 - 7th Workshop on Research in Computational Linguistic Typology and Multilingual NLP at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL GitHub

Abstract

When translating into a low-resource language, a language model can have a tendency to produce translations that are close to the source (e.g., word-by-word translations) due to a lack of rich low-resource training data in pretraining. Thus, the output often is translationese that differs considerably from what native speakers would produce naturally. To remedy this, we synthetically create a training set in which the frequency of a construction unique to the low-resource language is artificially inflated. For the case of Bavarian, we show that, after training, the language model has learned the unique construction and that native speakers judge its output as more natural. Our pilot study suggests that construction-based mitigation of translationese is a promising approach.

MCML Authors

HYPEROFA: Expanding LLM Vocabulary to New Languages via Hypernetwork-Based Embedding Initialization.

SRW @ACL 2025 - Student Research Workshop at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Many pre-trained language models (PLMs) exhibit suboptimal performance on mid- and low-resource languages, largely due to limited exposure to these languages during pre-training. A common strategy to address this is to introduce new tokens specific to the target languages, initialize their embeddings, and apply continual pre-training on target-language data. Among such methods, OFA (Liu et al., 2024a) proposes a similarity-based subword embedding initialization heuristic that is both effective and efficient. However, OFA restricts target-language token embeddings to be convex combinations of a fixed number of source-language embeddings, which may limit expressiveness. To overcome this limitation, we propose HYPEROFA, a hypernetwork-based approach for more adaptive token embedding initialization. The hypernetwork is trained to map from an external multilingual word vector space to the PLM’s token embedding space using source-language tokens. Once trained, it can generate flexible embeddings for target-language tokens, serving as a good starting point for continual pre-training. Experiments demonstrate that HYPEROFA consistently outperforms the random initialization baseline and matches or exceeds the performance of OFA in both continual pre-training convergence and downstream task performance. We make the code publicly available.

MCML Authors

Do LLMs Give Psychometrically Plausible Responses in Educational Assessments?

BEA @ACL 2025 - 20th Workshop on Innovative Use of NLP for Building Educational Applications at the 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025. URL

Abstract

Knowing how test takers answer items in educational assessments is essential for test development, to evaluate item quality, and to improve test validity. However, this process usually requires extensive pilot studies with human participants. If large language models (LLMs) exhibit human-like response behavior to test items, this could open up the possibility of using them as pilot participants to accelerate test development. In this paper, we evaluate the human-likeness or psychometric plausibility of responses from 18 instruction-tuned LLMs with two publicly available datasets of multiple-choice test items across three subjects: reading, U.S. history, and economics. Our methodology builds on two theoretical frameworks from psychometrics which are commonly used in educational assessment, classical test theory and item response theory. The results show that while larger models are excessively confident, their response distributions can be more human-like when calibrated with temperature scaling. In addition, we find that LLMs tend to correlate better with humans in reading comprehension items compared to other subjects. However, the correlations are not very strong overall, indicating that LLMs should not be used for piloting educational assessments in a zero-shot setting.
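The temperature scaling mentioned in this abstract can be sketched as dividing a model's raw option scores by a temperature before the softmax, which flattens an overconfident distribution when the temperature is above 1. The snippet below is a minimal illustration under that assumption; the function name and the example scores are invented, and it does not reproduce the paper's calibration procedure.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw option scores into probabilities, softened by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for the four answer options of one multiple-choice item.
option_logits = [4.0, 1.0, 0.5, 0.0]
sharp = softmax_with_temperature(option_logits, temperature=1.0)
soft = softmax_with_temperature(option_logits, temperature=3.0)
```

With the higher temperature the top option keeps its rank but receives less probability mass, so the distribution over options is less peaked.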

MCML Authors

#research #top-tier-work #bischl #feurer #fraser #hedderich #kreuter #plank #schuetze #tresp

Related

16.10.2025

SIC: Making AI Image Classification Understandable

SIC by the team of Christian Wachinger at ICCV 2025: Transparent AI for intuitive, reliable, and interpretable medical image classification.

09.10.2025

Rethinking AI in Public Institutions - Balancing Prediction and Capacity

Unai Fischer Abaigar explores how AI can make public decisions fairer, smarter, and more effective.

08.10.2025

MCML-LAMARR Workshop at University of Bonn

MCML and Lamarr researchers met in Bonn to exchange ideas on NLP, LLM finetuning, and AI ethics.

08.10.2025

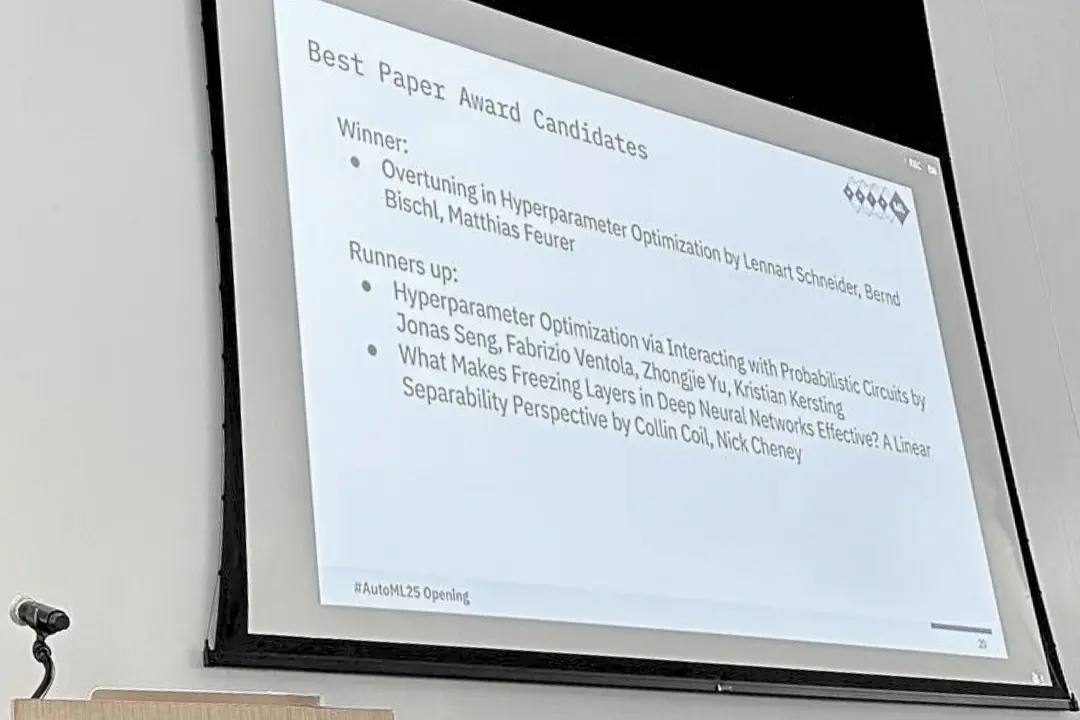

Three MCML Members Win Best Paper Award at AutoML 2025

Former MCML TBF Matthias Feurer and Director Bernd Bischl’s paper on overtuning won Best Paper at AutoML 2025, offering insights for robust HPO.