29.01.2026

How Machines Can Discover Hidden Rules Without Supervision

MCML Research Insight - With Tobias Schmidt and Steffen Schneider

How can machines learn the hidden rules that govern how systems change—how objects move, how weather patterns unfold, or how biological signals evolve—without ever being told what those rules are?

«We proposed a first identifiable, end-to-end, non-generative inference algorithm for latent switching dynamics along with an empirically successful parameterization of non-linear dynamics.»

Tobias Schmidt

MCML Junior Member

New research by first author Rodrigo González Laiz, MCML Junior Member Tobias Schmidt, and MCML PI Steffen Schneider shows that this is possible. Their ICLR 2025 paper demonstrates that self-supervised contrastive learning can uncover the latent dynamics of complex systems, even when the observations are high-dimensional and unlabeled.

Their framework, Dynamics Contrastive Learning (DCL), reveals that contrastive learning—which is already widely used for images, audio, and text—can also perform non-linear system identification: learning a system’s hidden state and its transition rules directly from raw data.

Learning Hidden Dynamics – Two Angles on the Contribution

(1) Bridging Contrastive Learning and System Identification

Contrastive learning has become a central tool for building representations from images, speech, and many other data types. Yet its ability to reveal the underlying dynamical rules of time series has remained an open question.

This work shows that when contrastive learning is paired with a future-prediction step in latent space, the model can recover both the latent state and the transition function without supervision. Under well-defined assumptions, the learned representation aligns with the true hidden variables up to a linear transformation, and the learned dynamics reflect how those variables truly evolve.

In short: contrastive learning can do more than represent sequences; it can identify the dynamical systems behind them.
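Why does recovering the latents only "up to a linear transformation" still pin down the dynamics? A short worked derivation for the simplest case makes this concrete. It assumes linear true dynamics $A$ and an invertible linear map $L$ between true and learned latents; it is an illustration, not the paper's general theorem.

```latex
\begin{aligned}
z_{t+1} &= A\,z_t && \text{(true latent dynamics)}\\
\hat z_t &= L\,z_t && \text{(learned latents, } L \text{ invertible)}\\
\hat z_{t+1} &= L\,z_{t+1} = L A\,z_t = \big(L A L^{-1}\big)\,\hat z_t
\end{aligned}
```

The learned transition matrix $\hat A = L A L^{-1}$ is similar to $A$, so it has the same eigenvalues: the rotation rates, growth, and decay of the recovered system match those of the true one.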

(2) Simplifying System Identification via Encoder-Only Learning

Many classical system identification techniques rely on generative modeling: they must describe how latent states produce observations. For rich data such as videos, neural recordings, or molecular simulations, this is often the hardest part. DCL avoids this bottleneck entirely: an encoder extracts latent states, and a dynamics model predicts how these latents evolve.

There is no decoder and no need to model the generative process explicitly. Despite this simplicity, the theoretical results guarantee identifiability of both the latent space and the dynamics up to an affine transform.

«The DCL framework is versatile and allows to study a wide range of natural phenomena and to describe the underlying rules that govern them.»

Tobias Schmidt

MCML Junior Member

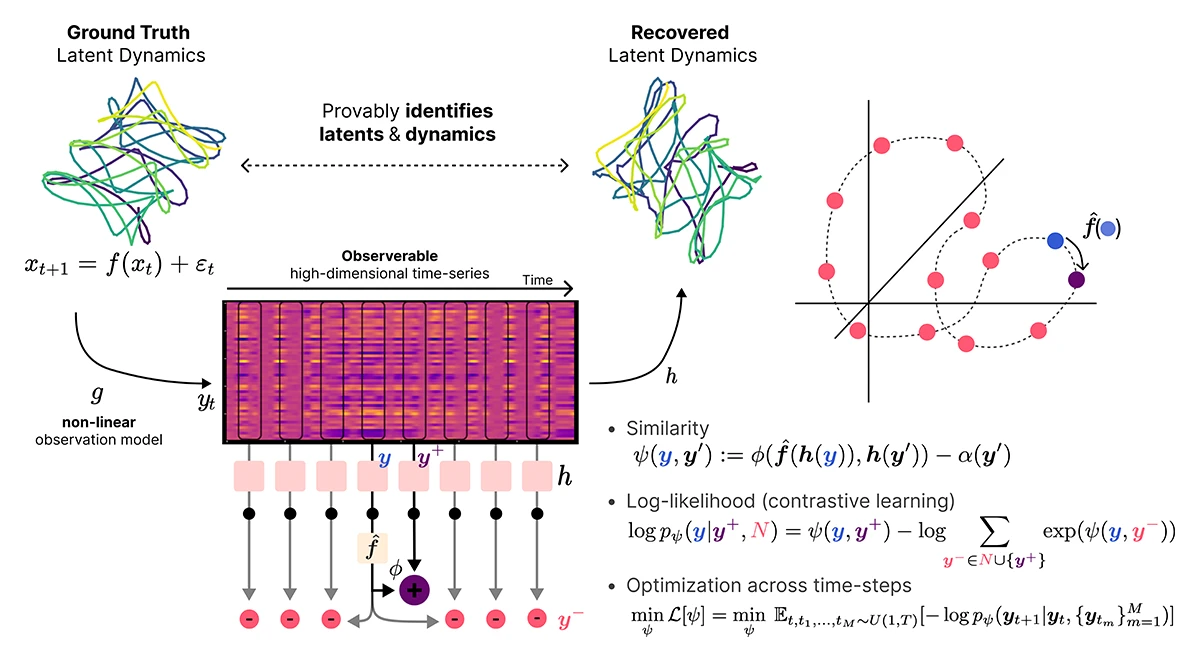

The Dynamics Contrastive Learning (DCL) Framework

DCL extends standard contrastive learning by placing a dynamics model directly inside the contrastive loss.

- An encoder maps each observation into a latent state.

- A dynamics model predicts the next latent state from the current one.

- A contrastive objective pulls each predicted latent toward the latent of the actual next observation while pushing it away from negative samples.

Through this joint training, the model learns both a meaningful latent representation of the system and the rules that govern how this representation evolves.
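The three components can be combined into a single InfoNCE-style loss. The sketch below is a minimal, dependency-free illustration, not the authors' implementation: the linear `encoder` and `dynamics` functions, the negative squared Euclidean distance used as `similarity`, and the uniform sampling of negatives from other time points are all assumptions chosen for readability. It only evaluates the loss; real training would optimize the encoder and dynamics parameters by gradient descent.

```python
import math
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def encoder(x, W):
    """Hypothetical encoder: a linear map from observation x to latent z."""
    return matvec(W, x)

def dynamics(z, A):
    """Hypothetical linear dynamics model: predicts the next latent from z."""
    return matvec(A, z)

def similarity(a, b):
    """Assumed similarity: negative squared Euclidean distance."""
    return -sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def dcl_loss(xs, W, A, num_neg=4, seed=0):
    """InfoNCE-style loss over consecutive pairs (x_t, x_{t+1}).

    The positive for step t is the encoded x_{t+1}; negatives are encoded
    observations from other, uniformly sampled time points."""
    rng = random.Random(seed)
    zs = [encoder(x, W) for x in xs]
    total = 0.0
    for t in range(len(xs) - 1):
        pred = dynamics(zs[t], A)
        candidates = [i for i in range(len(xs)) if i != t + 1]
        negatives = rng.sample(candidates, num_neg)
        logits = [similarity(pred, zs[t + 1])]
        logits += [similarity(pred, zs[i]) for i in negatives]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in logits))
        total += log_sum_exp - logits[0]  # cross-entropy, positive at index 0
    return total / (len(xs) - 1)

# Usage: a 2-D rotation observed through a fixed (hypothetical) linear mixing.
theta = 0.5
R = [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]
M = [[2.0, 1.0], [1.0, 1.0]]        # observation model: x = M z
M_inv = [[1.0, -1.0], [-1.0, 2.0]]  # exact inverse of M, used as the encoder
z = [1.0, 0.0]
xs = []
for _ in range(20):
    xs.append(matvec(M, z))
    z = matvec(R, z)

loss_true = dcl_loss(xs, M_inv, R)
loss_zero = dcl_loss(xs, M_inv, [[0.0, 0.0], [0.0, 0.0]])
print(loss_true < loss_zero)  # True
```

With the true dynamics, each prediction lands exactly on the next encoded latent, so the loss is lower than for the all-zero dynamics, which scores every candidate equally and ends up at log(5) with four negatives.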

Flexible Dynamics Models

DCL supports a range of possible dynamics models:

- Linear dynamics using a single linear transformation

- Switching dynamics via a bank of linear models with a smooth mechanism that selects which regime applies at each moment

- Non-linear or chaotic dynamics approximated as sequences of locally linear transitions

This flexibility allows DCL to scale from simple stable systems to highly non-linear trajectories without ever modeling the raw observations directly.
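The switching case can be pictured as a soft mixture over a bank of linear models. This is an illustrative sketch, not the paper's parameterization: the `gating_scores` rule (score each regime by how well it predicts the observed next latent), the softmax temperature, and the two example regimes are assumptions. A bank with a single matrix reduces to plain linear dynamics, and a larger bank of local linear models is one way to approximate non-linear dynamics.

```python
import math

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def gating_scores(z_t, z_next, bank):
    """Assumed gating rule: score each regime by its negative squared
    one-step prediction error (requires the observed next latent)."""
    return [-sum((p - q) ** 2 for p, q in zip(matvec(A, z_t), z_next))
            for A in bank]

def switching_step(z_t, bank, weights):
    """Next-latent prediction as a weighted mixture of linear models."""
    preds = [matvec(A, z_t) for A in bank]
    return [sum(w * p[i] for w, p in zip(weights, preds))
            for i in range(len(z_t))]

# A bank of two regimes: "hold still" and "rotate by 90 degrees".
identity = [[1.0, 0.0], [0.0, 1.0]]
rot90 = [[0.0, -1.0], [1.0, 0.0]]
bank = [identity, rot90]

z_t, z_next = [1.0, 0.0], [0.0, 1.0]
temperature = 0.01  # small temperature -> nearly hard regime selection
weights = softmax([s / temperature for s in gating_scores(z_t, z_next, bank)])
prediction = switching_step(z_t, bank, weights)
print([round(w, 3) for w in weights])     # [0.0, 1.0]
print([round(p, 3) for p in prediction])  # [0.0, 1.0]
```

Because this gating rule looks at the next latent, it only applies when that latent is available, for example during training. The temperature controls how close the soft selection comes to a hard choice of a single regime.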

©Laiz et al.

Figure 1: Contrastive learning of dynamical non-linear systems

Why This Matters

This work helps explain why self-supervised approaches often succeed on sequential or temporal data: they are not only learning embeddings, but also implicitly capturing the system’s temporal structure. With DCL, this ability becomes explicit and theoretically grounded. DCL leverages time information without relying on additional labels and learns both the latent space and suitable dynamics in an end-to-end, identifiable way, opening new possibilities for scientific discovery, control, and data-driven modeling.

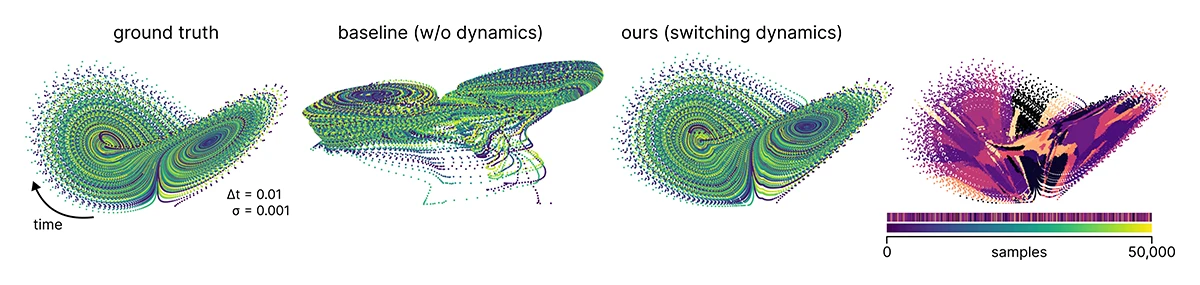

©Laiz et al.

Figure 2: DCL recovers non-linear latent dynamics

DCL in Action

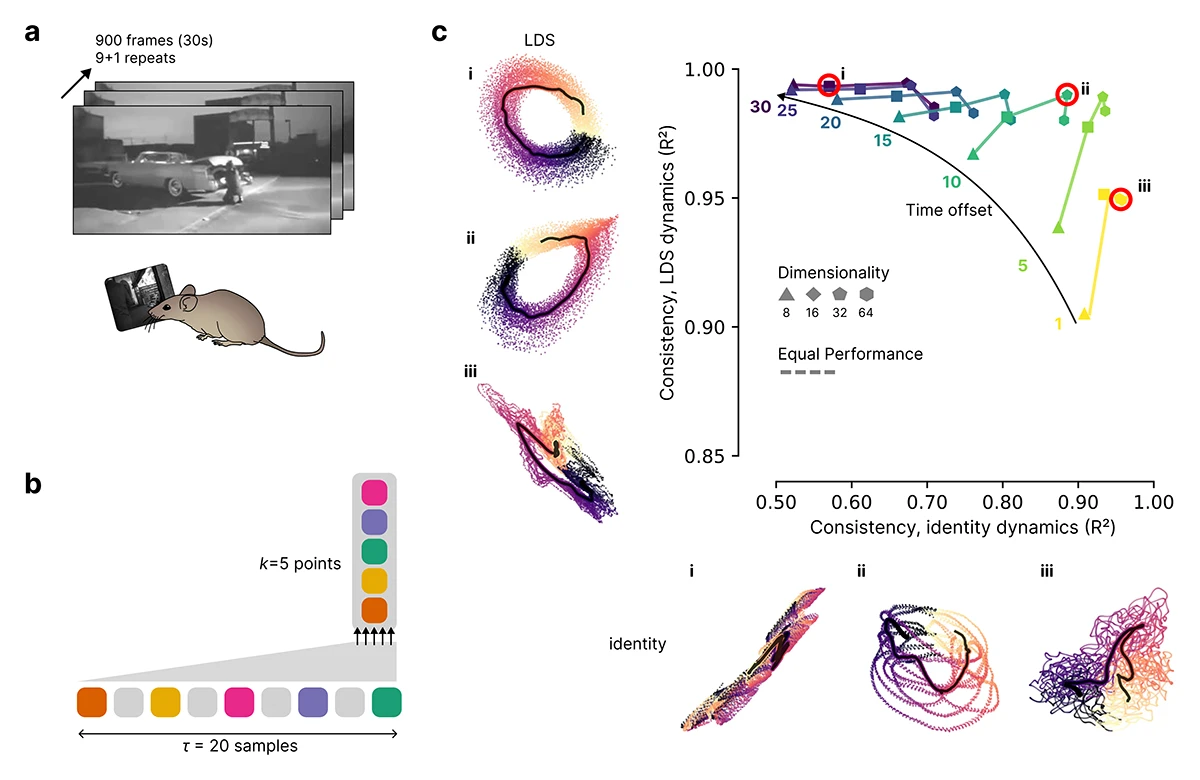

The authors demonstrate DCL on a range of datasets, including real neural population recordings collected during sensory stimulation. Even in noisy biological settings, the model extracts low-dimensional latent trajectories that reflect coherent, stimulus-locked dynamics—something that encoder-only contrastive methods without an explicit dynamics model cannot reliably capture.

This illustrates DCL’s practical value: it can uncover structure in complex systems where the generative process is unknown or too difficult to model, while remaining simple to train.

©Laiz et al.

Figure 3: Analysis of dynamics in visual cortex during a movie stimulus

Further Reading & Reference

While this article introduces the key ideas, the original paper explores the full theory behind how contrastive learning can recover hidden dynamics, along with extensive experiments across linear, switching, and non-linear systems.

If you are curious about the DCL framework (DynCL in the paper), its mathematical guarantees, and the empirical results, check out the original paper, presented at ICLR 2025, an A* conference and one of the leading international conferences in artificial intelligence.

Self-supervised contrastive learning performs non-linear system identification.

ICLR 2025 - 13th International Conference on Learning Representations. Singapore, Apr 24-28, 2025.
