11.12.2025

From Sitting Dog to Standing: A New Way to Morph 3D Shapes

MCML Research Insight - With Lu Sang and Daniel Cremers

Ever wondered how a 3D shape can smoothly change — like a robot arm bending or a dog rising from sitting to standing — without complex simulations or hand-crafted data? Researchers from MCML and the University of Bonn tackled this challenge in their ICLR 2025 paper, “Implicit Neural Surface Deformation with Explicit Velocity Fields”.

MCML Junior Member Lu Sang and our Director Daniel Cremers, together with Zehranaz Canfes, Dongliang Cao, and Florian Bernard, present a method that combines neural implicit surfaces with explicit velocity fields to model continuous, physics-aware 3D deformations directly between point clouds. The result: smoother and more realistic shape transformations, with potential applications in robotics, computer vision, and graphics.

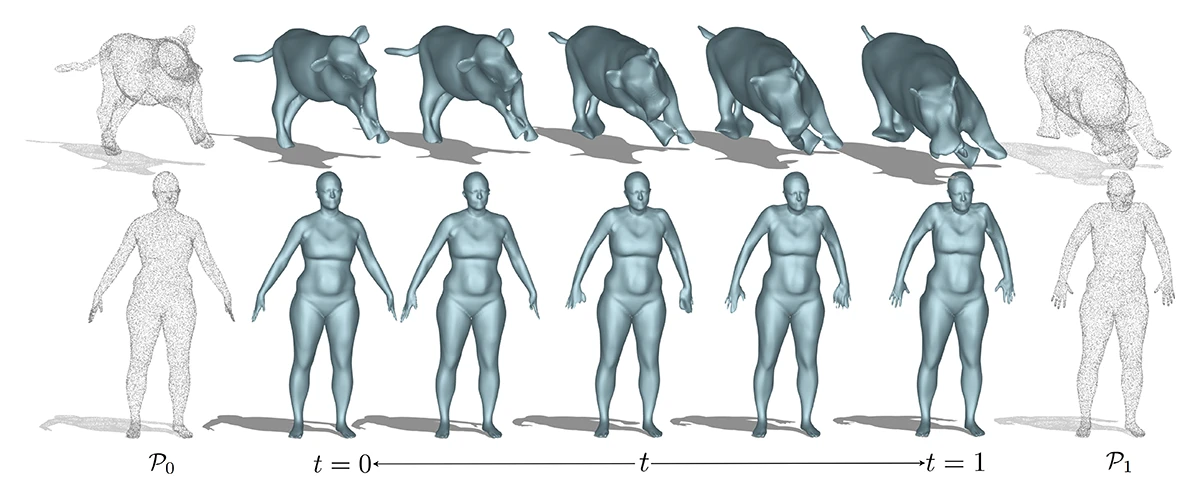

©Sang et al.

Figure 1: Visualization of smooth, physically plausible 3D shape deformation between two point clouds. Given only a source point cloud P0 and a target point cloud P1, the method predicts a continuous sequence of intermediate shapes over time t, producing natural transitions between rigid and non-rigid configurations.

«We adopt neural implicit surface representations and tackle the challenging problem of directly deforming the implicit field while recovering physically plausible intermediate shapes – without any rendering or intermediate ground truth supervision.»

Lu Sang

MCML Junior Member

Modeling Deformations: From Mesh Constraints to Implicit Velocity Fields

3D shapes can be represented in two main ways: as meshes, with fixed points and connections, or as implicit fields, continuous mathematical representations like signed distance functions. Meshes make it easy to manipulate shapes locally, but they struggle with complex changes like splitting, merging, or missing parts. Implicit representations handle these topological changes naturally, but they lack explicit point positions and neighborhood information, making smooth, physically realistic deformations hard to achieve.
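To make the idea of an implicit field concrete, here is a minimal sketch of a signed distance function (SDF) for a sphere. The function name `sphere_sdf` and the unit sphere are illustrative choices, not taken from the paper, where the SDF is represented by a neural network rather than a closed-form formula.

```python
import math

def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return math.dist(point, center) - radius

inside = sphere_sdf((0.0, 0.0, 0.0))      # query at the sphere's center
on_surface = sphere_sdf((1.0, 0.0, 0.0))  # query exactly on the surface
outside = sphere_sdf((2.0, 0.0, 0.0))     # query one unit outside the surface
print(inside, on_surface, outside)        # -1.0 0.0 1.0
```

The surface is never stored as a list of points: it is simply the set of locations where the function returns zero, which is why topological changes come for free and explicit neighborhoods do not.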

To overcome this, the authors introduced a velocity network that predicts a continuous, divergence-free velocity field. Think of it like each point on the surface flowing smoothly, almost like particles in a fluid, guiding the shape from one pose to another while keeping the motion physically plausible. By combining implicit surfaces with these motion fields, they bridge the gap between flexibility and physical realism in 3D deformations.
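A small numerical sketch can show what "divergence-free" means in practice. It assumes a hand-written 2D toy field derived from a stream function, which is one standard way to obtain zero divergence by construction; this post does not say how the paper's velocity network achieves the property, so the construction and all names below are illustrative only.

```python
import math
import random

def velocity(x, y):
    """Toy 2D velocity derived from the stream function psi(x, y) = sin(x) * cos(y)."""
    vx = -math.sin(x) * math.sin(y)   #  d(psi)/dy
    vy = -math.cos(x) * math.cos(y)   # -d(psi)/dx
    return vx, vy

def divergence(x, y, h=1e-5):
    """Central finite-difference estimate of d(vx)/dx + d(vy)/dy."""
    dvx_dx = (velocity(x + h, y)[0] - velocity(x - h, y)[0]) / (2 * h)
    dvy_dy = (velocity(x, y + h)[1] - velocity(x, y - h)[1]) / (2 * h)
    return dvx_dx + dvy_dy

rng = random.Random(0)  # fixed seed so the sample points are reproducible
samples = [(rng.uniform(-3, 3), rng.uniform(-3, 3)) for _ in range(1000)]
max_divergence = max(abs(divergence(x, y)) for x, y in samples)
print(f"largest |divergence| over {len(samples)} samples: {max_divergence:.2e}")
```

A field with zero divergence neither compresses nor expands the material it carries, which is the intuition behind the fluid analogy above.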

How Does It Work?

The method works by letting two networks “team up” to transform one 3D shape into another:

- Velocity Network (Velocity-Net) predicts how every point on the shape should move over time — like particles flowing in a gentle stream. The network ensures that the object keeps its overall volume while moving.

- Implicit Network (Implicit-Net) describes the surface itself as a continuous mathematical field, capturing the shape’s geometry without relying on fixed points or meshes.

Together, these networks learn physically plausible deformations directly from two point clouds. The process is end-to-end differentiable, fully unsupervised, and doesn’t rely on intermediate shapes or pre-trained models.
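How a velocity field and an implicit surface fit together can be sketched with analytic stand-ins for the two networks. The example below assumes a rigid rotation as the velocity field and a small circle as the initial SDF; both are toy choices made for illustration, and nothing here is trained or taken from the paper's implementation.

```python
import math

def velocity(p):
    """Stand-in for the velocity network: rigid rotation about the origin (zero divergence)."""
    x, y = p
    return (-y, x)

def advect(p, t_end, steps=200):
    """Move a point along the velocity field using classical Runge-Kutta (RK4) steps."""
    dt = t_end / steps
    x, y = p
    for _ in range(steps):
        k1 = velocity((x, y))
        k2 = velocity((x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1]))
        k3 = velocity((x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1]))
        k4 = velocity((x + dt * k3[0], y + dt * k3[1]))
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return (x, y)

def initial_sdf(p, center=(1.0, 0.0), radius=0.25):
    """Stand-in for the implicit network at t = 0: SDF of a small circle."""
    return math.dist(p, center) - radius

def sdf_at_time(p, t):
    """Trace the query point back to t = 0 (exact inverse rotation), then evaluate the initial SDF."""
    x, y = p
    c, s = math.cos(-t), math.sin(-t)
    return initial_sdf((c * x - s * y, s * x + c * y))

t = math.pi / 2  # a quarter turn
angles = [2 * math.pi * k / 64 for k in range(64)]
surface_t0 = [(1.0 + 0.25 * math.cos(a), 0.25 * math.sin(a)) for a in angles]
surface_t = [advect(p, t) for p in surface_t0]

# Points carried by the velocity field should land on the zero level set at time t.
residual = max(abs(sdf_at_time(p, t)) for p in surface_t)
print(f"largest |SDF| on the advected surface: {residual:.2e}")
```

The check at the end mirrors the consistency the two networks need: wherever the velocity field carries the surface, the implicit field at that time should be zero.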

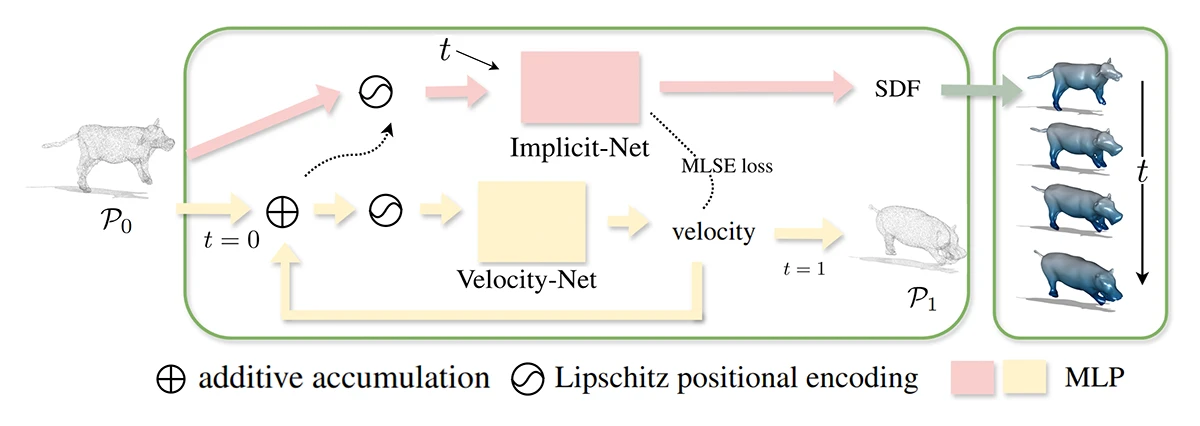

©Sang et al.

Figure 2: Overview of the proposed framework for implicit neural surface deformation. Given two point clouds P0 and P1, the method jointly trains two neural networks: a Velocity-Net that predicts a smooth, divergence-free velocity field guiding the deformation, and an Implicit-Net that represents the signed distance function (SDF). The deformation is modeled through the Modified Level-Set Equation (MLSE), ensuring physically plausible and stable surface evolution over time.
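For orientation, the classical level-set equation that couples an implicit function to a velocity field can be written as below. The symbols are notation chosen for this sketch: f is the time-dependent implicit function and v the velocity field. The specific modification that turns this into the MLSE is described in the paper and is not reproduced here.

```latex
\frac{\partial f}{\partial t}(\mathbf{x}, t)
  + \mathbf{v}(\mathbf{x}, t) \cdot \nabla f(\mathbf{x}, t) = 0,
\qquad
\nabla \cdot \mathbf{v}(\mathbf{x}, t) = 0
```

The first equation says that the values of f are carried along by the flow, so the zero level set (the surface) moves with the velocity; the second is the divergence-free condition on that velocity.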

Results and Highlights

The method was tested on benchmark datasets covering both rigid and flexible transformations, including FAUST, SMAL, SHREC16, and DeformingThings4D. It consistently outperformed previous approaches, producing smoother, more physically consistent intermediate shapes while being faster and more efficient.

«Our method directly deforms the implicit field using an explicitly estimated velocity field, enabling us to estimate deformations directly from the point cloud input.»

Lu Sang

MCML Junior Member

Open Challenges

Large deformations remain challenging due to the lack of explicit neighborhood information. In these cases, the deformations may be less physically accurate.

Why It Matters

The goal is to extend this approach to continuous, real-time shape evolution. By combining physics-aware modeling with data-efficient learning, this method opens the door to more realistic and flexible 3D transformations. Applications range from animation and robotics to medical simulation, enabling advanced modeling without dense meshes or detailed supervision.

Want to Explore Further?

Presented at ICLR 2025, a top-tier machine learning conference, the full paper explores the method in more depth and lays the groundwork for future advances in 3D modeling and deformation.

Implicit Neural Surface Deformation with Explicit Velocity Fields.

ICLR 2025 - 13th International Conference on Learning Representations. Singapore, Apr 24-28, 2025.
