26.10.2025

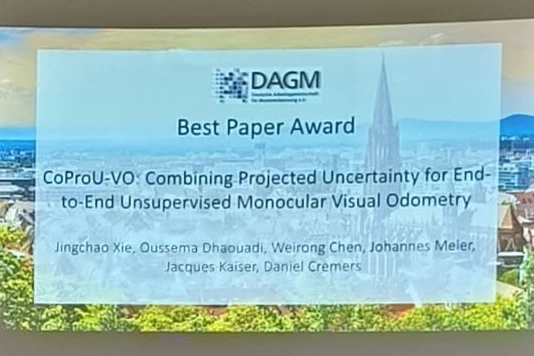

CoProU-VO Wins GCPR 2025 Best Paper Award

Award-Winning Work by MCML Director Daniel Cremers and His Team for Advances in Unsupervised Visual Odometry

The paper “CoProU-VO: Combining Projected Uncertainty for End-to-End Unsupervised Monocular Visual Odometry” by MCML Director Daniel Cremers and Junior Members Weirong Chen and Johannes Meier, together with Jingchao Xie, Oussema Dhaouadi, and Jacques Kaiser, received the Best Paper Award at GCPR 2025.

Their work presents CoProU-VO, an end-to-end unsupervised visual odometry framework that propagates and combines uncertainty across temporal frames to improve robustness in dynamic scenes. Built on vision transformer backbones, it jointly learns depth, uncertainty, and camera poses, and achieves state-of-the-art performance on the KITTI and nuScenes datasets.

Congratulations to the team on this outstanding achievement!

Check out the full paper:

CoProU-VO: Combining Projected Uncertainty for End-to-End Unsupervised Monocular Visual Odometry.

GCPR 2025 - German Conference on Pattern Recognition. Freiburg, Germany, Oct 23-26, 2025. Best Paper Award.
