19.09.2025

MCML at MICCAI 2025: 39 Accepted Papers (25 Main and 14 Workshop)

28th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2025). Daejeon, Republic of Korea, 23.09.2025–27.09.2025

We are happy to announce that MCML researchers have contributed a total of 39 papers to MICCAI 2025: 25 main-track papers and 14 workshop papers. Congrats to our researchers!

Main Track (25 papers)

HieraSurg: Hierarchy-Aware Diffusion Model for Surgical Video Generation.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Surgical Video Synthesis has emerged as a promising research direction following the success of diffusion models in general-domain video generation. Although existing approaches achieve high-quality video generation, most are unconditional and fail to maintain consistency with surgical actions and phases, lacking the surgical understanding and fine-grained guidance necessary for factual simulation. We address these challenges by proposing HieraSurg, a hierarchy-aware surgical video generation framework consisting of two specialized diffusion models. Given a surgical phase and an initial frame, HieraSurg first predicts future coarse-grained semantic changes through a segmentation prediction model. The final video is then generated by a second-stage model that augments these temporal segmentation maps with fine-grained visual features, leading to effective texture rendering and integration of semantic information in the video space. Our approach leverages surgical information at multiple levels of abstraction, including surgical phase, action triplets, and panoptic segmentation maps. The experimental results on Cholecystectomy Surgical Video Generation demonstrate that the model significantly outperforms prior work both quantitatively and qualitatively, showing strong generalization capabilities and the ability to generate higher frame-rate videos. The model exhibits particularly fine-grained adherence when provided with existing segmentation maps, suggesting its potential for practical surgical applications.

MCML Authors

X-SiT: Inherently Interpretable Surface Vision Transformers for Dementia Diagnosis.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Interpretable models are crucial for supporting clinical decision-making, driving advances in their development and application for medical images. However, the nature of 3D volumetric data makes it inherently challenging to visualize and interpret intricate and complex structures like the cerebral cortex. Cortical surface renderings, on the other hand, provide a more accessible and understandable 3D representation of brain anatomy, facilitating visualization and interactive exploration. Motivated by this advantage and the widespread use of surface data for studying neurological disorders, we present the eXplainable Surface Vision Transformer (X-SiT). This is the first inherently interpretable neural network that offers human-understandable predictions based on interpretable cortical features. As part of X-SiT, we introduce a prototypical surface patch decoder for classifying surface patch embeddings, incorporating case-based reasoning with spatially corresponding cortical prototypes. The results demonstrate state-of-the-art performance in detecting Alzheimer’s disease and frontotemporal dementia while additionally providing informative prototypes that align with known disease patterns and reveal classification errors.

MCML Authors

Predicting Longitudinal Brain Development via Implicit Neural Representations.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Predicting individualized perinatal brain development is crucial for understanding personalized neurodevelopmental trajectories, but it remains challenging due to limited longitudinal data. While population-based atlases model generic trends, they fail to capture subject-specific growth patterns. In this work, we propose a novel approach leveraging Implicit Neural Representations (INRs) to predict individualized brain growth over multiple weeks. Our method learns from a limited dataset of fewer than 100 paired fetal and neonatal subjects, sampled from the developing Human Connectome Project. The trained model demonstrates accurate personalized future and past trajectory predictions from a single calibration scan. By incorporating conditional external factors such as birth age or birth weight, our model further allows the simulation of neurodevelopment under varying conditions. We evaluate our method against established perinatal brain atlases, demonstrating higher prediction accuracy and fidelity up to 20 weeks. Finally, we explore the method’s ability to reveal subject-specific cortical folding patterns under varying factors like birth weight, further advocating its potential for personalized neurodevelopmental analysis.

MCML Authors

CytoSAE: Interpretable Cell Embeddings for Hematology.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Sparse autoencoders (SAEs) emerged as a promising tool for mechanistic interpretability of transformer-based foundation models. Very recently, SAEs were also adopted for the visual domain, enabling the discovery of visual concepts and their patch-wise attribution to tokens in the transformer model. While a growing number of foundation models emerged for medical imaging, tools for explaining their inferences are still lacking. In this work, we show the applicability of SAEs for hematology. We propose CytoSAE, a sparse autoencoder which is trained on over 40,000 peripheral blood single-cell images. CytoSAE generalizes to diverse and out-of-domain datasets, including bone marrow cytology, where it identifies morphologically relevant concepts which we validated with medical experts. Furthermore, we demonstrate scenarios in which CytoSAE can generate patient-specific and disease-specific concepts, enabling the detection of pathognomonic cells and localized cellular abnormalities at the patch level. We quantified the effect of concepts on a patient-level AML subtype classification task and show that CytoSAE concepts reach performance comparable to the state-of-the-art, while offering explainability on the sub-cellular level.

MCML Authors

UltraRay: Introducing Full-Path Ray Tracing in Physics-Based Ultrasound Simulation.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Traditional ultrasound simulators solve the wave equation to model pressure distribution fields, achieving physical accuracy but requiring significant computational time and resources. Ray tracing approaches have been introduced to address this limitation, modeling wave propagation as rays interacting with boundaries and scatterers. However, existing models simplify ray propagation, generating echoes at interaction points without considering return paths to the sensor. This can result in undesired artifacts and necessitates careful scene tuning for plausible results. We propose UltraRay, a novel framework that models the full path of acoustic waves reflecting from tissue boundaries. We derive the equations for accurate reflection modeling across multiple interaction points and introduce a sampling strategy for an increased likelihood of a ray returning to the transducer. By incorporating a ray emission scheme for plane wave imaging and a standard signal processing pipeline for beamforming, we are able to simulate the ultrasound image formation process end-to-end. Built on a differentiable modular framework, UltraRay introduces an extendable foundation for differentiable ultrasound simulation based on full-path ray tracing. We demonstrate its advantages compared to the state-of-the-art ray tracing ultrasound simulation, shown both on a synthetic scene and a spine phantom.

MCML Authors

ICE-PoGO: Improving Dynamic Panoramic Reconstruction of 4D ICE Imaging through Pose Graph Optimization.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Intracardiac echocardiography (ICE) has the potential to play a crucial role in structural heart disease (SHD) interventions by providing high-quality imaging in real time, without many of the key drawbacks of established imaging modalities. However, ICE’s limited field-of-view (FoV) requires continuous readjustments of the catheter position to fully visualize the dynamic cardiac environment, which impairs spatial navigation and increases procedure time and complexity. Dynamic panoramic reconstruction can mitigate this limitation. However, state-of-the-art methods depend on precise catheter tracking, the accuracy of which is affected by the presence of noise and anatomical motion. While registration can correct these errors, existing approaches are computationally prohibitive for large imaging volumes due to repeated iterations over image data, further amplified by the added time dimension. To address these challenges, we present a novel method for truly dynamic panoramic reconstruction by leveraging the repetitive nature of cardiac motion under a cyclic environment assumption. To our knowledge, our method is the first to employ dynamic pose graph optimization (PGO) specifically designed for 4D ICE tracking. Our results demonstrate enhanced tracking accuracy and improved panoramic reconstruction quality, potentially providing real-time, dynamic anatomical guidance for clinicians. The improved alignment of overlapping ICE volumes and increased temporal tracking resolution represent a substantial advancement in 4D ICE imaging, enhancing navigation and decision-making during complex cardiac interventions.

MCML Authors

CAT-SG: A Large Dynamic Scene Graph Dataset for Fine-Grained Understanding of Cataract Surgery.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Understanding the intricate workflows of cataract surgery requires modeling complex interactions between surgical tools, anatomical structures, and procedural techniques. Existing datasets primarily address isolated aspects of surgical analysis, such as tool detection or phase segmentation, but lack comprehensive representations that capture the semantic relationships between entities over time. This paper introduces the Cataract Surgery Scene Graph (CAT-SG) dataset, the first to provide structured annotations of tool-tissue interactions, procedural variations, and temporal dependencies. By incorporating detailed semantic relations, CAT-SG offers a holistic view of surgical workflows, enabling more accurate recognition of surgical phases and techniques. Additionally, we present a novel scene graph generation model, CatSGG, which outperforms current methods in generating structured surgical representations. The CAT-SG dataset is designed to enhance AI-driven surgical training, real-time decision support, and workflow analysis, paving the way for more intelligent, context-aware systems in clinical practice.

MCML Authors

PRADA: Protecting and Detecting Dataset Abuse for Open-source Medical Dataset.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Open-source datasets play a crucial role in data-centric AI, particularly in the medical field, where data collection and access are often restricted. While these datasets are typically opened for research or educational purposes, their unauthorized use for model training remains a persistent ethical and legal concern. In this paper, we propose PRADA, a novel framework for detecting whether a Deep Neural Network (DNN) has been trained on a specific open-source dataset. The main idea of our method is to exploit the memorization ability of DNNs by designing a hidden signal, a carefully optimized signal that is imperceptible to humans yet covertly memorized by the models. Once the hidden signal is generated, it is embedded into a dataset to produce protected data, which is then released to the public. Any model trained on this protected data will inherently memorize the characteristics of the hidden signal. Then, by analyzing the response of the model to the hidden signal, we can identify whether the dataset was used during training. Furthermore, we propose the Exposure Frequency-Accuracy Correlation (EFAC) score to verify whether a model has been trained on protected data or not. It quantifies the correlation between the predefined exposure frequency of the hidden signal, set by the data provider, and the accuracy of models. Experiments demonstrate that our approach effectively detects whether the model is trained on a specific dataset or not. This work provides a new direction for protecting open-source datasets from misuse in medical AI research.
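To make the idea of a frequency-accuracy correlation concrete, here is a minimal sketch. The abstract does not give the exact definition of the EFAC score, so the use of the Pearson coefficient, the function name `pearson`, and all numbers below are assumptions made purely for illustration, not the authors' method or results.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    # Return 0.0 if either sequence is constant (correlation undefined).
    return cov / (std_x * std_y) if std_x and std_y else 0.0

# Hypothetical setup: each hidden signal is embedded with a different
# exposure frequency chosen by the data provider.
exposure_frequency = [1, 2, 4, 8]

# Made-up accuracies of two models on the corresponding hidden signals.
suspect_accuracy = [0.30, 0.50, 0.70, 0.95]   # rises with exposure
clean_accuracy = [0.10, 0.12, 0.09, 0.11]     # roughly flat

suspect_score = pearson(exposure_frequency, suspect_accuracy)
clean_score = pearson(exposure_frequency, clean_accuracy)
```

Under this assumption, a model that memorized the hidden signals would show accuracy rising with exposure frequency and hence a score near 1, while a model never trained on the protected data would show little correlation.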

MCML Authors

HyperSORT: Self-Organising Robust Training with hyper-networks.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Medical imaging datasets often contain heterogeneous biases ranging from erroneous labels to inconsistent labeling styles. Such biases can negatively impact the performance of deep segmentation networks. Yet, the identification and characterization of such biases is a particularly tedious and challenging task. In this paper, we introduce HyperSORT, a framework using a hyper-network predicting UNets’ parameters from latent vectors representing both the image and annotation variability. The hyper-network parameters and the latent vector collection corresponding to each data sample from the training set are jointly learned. Hence, instead of optimizing a single neural network to fit a dataset, HyperSORT learns a complex distribution of UNet parameters where low-density areas can capture noise-specific patterns while larger modes robustly segment organs in differentiated but meaningful manners. We validate our method on two public 3D abdominal CT datasets: first, a synthetically perturbed version of the AMOS dataset, and second, TotalSegmentator, a large-scale dataset containing real unknown biases and errors. Our experiments show that HyperSORT creates a structured mapping of the dataset allowing the identification of relevant systematic biases and erroneous samples. Latent space clusters yield UNet parameters performing the segmentation task in accordance with the underlying “learned” systematic bias.

MCML Authors

Temporal Neural Cellular Automata: Application to modeling of contrast enhancement in breast MRI.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Synthetic contrast enhancement offers fast image acquisition and eliminates the need for intravenous injection of contrast agent. This is particularly beneficial for breast imaging, where long acquisition times and high cost are significantly limiting the applicability of magnetic resonance imaging (MRI) as a widespread screening modality. Recent studies have demonstrated the feasibility of synthetic contrast generation. However, current state-of-the-art (SOTA) methods lack sufficient measures for consistent temporal evolution. Neural cellular automata (NCA) offer a robust and lightweight architecture to model evolving patterns between neighboring cells or pixels. In this work we introduce TeNCA (Temporal Neural Cellular Automata), which extends and further refines NCAs to effectively model temporally sparse, non-uniformly sampled imaging data. To achieve this, we advance the training strategy by enabling adaptive loss computation and define the iterative nature of the method to resemble a physical progression in time. This conditions the model to learn a physiologically plausible evolution of contrast enhancement. We rigorously train and test TeNCA on a diverse breast MRI dataset and demonstrate its effectiveness, surpassing the performance of existing methods in generation of images that align with ground truth post-contrast sequences.

MCML Authors

Semantic Scene Graph for Ultrasound Image Explanation and Scanning Guidance.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Understanding medical ultrasound imaging remains a long-standing challenge due to significant visual variability caused by differences in imaging and acquisition parameters. Recent advancements in large language models (LLMs) have been used to automatically generate terminology-rich summaries oriented toward clinicians with sufficient physiological knowledge. Nevertheless, the increasing demand for improved ultrasound interpretability and basic scanning guidance among non-expert users, e.g., in point-of-care settings, has not yet been explored. In this study, we first introduce the scene graph (SG) for ultrasound images to explain image content to ordinary users and provide guidance for ultrasound scanning. The ultrasound SG is first computed using a transformer-based one-stage method, eliminating the need for explicit object detection. To generate a graspable image explanation for ordinary users, the user query is then used to further refine the abstract SG representation through LLMs. Additionally, the predicted SG is explored for its potential in guiding ultrasound scanning toward missing anatomies within the current imaging view, assisting ordinary users in achieving more standardized and complete anatomical exploration. The effectiveness of this SG-based image explanation and scanning guidance has been validated on images from the left and right neck regions, including the carotid and thyroid, across five volunteers. The results demonstrate the potential of the method to maximally democratize ultrasound by enhancing its interpretability and usability for ordinary users.

MCML Authors

HASD: Hierarchical Adaption for pathology Slide-level Domain-shift.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Domain shift is a critical problem for pathology AI as pathology data is heavily influenced by center-specific conditions. Current pathology domain adaptation methods focus on image patches rather than whole-slide images (WSIs), thus failing to capture global WSI features required in typical clinical scenarios. In this work, we address the challenges of slide-level domain shift by proposing a Hierarchical Adaptation framework for Slide-level Domain-shift (HASD). HASD achieves multi-scale feature consistency and computationally efficient slide-level domain adaptation through two key components: (1) a hierarchical adaptation framework that integrates a Domain-level Alignment Solver for feature alignment, a Slide-level Geometric Invariance Regularization to preserve the morphological structure, and a Patch-level Attention Consistency Regularization to maintain local critical diagnostic cues; and (2) a prototype selection mechanism that reduces computational overhead. We validate our method on two slide-level tasks across five datasets, achieving a 4.1% AUROC improvement in a Breast Cancer HER2 Grading cohort and a 3.9% C-index gain in a UCEC survival prediction cohort. Our method provides a practical and reliable slide-level domain adaptation solution for pathology institutions, minimizing both computational and annotation costs.

MCML Authors

MM-DINOv2: Adapting Foundation Models for Multi-Modal Medical Image Analysis.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Vision foundation models like DINOv2 demonstrate remarkable potential in medical imaging despite their origin in natural image domains. However, their design inherently works best for uni-modal image analysis, limiting their effectiveness for multi-modal imaging tasks that are common in many medical fields, such as neurology and oncology. While supervised models perform well in this setting, they fail to leverage unlabeled datasets and struggle with missing modalities, a frequent challenge in clinical settings. To bridge these gaps, we introduce MM-DINOv2, a novel and efficient framework that adapts the pre-trained vision foundation model DINOv2 for multi-modal medical imaging. Our approach incorporates multi-modal patch embeddings, enabling vision foundation models to effectively process multi-modal imaging data. To address missing modalities, we employ full-modality masking, which encourages the model to learn robust cross-modality relationships. Furthermore, we leverage semi-supervised learning to harness large unlabeled datasets, enhancing both the accuracy and reliability of medical predictions. Applied to glioma subtype classification from multi-sequence brain MRI, our method achieves a Matthews Correlation Coefficient (MCC) of 0.6 on an external test set, surpassing state-of-the-art supervised approaches by +11.1%. Our work establishes a scalable and robust solution for multi-modal medical imaging tasks, leveraging powerful vision foundation models pre-trained on natural images while addressing real-world clinical challenges such as missing data and limited annotations.
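For readers unfamiliar with the reported metric, the following is a minimal sketch of the Matthews Correlation Coefficient for the binary case. The glioma subtype task may well be multi-class, in which case a generalized form of MCC applies; the function name `binary_mcc` and the confusion-matrix counts are made up for illustration and are not results from the paper.

```python
import math

def binary_mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient from binary confusion-matrix counts."""
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Common convention: report 0.0 when a row or column of the matrix is empty.
    return numerator / denominator if denominator else 0.0

# Made-up counts, chosen only to show the arithmetic:
# (40*40 - 10*10) / sqrt(50*50*50*50) = 1500 / 2500
score = binary_mcc(tp=40, tn=40, fp=10, fn=10)
```

MCC ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction), and unlike plain accuracy it stays informative when classes are imbalanced.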

MCML Authors

Contrastive Anatomy-Contrast Disentanglement: A Domain-General MRI Harmonization Method.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Magnetic resonance imaging (MRI) is an invaluable tool for clinical and research applications. Yet, variations in scanners and acquisition parameters cause inconsistencies in image contrast, hindering data comparability and reproducibility across datasets and clinical studies. Existing scanner harmonization methods, designed to address this challenge, face limitations, such as requiring traveling subjects or struggling to generalize to unseen domains. We propose a novel approach using a conditioned diffusion autoencoder with a contrastive loss and domain-agnostic contrast augmentation to harmonize MR images across scanners while preserving subject-specific anatomy. Our method enables brain MRI synthesis from a single reference image. It outperforms baseline techniques, achieving a +7% PSNR improvement on a traveling subjects dataset and a +18% improvement on age regression in unseen domains. Our model provides robust, effective harmonization of brain MRIs to target scanners without requiring fine-tuning. This advancement promises to enhance comparability, reproducibility, and generalizability in multi-site and longitudinal clinical studies, ultimately contributing to improved healthcare outcomes.

MCML Authors

Global and Local Contrastive Learning for Joint Representations from Cardiac MRI and ECG.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

An electrocardiogram (ECG) is a widely used, cost-effective tool for detecting electrical abnormalities in the heart. However, it cannot directly measure functional parameters, such as ventricular volumes and ejection fraction, which are crucial for assessing cardiac function. Cardiac magnetic resonance (CMR) is the gold standard for these measurements, providing detailed structural and functional insights, but is expensive and less accessible. To bridge this gap, we propose PTACL (Patient and Temporal Alignment Contrastive Learning), a multimodal contrastive learning framework that enhances ECG representations by integrating spatio-temporal information from CMR. PTACL uses global patient-level contrastive loss and local temporal-level contrastive loss. The global loss aligns patient-level representations by pulling ECG and CMR embeddings from the same patient closer together, while pushing apart embeddings from different patients. Local loss enforces fine-grained temporal alignment within each patient by contrasting encoded ECG segments with corresponding encoded CMR frames. This approach enriches ECG representations with diagnostic information beyond electrical activity and transfers more insights between modalities than global alignment alone, all without introducing new learnable weights. We evaluate PTACL on paired ECG-CMR data from 27,951 subjects in the UK Biobank. Compared to baseline approaches, PTACL achieves better performance in two clinically relevant tasks: (1) retrieving patients with similar cardiac phenotypes and (2) predicting CMR-derived cardiac function parameters, such as ventricular volumes and ejection fraction. Our results highlight the potential of PTACL to enhance non-invasive cardiac diagnostics using ECG.
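The global patient-level alignment described above can be sketched as a symmetric contrastive objective over a batch of paired embeddings. The abstract does not state the exact loss form, so the InfoNCE-style formulation, the temperature value, and all function names below are assumptions for illustration rather than the authors' implementation.

```python
import math

def _normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def _diagonal_cross_entropy(sim):
    """Mean over rows i of -log softmax(sim[i])[i] (matching pair on the diagonal)."""
    total = 0.0
    for i, row in enumerate(sim):
        peak = max(row)  # subtract the max for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in row))
        total += log_sum_exp - row[i]
    return total / len(sim)

def global_contrastive_loss(ecg_batch, cmr_batch, temperature=0.1):
    """Symmetric InfoNCE-style loss; row i of each batch belongs to patient i."""
    ecg = [_normalize(v) for v in ecg_batch]
    cmr = [_normalize(v) for v in cmr_batch]
    sim = [[sum(a * b for a, b in zip(e, c)) / temperature for c in cmr]
           for e in ecg]
    sim_t = [list(col) for col in zip(*sim)]  # CMR-to-ECG direction
    return 0.5 * (_diagonal_cross_entropy(sim) + _diagonal_cross_entropy(sim_t))

# Toy embeddings: aligned pairs give a low loss, swapped pairs a high one.
aligned = global_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                  [[1.0, 0.0], [0.0, 1.0]])
swapped = global_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                  [[0.0, 1.0], [1.0, 0.0]])
```

A local temporal loss could reuse the same function within one patient, with rows being ECG segments and the corresponding CMR frames instead of whole-patient embeddings; that pairing is likewise an assumption here.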

MCML Authors

Intelligent Virtual Sonographer (IVS): Enhancing Physician-Robot-Patient Communication.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

The advancement and maturity of large language models (LLMs) and robotics have unlocked vast potential for human-computer interaction, particularly in the field of robotic ultrasound. While existing research primarily focuses on either patient-robot or physician-robot interaction, the role of an intelligent virtual sonographer (IVS) bridging physician-robot-patient communication remains underexplored. This work introduces a conversational virtual agent in Extended Reality (XR) that facilitates real-time interaction between physicians, a robotic ultrasound system (RUS), and patients. The IVS agent communicates with physicians in a professional manner while offering empathetic explanations and reassurance to patients. Furthermore, it actively controls the RUS by executing physician commands and transparently relays these actions to the patient. By integrating LLM-powered dialogue with speech-to-text, text-to-speech, and robotic control, our system enhances the efficiency, clarity, and accessibility of robotic ultrasound acquisition. This work constitutes a first step toward understanding how IVS can bridge communication gaps in physician-robot-patient interaction, providing more control and therefore trust in physician-robot interaction while improving patient experience and acceptance of robotic ultrasound.

MCML Authors

A Holistic Time-Aware Classification Model for Multimodal Longitudinal Patient Data.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Current prognostic and diagnostic AI models for healthcare often limit informational input capacity by being time-agnostic and focusing on single modalities, therefore lacking the holistic perspective clinicians rely on. To address this, we introduce a Time-Aware Multi Modal Transformer Encoder (TAMME) for longitudinal medical data. Unlike most state-of-the-art models, TAMME integrates longitudinal imaging, textual, numerical, and categorical data together with temporal information. Each element is represented as the sum of embeddings for high-level categorical type, further specification of this type, time-related data, and value. This composition overcomes limitations of a closed input vocabulary, enabling generalization to novel data. Additionally, with temporal context including the delta to the preceding element, we eliminate the requirement for evenly sampled input sequences. For long-term EHRs, the model employs a novel summarization mechanism that processes sequences piecewise and prepends recent data with history representations in end-to-end training. This enables balancing recent information with historical signals via self-attention. We demonstrate TAMME’s capabilities using data from 431k+ hospital stays, 73k ICU stays, and 425k Emergency Department (ED) visits from the MIMIC dataset for clinical classification tasks: prediction of triage acuity, length of stay, and readmission. We show superior performance over state-of-the-art approaches especially gained from long-term data. Overall, our approach provides versatile processing of entire patient trajectories as a whole to enhance predictive performance on clinical tasks.
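The compositional input representation described above (each element as a sum of type, subtype, time, and value embeddings, with the time delta to the preceding element) can be illustrated with a toy sketch. The lookup tables, the embedding width, the sinusoidal scalar encoding, and every name below are hypothetical choices for illustration; the abstract does not specify how TAMME computes the individual embeddings.

```python
import math

DIM = 4  # toy embedding width (assumption)

# Hypothetical lookup tables; a real model would learn these.
TYPE_EMB = {"lab": [0.1, 0.0, 0.0, 0.0], "note": [0.0, 0.1, 0.0, 0.0]}
SUBTYPE_EMB = {"creatinine": [0.0, 0.0, 0.2, 0.0],
               "discharge": [0.0, 0.0, 0.0, 0.2]}

def scalar_encoding(x, dim=DIM):
    """Fixed sinusoidal encoding of a scalar (stand-in for a learned encoder)."""
    out = []
    for i in range(dim // 2):
        freq = 1.0 / (100.0 ** (2 * i / dim))
        out += [math.sin(x * freq), math.cos(x * freq)]
    return out

def time_deltas(timestamps):
    """Delta to the preceding element; the first element gets 0."""
    if not timestamps:
        return []
    return [0.0] + [b - a for a, b in zip(timestamps, timestamps[1:])]

def embed_element(kind, subtype, delta, value):
    """Sum the four component embeddings element-wise."""
    parts = [TYPE_EMB[kind], SUBTYPE_EMB[subtype],
             scalar_encoding(delta), scalar_encoding(value)]
    return [sum(column) for column in zip(*parts)]

# Unevenly spaced measurements (hours since admission, made-up values).
deltas = time_deltas([0.0, 2.0, 7.0])
first = embed_element("lab", "creatinine", delta=deltas[0], value=0.0)
```

Because the time gap enters each element's embedding explicitly, the sequence does not need to be resampled onto a regular grid, and a new subtype only requires a new table entry rather than a new input vocabulary.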

MCML Authors

Influence of Classification Task and Distribution Shift Type on OOD Detection in Fetal Ultrasound.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Reliable out-of-distribution (OOD) detection is important for safe deployment of deep learning models in fetal ultrasound amidst heterogeneous image characteristics and clinical settings. OOD detection relies on estimating a classification model’s uncertainty, which should increase for OOD samples. While existing research has largely focused on uncertainty quantification methods, this work investigates the impact of the classification task itself. Through experiments with eight uncertainty quantification methods across four classification tasks on the same image dataset, we demonstrate that OOD detection performance significantly varies with the task, and that the best task depends on the defined ID-OOD criteria; specifically, whether the OOD sample is due to: i) an image characteristic shift or ii) an anatomical feature shift. Furthermore, we reveal that superior OOD detection does not guarantee optimal abstained prediction, underscoring the necessity to align task selection and uncertainty strategies with the specific downstream application in medical image analysis.

MCML Authors

UltrON: Ultrasound Occupancy Networks.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

In free-hand ultrasound imaging, sonographers rely on expertise to mentally integrate partial 2D views into 3D anatomical shapes. Shape reconstruction can assist clinicians in this process. Central to this task is the choice of shape representation, as it determines how accurately and efficiently the structure can be visualized, analyzed, and interpreted. Implicit representations, such as the signed distance function (SDF) and the occupancy function, offer a powerful alternative to traditional voxel- or mesh-based methods by modeling continuous, smooth surfaces with compact storage, avoiding explicit discretization. Recent studies demonstrate that SDFs can be effectively optimized using annotations derived from segmented B-mode ultrasound images. Yet, these approaches hinge on precise annotations, overlooking the rich acoustic information embedded in B-mode intensity. Moreover, implicit representation approaches struggle with ultrasound’s view-dependent nature and acoustic shadowing artifacts, which impair reconstruction. To address the problems resulting from occlusions and annotation dependency, we propose an occupancy-based representation and introduce UltrON, which leverages acoustic features to improve geometric consistency in a weakly supervised optimization regime. We show that these features can be obtained from B-mode images without additional annotation cost. Moreover, we propose a novel loss function that compensates for view-dependency in the B-mode images and facilitates occupancy optimization from multiview ultrasound. By incorporating acoustic properties, UltrON generalizes to shapes of the same anatomy. We show that UltrON mitigates the limitations of occlusions and sparse labeling and paves the way for more accurate 3D reconstruction.

MCML Authors

NeRF-based CBCT Reconstruction needs Normalization and Initialization.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Cone Beam Computed Tomography (CBCT) is widely used in medical imaging. However, the limited number and intensity of X-ray projections make reconstruction an ill-posed problem with severe artifacts. NeRF-based methods have achieved great success in this task. However, they suffer from a local-global training mismatch between their two key components: the hash encoder and the neural network. Specifically, in each training step, only a subset of the hash encoder’s parameters is used (local sparse), whereas all parameters in the neural network participate (global dense). Consequently, hash features generated in each step are highly misaligned, as they come from different subsets of the hash encoder. These misalignments from different training steps are then fed into the neural network, causing repeated inconsistent global updates in training, which leads to unstable training, slower convergence, and degraded reconstruction quality. Aiming to alleviate the impact of this local-global optimization mismatch, we introduce a Normalized Hash Encoder, which enhances feature consistency and mitigates the mismatch. Additionally, we propose a Mapping Consistency Initialization (MCI) strategy that initializes the neural network before training by leveraging the global mapping property from a well-trained model. The initialized neural network exhibits improved stability during early training, enabling faster convergence and enhanced reconstruction performance. Our method is simple yet effective, requiring only a few lines of code while substantially improving training efficiency on 128 CT cases collected from 4 different datasets, covering 7 distinct anatomical regions.

MCML Authors

DeepAf: One-Shot Spatiospectral Auto-Focus Model for Digital Pathology.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

While Whole Slide Imaging (WSI) scanners remain the gold standard for digitizing pathology samples, their high cost limits accessibility in many healthcare settings. Other low-cost solutions also face critical limitations: automated microscopes struggle with consistent focus across varying tissue morphology, traditional auto-focus methods require time-consuming focal stacks, and existing deep-learning approaches either need multiple input images or lack generalization capability across tissue types and staining protocols. We introduce a novel automated microscopic system powered by DeepAf, an auto-focus framework that uniquely combines spatial and spectral features through a hybrid architecture for single-shot focus prediction. The proposed network automatically regresses the distance to the optimal focal point using the extracted spatiospectral features and adjusts the control parameters for optimal image outcomes. Our system transforms conventional microscopes into efficient slide scanners, reducing focusing time by 80% compared to stack-based methods while achieving focus accuracy of 0.18 μm on same-lab samples, matching the performance of dual-image methods (0.19 μm) with half the input requirements. DeepAf demonstrates robust cross-lab generalization with only 0.72% false focus predictions and 90% of predictions within the depth of field. Through an extensive clinical study of 536 brain tissue samples, our system achieves 0.90 AUC in cancer classification at 4× magnification, a significant achievement at lower magnification than typical 20× WSI scans. This results in a comprehensive hardware-software design enabling accessible, real-time digital pathology in resource-constrained settings while maintaining diagnostic accuracy.

MCML Authors

Temporal Differential Fields for 4D Motion Modeling via Image-to-Video Synthesis.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Temporal modeling on regular respiration-induced motions is crucial to image-guided clinical applications. Existing methods cannot simulate temporal motions unless high-dose imaging scans including starting and ending frames exist simultaneously. However, in the preoperative data acquisition stage, the slight movement of patients may result in dynamic backgrounds between the first and last frames in a respiratory period. This additional deviation can hardly be removed by image registration, thus affecting the temporal modeling. To address that limitation, we pioneeringly simulate the regular motion process via the image-to-video (I2V) synthesis framework, which animates with the first frame to forecast future frames of a given length. Besides, to promote the temporal consistency of animated videos, we devise the Temporal Differential Diffusion Model to generate temporal differential fields, which measure the relative differential representations between adjacent frames. The prompt attention layer is devised for fine-grained differential fields, and the field augmented layer is adopted to better interact these fields with the I2V framework, promoting more accurate temporal variation of synthesized videos. Extensive results on ACDC cardiac and 4D Lung datasets reveal that our approach simulates 4D videos along the intrinsic motion trajectory, rivaling other competitive methods on perceptual similarity and temporal consistency.

MCML Authors

Recognizing Surgical Phases Anywhere: Few-Shot Test-time Adaptation and Task-graph Guided Refinement.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI URL

Abstract

The complexity and diversity of surgical workflows, driven by heterogeneous operating room settings, institutional protocols, and anatomical variability, present a significant challenge in developing generalizable models for cross-institutional and cross-procedural surgical understanding. While recent surgical foundation models pretrained on large-scale vision-language data offer promising transferability, their zero-shot performance remains constrained by domain shifts, limiting their utility in unseen surgical environments. To address this, we introduce Surgical Phase Anywhere (SPA), a lightweight framework for versatile surgical workflow understanding that adapts foundation models to institutional settings with minimal annotation. SPA leverages few-shot spatial adaptation to align multi-modal embeddings with institution-specific surgical scenes and phases. It also ensures temporal consistency through diffusion modeling, which encodes task-graph priors derived from institutional procedure protocols. Finally, SPA employs dynamic test-time adaptation, exploiting the mutual agreement between multi-modal phase prediction streams to adapt the model to a given test video in a self-supervised manner, enhancing the reliability under test-time distribution shifts. SPA is a lightweight adaptation framework, allowing hospitals to rapidly customize phase recognition models by defining phases in natural language text, annotating a few images with the phase labels, and providing a task graph defining phase transitions. The experimental results show that the SPA framework achieves state-of-the-art performance in few-shot surgical phase recognition across multiple institutions and procedures, even outperforming full-shot models with 32-shot labeled data.

Semantic-Aware Chest X-ray Report Generation with Domain-Specific Lexicon and Diversity-Controlled Retrieval.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Image-to-text radiology report generation aims to produce comprehensive diagnostic reports by leveraging both X-ray images and historical textual data. Existing retrieval-based methods focus on maximizing similarity scores, leading to redundant content and limited diversity in generated reports. Additionally, they lack sensitivity to medical domain-specific information, failing to emphasize critical anatomical structures and disease characteristics essential for accurate diagnosis. To address these limitations, we propose a novel retrieval-augmented framework that integrates exemplar radiology reports with X-ray images to enhance report generation. First, we introduce a diversity-controlled retrieval strategy to improve information diversity and reduce redundancy, ensuring broader clinical knowledge coverage. Second, we develop a comprehensive medical lexicon covering chest anatomy, diseases, radiological descriptors, treatments, and related concepts. This lexicon is integrated into a weighted cross-entropy loss function to improve the model’s sensitivity to critical medical terms. Third, we introduce a sentence-level semantic loss to enhance clinical semantic accuracy. Evaluated on the MIMIC-CXR dataset, our method achieves superior performance on clinical consistency metrics and competitive results on linguistic quality metrics, demonstrating its effectiveness in enhancing report accuracy and clinical relevance.

MCML Authors

UltraAD: Fine-Grained Ultrasound Anomaly Classification via Few-Shot CLIP Adaptation.

MICCAI 2025 - 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Precise anomaly detection in medical images is critical for clinical decision-making. While recent unsupervised or semi-supervised anomaly detection methods trained on large-scale normal data show promising results, they lack fine-grained differentiation, such as benign vs. malignant tumors. Additionally, ultrasound (US) imaging is highly sensitive to devices and acquisition parameter variations, creating significant domain gaps in the resulting US images. To address these challenges, we propose UltraAD, a vision-language model (VLM)-based approach that leverages few-shot US examples for generalized anomaly localization and fine-grained classification. To enhance localization performance, the image-level token of query visual prototypes is first fused with learnable text embeddings. This image-informed prompt feature is then further integrated with patch-level tokens, refining local representations for improved accuracy. For fine-grained classification, a memory bank is constructed from few-shot image samples and corresponding text descriptions that capture anatomical and abnormality-specific features. During training, the stored text embeddings remain frozen, while image features are adapted to better align with medical data. UltraAD has been extensively evaluated on three breast US datasets, outperforming state-of-the-art methods in both lesion localization and fine-grained medical classification. The code will be released upon acceptance.

Workshops (14 papers)

Reconstruct or Generate: Exploring the Spectrum of Generative Modeling for Cardiac MRI.

DGM4 @MICCAI 2025 - 5th Deep Generative Models Workshop at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

In medical imaging, generative models are increasingly relied upon for two distinct but equally critical tasks: reconstruction, where the goal is to restore medical imaging (usually inverse problems like inpainting or superresolution), and generation, where synthetic data is created to augment datasets or carry out counterfactual analysis. Despite shared architecture and learning frameworks, they prioritize different goals: generation seeks high perceptual quality and diversity, while reconstruction focuses on data fidelity and faithfulness. In this work, we introduce a ‘generative model zoo’ and systematically analyze how modern latent diffusion models and autoregressive models navigate the reconstruction-generation spectrum. We benchmark a suite of generative models across representative cardiac medical imaging tasks, focusing on image inpainting with varying masking ratios and sampling strategies, as well as unconditional image generation. Our findings show that diffusion models offer superior perceptual quality for unconditional generation but tend to hallucinate as masking ratios increase, whereas autoregressive models maintain stable perceptual performance across masking levels, albeit with generally lower fidelity.

MCML Authors

Physics-Informed Joint Multi-TE Super-Resolution with Implicit Neural Representation for Robust Fetal T2 Mapping.

PIPPI @MICCAI 2025 - 10th Workshop in Perinatal, Preterm and Paediatric Image Analysis at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

T2 mapping in fetal brain MRI has the potential to improve characterization of the developing brain, especially at mid-field (0.55T), where T2 decay is slower. However, this is challenging as fetal MRI acquisition relies on multiple motion-corrupted stacks of thick slices, requiring slice-to-volume reconstruction (SVR) to estimate a high-resolution (HR) 3D volume. Currently, T2 mapping involves repeated acquisitions of these stacks at each echo time (TE), leading to long scan times and high sensitivity to motion. We tackle this challenge with a method that jointly reconstructs data across TEs, addressing severe motion. Our approach combines implicit neural representations with a physics-informed regularization that models T2 decay, enabling information sharing across TEs while preserving anatomical and quantitative T2 fidelity. We demonstrate state-of-the-art performance on simulated fetal brain and in vivo adult datasets with fetal-like motion. We also present the first in vivo fetal T2 mapping results at 0.55T. Our study shows potential for reducing the number of stacks per TE in T2 mapping by leveraging anatomical redundancy.
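For readers unfamiliar with T2 mapping, the decay that such a physics-informed regularizer typically models can be written as below. This is the standard mono-exponential signal model, given here only as background; whether the paper uses exactly this form is an assumption, and the symbols S_0 (signal at zero echo time) and r (voxel position) are introduced for illustration.

```latex
% Standard mono-exponential T2 decay (assumed form, not taken from the paper):
% S_0(r) is the signal at TE = 0 and T_2(r) is the voxel-wise relaxation time.
S(\mathbf{r}, TE) = S_0(\mathbf{r}) \, \exp\!\left(-\frac{TE}{T_2(\mathbf{r})}\right)
```

Under this model, volumes acquired at different echo times share the same S_0 and T_2 maps, which is one way information can be shared across TEs.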

MCML Authors

US-X Complete: A Multi-Modal Approach to Anatomical 3D Shape Recovery.

ShapeMI @MICCAI 2025 - Workshop on Shape in Medical Imaging at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published.

Abstract

Ultrasound offers a radiation-free, cost-effective solution for real-time visualization of spinal landmarks, paraspinal soft tissues and neurovascular structures, making it valuable for intraoperative guidance during spinal procedures. However, ultrasound suffers from inherent limitations in visualizing complete vertebral anatomy, in particular vertebral bodies, due to acoustic shadowing effects caused by bone. In this work, we present a novel multi-modal deep learning method for completing occluded anatomical structures in 3D ultrasound by leveraging complementary information from a single X-ray image. To enable training, we generate paired training data consisting of: (1) 2D lateral vertebral views that simulate X-ray scans, and (2) 3D partial vertebrae representations that mimic the limited visibility and occlusions encountered during ultrasound spine imaging. Our method integrates morphological information from both imaging modalities and demonstrates significant improvements in vertebral reconstruction (p < 0.001) compared to the state of the art in 3D ultrasound vertebral completion. We perform phantom studies as an initial step to future clinical translation, and achieve a more accurate, complete volumetric lumbar spine visualization overlaid on the ultrasound scan without the need for registration with preoperative modalities such as computed tomography. This demonstrates that integrating a single X-ray projection mitigates ultrasound’s key limitation while preserving its strengths as the primary imaging modality. Code and data will be made available upon acceptance.

MCML Authors

Redefining spectral unmixing for in-vivo brain tissue analysis from hyperspectral imaging.

CMMCA @MICCAI 2025 - Workshop on Computational Mathematics Modeling in Cancer Analysis at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

In this paper, we propose a methodology for extracting molecular tumor biomarkers from hyperspectral imaging (HSI), an emerging technology for intraoperative tissue assessment. To achieve this, we employ spectral unmixing, which decomposes the spectral signals recorded by the HSI camera into their constituent molecular components. Traditional unmixing approaches are based on physical models that establish a relationship between tissue molecules and the recorded spectra. However, these methods commonly assume a linear relationship between the spectra and molecular content, which does not capture the whole complexity of light-matter interaction. To address this limitation, we introduce a novel unmixing procedure that takes non-linear optical effects into account while preserving the computational benefits of linear spectral unmixing. We validate our methodology on an in-vivo brain tissue HSI dataset and demonstrate that the extracted molecular information leads to superior classification performance.

MCML Authors

Tubular Anatomy-Aware 3D Semantically Conditioned Image Synthesis.

DGM4 @MICCAI 2025 - 5th Deep Generative Models Workshop at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Deep generative models have shown promising potential in the medical field by providing synthetic data to help address data scarcity caused by privacy concerns or high annotation costs. Anatomy-conforming images can be synthesized using semantically conditioned image synthesis models. Recent state-of-the-art models perform the synthesis process in a compressed latent space to enable the generation of high-resolution 3D images. However, synthesizing fine-grained tubular structures such as vessels remains a significant challenge. In this paper, we propose a 3D latent generative model with semantic and tubular-aware conditioning. Our tubular-aware conditioning module leverages a custom cross-attention-based vessel encoding scheme to incorporate fine-grained structural information. We assess its performance on 3D coronary CTA images. Experimental evaluation demonstrates its superiority over conventional conditioning methods regarding the preservation of vessel structures. These results highlight the potential of our method and suggest that more advanced conditioning strategies, such as explicit modeling of tubular-structure-specific anomalies or fine details, could be explored in future work.

MCML Authors

A Study in Scatter: Investigating Low-Contrast Image Contents Outside the X-Ray Collimation.

MSB EMERGE @MICCAI 2025 - 2nd MICCAI Student Board Emerge Workshop at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. URL

Abstract

Fluoroscopy is a widely used modality that provides vision to surgeons in minimally invasive surgery, but inherently raises concerns about radiation exposure. Collimation is a technique to reduce exposure by narrowing the radiation to a smaller area, with the trade-off of field-of-view limitations. However, the constraint the collimator shutters form for the x-ray beam is not absolute. Due to the non-ideal properties of the x-ray imaging and collimation process, small amounts of radiation are detectable outside the collimated area. This is a source of additional information, freely available as a byproduct of the imaging process, yet currently left unregarded. We explore whether this information can be used to provide additional knowledge about the surgical scene. In particular, we investigate whether it can be used to detect and visualize anatomical landmarks and surgical devices outside of the collimated area. We discuss the origins of this phenomenon, and perform experiments to evaluate its properties under different x-ray source parameters. Using anthropomorphic phantoms and a set of surgical guidewires, we investigate how well and under which conditions different landmarks and devices can be visualized with the proposed concept. We hope this work can open a path to provide additional information to interventional radiologists, while making use of every bit of radiation the patient is exposed to.

MCML Authors

GroundingDINO for Open-Set Lesion Detection in Medical Imaging.

MSB EMERGE @MICCAI 2025 - 2nd MICCAI Student Board Emerge Workshop at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. URL

Abstract

Open-world anomaly detection is a task in which machine learning is well-positioned to advance cancer diagnosis, potentially leading to significantly improved survival rates. For a model to be used in clinical settings, it must demonstrate high performance, robustness, and generalisability. A common approach to achieving high generalisability is to incorporate information from broader representations within the model. In this work, we investigate the application of GroundingDINO to medical anomaly detection and localisation, evaluating both its overall performance and the influence of text prompts. We find that GroundingDINO outperforms the YOLOv11n model even with minimal use of contextual information. When exploring methods to introduce more contextual information, we observe that specifying the organ within the prompt improves closed-set performance on rarer lesion classes. However, adding visual descriptions of lesions during training leads to a significant performance drop on those subsets, indicating that the model memorises prompt-image pairs rather than learning meaningful semantic relationships. Our work highlights a critical limitation of GroundingDINO in medical imaging and proposes targeted modifications to the model architecture or training strategies as promising directions for utilising richer semantic prompts to improve anomaly detection.

MCML Authors

Evaluation of Deformable Image Registration Under Alignment-Regularity Trade-Off.

BRIDGE @MICCAI 2025 - Workshop on Bridging Regulatory Science and Medical AI at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI GitHub

Abstract

Evaluating deformable image registration (DIR) is challenging due to the inherent trade-off between achieving high alignment accuracy and maintaining deformation regularity. However, most existing DIR works either address this trade-off inadequately or overlook it altogether. In this paper, we highlight the issues with existing practices and propose an evaluation scheme that captures the trade-off continuously to holistically evaluate DIR methods. We first introduce the alignment-regularity characteristic (ARC) curves, which describe the performance of a given registration method as a spectrum under various degrees of regularity. We demonstrate that the ARC curves reveal unique insights that are not evident from existing evaluation practices, using experiments on representative deep learning DIR methods with various network architectures and transformation models. We further adopt a HyperNetwork-based approach that learns to continuously interpolate across the full regularization range, accelerating the construction and improving the sample density of ARC curves. Finally, we provide general guidelines for a nuanced model evaluation and selection using our evaluation scheme for both practitioners and registration researchers.

MCML Authors

GReAT: leveraging geometric artery data to improve wall shear stress assessment.

ShapeMI @MICCAI 2025 - Workshop on Shape in Medical Imaging at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. arXiv

Abstract

Leveraging big data for patient care is promising in many medical fields such as cardiovascular health. For example, hemodynamic biomarkers like wall shear stress could be assessed from patient-specific medical images via machine learning algorithms, bypassing the need for time-intensive computational fluid simulation. However, it is extremely challenging to amass large-enough datasets to effectively train such models. We could address this data scarcity by means of self-supervised pre-training and foundation models given large datasets of geometric artery models. In the context of coronary arteries, leveraging learned representations to improve hemodynamic biomarker assessment has not yet been well studied. In this work, we address this gap by investigating whether a large dataset (8449 shapes) consisting of geometric models of 3D blood vessels can benefit wall shear stress assessment in coronary artery models from a small-scale clinical trial (49 patients). We create a self-supervised target for the 3D blood vessels by computing the heat kernel signature, a quantity obtained via Laplacian eigenvectors, which captures the very essence of the shapes. We show how geometric representations learned from this dataset can boost segmentation of coronary arteries into regions of low, mid and high (time-averaged) wall shear stress even when trained on limited data.
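As background on the self-supervised target mentioned above, the heat kernel signature is commonly defined as follows. This is the textbook definition; the number of eigenpairs and the time scales used in the paper are not stated in the abstract, so the truncation index k is left generic.

```latex
% Heat kernel signature at surface point x and diffusion time t.
% (\lambda_i, \phi_i) are eigenvalue/eigenfunction pairs of the
% Laplace-Beltrami operator; in practice the sum is truncated at some k.
\mathrm{HKS}(x, t) = \sum_{i=0}^{k} e^{-\lambda_i t} \, \phi_i(x)^2
```

Because it depends only on the Laplacian spectrum, the signature is invariant to rigid motion of the shape and can be computed without any manual labels.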

MCML Authors

Mitigating Biases in Surgical Operating Rooms with Geometry.

COLAS @MICCAI 2025 - Workshop on Collaborative Intelligence and Autonomy in Image-guided Surgery at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. arXiv

Abstract

Deep neural networks are prone to learning spurious correlations, exploiting dataset-specific artifacts rather than meaningful features for prediction. In surgical operating rooms (OR), these manifest through the standardization of smocks and gowns that obscure robust identifying landmarks, introducing model bias for tasks related to modeling OR personnel. Through gradient-based saliency analysis on two public OR datasets, we reveal that CNN models succumb to such shortcuts, fixating on incidental visual cues such as footwear beneath surgical gowns, distinctive eyewear, or other role-specific identifiers. Avoiding such biases is essential for the next generation of intelligent assistance systems in the OR, which should accurately recognize personalized workflow traits, such as surgical skill level or coordination with other staff members. We address this problem by encoding personnel as 3D point cloud sequences, disentangling identity-relevant shape and motion patterns from appearance-based confounders. Our experiments demonstrate that while RGB and geometric methods achieve comparable performance on datasets with apparent simulation artifacts, RGB models suffer a 12% accuracy drop in realistic clinical settings with decreased visual diversity due to standardizations. This performance gap confirms that geometric representations capture more meaningful biometric features, providing an avenue to developing robust methods of modeling humans in the OR.

MCML Authors

TrackOR: Towards Personalized Intelligent Operating Rooms Through Robust Tracking.

COLAS @MICCAI 2025 - Workshop on Collaborative Intelligence and Autonomy in Image-guided Surgery at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. arXiv

Abstract

Providing intelligent support to surgical teams is a key frontier in automated surgical scene understanding, with the long-term goal of improving patient outcomes. Developing personalized intelligence for all staff members requires maintaining a consistent state of who is located where for long surgical procedures, which still poses numerous computational challenges. We propose TrackOR, a framework for tackling long-term multi-person tracking and re-identification in the operating room. TrackOR uses 3D geometric signatures to achieve state-of-the-art online tracking performance (+11% Association Accuracy over the strongest baseline), while also enabling an effective offline recovery process to create analysis-ready trajectories. Our work shows that by leveraging 3D geometric information, persistent identity tracking becomes attainable, enabling a critical shift towards the more granular, staff-centric analyses required for personalized intelligent systems in the operating room. This new capability opens up various applications, including our proposed temporal pathway imprints that translate raw tracking data into actionable insights for improving team efficiency and safety and ultimately providing personalized support.

MCML Authors

A Lightweight Optimization Framework for Estimating 3D Brain Tumor Infiltration.

CMMCA @MICCAI 2025 - Workshop on Computational Mathematics Modeling in Cancer Analysis at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Glioblastoma, the most aggressive primary brain tumor, poses a severe clinical challenge due to its diffuse microscopic infiltration, which remains largely undetected on standard MRI. As a result, current radiotherapy planning employs a uniform 15 mm margin around the resection cavity, failing to capture patient-specific tumor spread. Tumor growth modeling offers a promising approach to reveal this hidden infiltration. However, methods based on partial differential equations or physics-informed neural networks tend to be computationally intensive or overly constrained, limiting their clinical adaptability to individual patients. In this work, we propose a lightweight, rapid, and robust optimization framework that estimates the 3D tumor concentration by fitting it to MRI tumor segmentations while enforcing a smooth concentration landscape. This approach achieves superior tumor recurrence prediction on 192 brain tumor patients across two public datasets, outperforming state-of-the-art baselines while reducing runtime from 30 minutes to less than one minute. Furthermore, we demonstrate the framework’s versatility and adaptability by showing its ability to seamlessly integrate additional imaging modalities or physical constraints.

MCML Authors

From Fiber Tracts to Tumor Spread: Biophysical Modeling of Butterfly Glioma Growth Using Diffusion Tensor Imaging.

CDMRI @MICCAI 2025 - Workshop on Computational Diffusion MRI at 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. To be published. Preprint available. arXiv

Abstract

Butterfly tumors are a distinct class of gliomas that span the corpus callosum, producing a characteristic butterfly-shaped appearance on MRI. The distinctive growth pattern of these tumors highlights how white matter fibers and structural connectivity influence brain tumor cell migration. To investigate this relation, we applied biophysical tumor growth models to a large patient cohort, systematically comparing models that incorporate fiber tract information with those that do not. Our results demonstrate that including fiber orientation data significantly improves model accuracy, particularly for a subset of butterfly tumors. These findings highlight the critical role of white matter architecture in tumor spread and suggest that integrating fiber tract information can enhance the precision of radiotherapy target volume delineation.


Automated Constraint-Aware X-ray View Planning for Vascular Interventions Using Preoperative CTA.

CLIP @MICCAI 2025 - 14th International Workshop on Clinical Image-Based Procedures at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. DOI

Abstract

Accurate intraoperative imaging is essential for successful endovascular aneurysm repair (EVAR), enabling navigation of complex vascular anatomies and precise device placement. Surgeons often acquire multiple angiographic views, but manual viewpoint selection can lead to repeated C-arm repositioning, increased radiation exposure, and prolonged procedures. While recent methods automate view planning using vascular geometry and pose estimation, they often assume unrestricted C-arm mobility and overlook device-specific spatial constraints. In this work, we propose a novel constraint-aware, automated multi-view planning framework that leverages preoperative CTA data to generate optimized X-ray views tailored to procedural and equipment limitations. Our method starts with vessel segmentation, centerline extraction, and vessel graph construction. A planning route is defined along the target centerline, from which discrete points are sampled as local region centers. For each center, we define a region of interest and solve a constrained optimization problem to determine the optimal viewing orientation. The objective function combines two criteria: vessel spread area, computed via the convex hull area of the projected centerline, and inter-region projection separation, which promotes spatially clear views by minimizing overlap. We validated our framework on an in-house preoperative CTA dataset from 27 patients. Both qualitative and quantitative results demonstrate improved region visibility, spatial separation, and continuity of optimal viewing poses along the vascular path.
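To make the vessel-spread criterion in the abstract above more concrete, here is a minimal Python sketch of one way to score a viewing orientation by the convex hull area of the projected centerline and to search only within allowed C-arm angles. It is not the authors' implementation. The orthographic projection, the two-angle parameterization (`alpha_deg`, `beta_deg`), the grid search, and all function names are assumptions made for illustration, and the inter-region separation term of the objective is omitted.

```python
import math
from itertools import product


def view_basis(alpha_deg, beta_deg):
    """Return two orthonormal detector axes (u, v) for a viewing direction.

    Assumed parameterization: alpha rotates about the y axis, beta tilts
    towards y. This is a hypothetical convention, not taken from the paper.
    """
    a, b = math.radians(alpha_deg), math.radians(beta_deg)
    d = (math.sin(a) * math.cos(b), math.sin(b), math.cos(a) * math.cos(b))
    u = (math.cos(a), 0.0, -math.sin(a))  # unit vector perpendicular to d
    v = (d[1] * u[2], d[2] * u[0] - d[0] * u[2], -d[1] * u[0])  # v = d x u
    return u, v


def project(points, alpha_deg, beta_deg):
    """Orthographically project 3D points onto the detector plane."""
    u, v = view_basis(alpha_deg, beta_deg)
    return [(sum(p[i] * u[i] for i in range(3)),
             sum(p[i] * v[i] for i in range(3))) for p in points]


def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(pts))
    if len(pts) < 3:
        return pts

    def turn(o, p, q):
        return (p[0] - o[0]) * (q[1] - o[1]) - (p[1] - o[1]) * (q[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and turn(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return half(pts) + half(reversed(pts))


def polygon_area(poly):
    """Shoelace formula; degenerate polygons give zero area."""
    n = len(poly)
    s = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
            for i in range(n))
    return abs(s) / 2.0


def spread_area(points, alpha_deg, beta_deg):
    """Vessel spread: convex hull area of the projected centerline points."""
    return polygon_area(convex_hull(project(points, alpha_deg, beta_deg)))


def best_view(points, alpha_limits, beta_limits, step=10):
    """Grid search over angles inside the (assumed) C-arm limits."""
    best = None
    alphas = range(alpha_limits[0], alpha_limits[1] + 1, step)
    betas = range(beta_limits[0], beta_limits[1] + 1, step)
    for a, b in product(alphas, betas):
        area = spread_area(points, a, b)
        if best is None or area > best[2]:
            best = (a, b, area)
    return best
```

For example, `best_view(centerline_points, (-90, 90), (-30, 30))` returns the angle pair with the largest projected spread among the permitted orientations, where `centerline_points` is a hypothetical list of `(x, y, z)` tuples sampled from one region of interest. A constrained continuous optimizer could replace the grid search; the grid is used here only to keep the sketch short.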


#research #top-tier-work #navab #rueckert #schnabel #schneider #schueffler #wachinger

Related

09.10.2025

Rethinking AI in Public Institutions - Balancing Prediction and Capacity

Unai Fischer Abaigar explores how AI can make public decisions fairer, smarter, and more effective.

08.10.2025

MCML-LAMARR Workshop at University of Bonn

MCML and Lamarr researchers met in Bonn to exchange ideas on NLP, LLM finetuning, and AI ethics.

08.10.2025

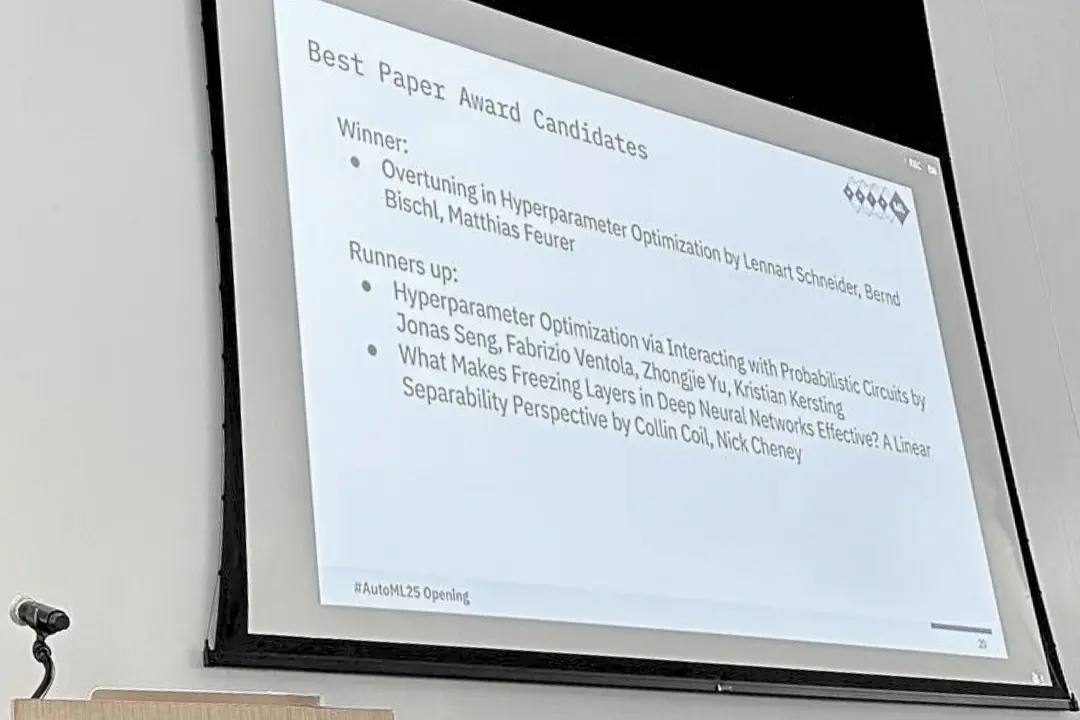

Three MCML Members Win Best Paper Award at AutoML 2025

MCML PI Matthias Feurer and Director Bernd Bischl’s paper on overtuning won Best Paper at AutoML 2025, offering insights for robust HPO.

29.09.2025

Machine Learning for Climate Action - With Researcher Kerstin Forster

Kerstin Forster researches how AI can cut emissions, boost renewable energy, and drive corporate sustainability.