02.05.2025

MCML at AISTATS 2025: Five Accepted Papers

28th International Conference on Artificial Intelligence and Statistics (AISTATS 2025). Mai Khao, Thailand, 03.05.2025–05.05.2025

We are happy to announce that MCML researchers have contributed five papers to AISTATS 2025. Congrats to our researchers!

Main Track (5 papers)

Get rid of your constraints and reparametrize: A study in NNLS and implicit bias.

AISTATS 2025 - 28th International Conference on Artificial Intelligence and Statistics. Mai Khao, Thailand, May 03-05, 2025.

Abstract

Over the past years, there has been significant interest in understanding the implicit bias of gradient descent optimization and its connection to the generalization properties of overparametrized neural networks. Several works observed that when training linear diagonal networks on the square loss for regression tasks (which corresponds to overparametrized linear regression), gradient descent converges to special solutions, e.g., non-negative ones. We connect this observation to Riemannian optimization and view overparametrized GD with identical initialization as a Riemannian GD. We use this fact to solve non-negative least squares (NNLS), an important problem behind many techniques, e.g., non-negative matrix factorization. We show that gradient flow on the reparametrized objective converges globally to NNLS solutions, and we provide convergence rates for its discretized counterpart as well. Unlike previous methods, we do not rely on the calculation of exponential maps or geodesics. We further show accelerated convergence using a second-order ODE, lending itself to accelerated descent methods. Finally, we establish stability against negative perturbations and discuss generalization to other constrained optimization problems.
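To make the reparametrization idea concrete, here is a minimal sketch of plain gradient descent on a reparametrized NNLS objective. It assumes the common Hadamard-square parametrization x = u ⊙ u (the paper may use a different or more general factorization), a fixed step size, and an identical initialization of all entries of u. The function name `nnls_reparam_gd` and the parameters `step`, `iters`, and `init` are hypothetical and chosen for illustration only; this is not the authors' implementation.

```python
import numpy as np


def nnls_reparam_gd(A, b, step=0.1, iters=2000, init=1.0):
    """Sketch: minimize 0.5 * ||A x - b||^2 subject to x >= 0 by running
    unconstrained gradient descent on u, where x = u * u (elementwise).

    Assumptions (not taken from the paper): Hadamard-square parametrization,
    a fixed step size that is small enough for the problem at hand, and an
    identical positive initialization for every entry of u.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    u = np.full(A.shape[1], float(init))  # identical initialization
    for _ in range(iters):
        residual = A @ (u * u) - b
        # Chain rule: d/du of 0.5 * ||A (u*u) - b||^2 is 2 * u * (A^T residual)
        grad_u = 2.0 * u * (A.T @ residual)
        u = u - step * grad_u
    # x = u * u is non-negative by construction, so no projection is needed
    return u * u
```

Calling `nnls_reparam_gd(np.eye(2), np.array([2.0, -1.0]))` drives the second coordinate toward zero, because its unconstrained optimum is negative, while the first coordinate approaches 2. The step size that keeps the iteration stable depends on the scale of `A` and `b`, so it has to be tuned per problem in this sketch.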

MCML Authors

Paths and Ambient Spaces in Neural Loss Landscapes.

AISTATS 2025 - 28th International Conference on Artificial Intelligence and Statistics. Mai Khao, Thailand, May 03-05, 2025.

Abstract

Understanding the structure of neural network loss surfaces, particularly the emergence of low-loss tunnels, is critical for advancing neural network theory and practice. In this paper, we propose a novel approach to directly embed loss tunnels into the loss landscape of neural networks. Exploring the properties of these loss tunnels offers new insights into their length and structure and sheds light on some common misconceptions. We then apply our approach to Bayesian neural networks, where we improve subspace inference by identifying pitfalls and proposing a more natural prior that better guides the sampling procedure.

MCML Authors

Incremental Uncertainty-aware Performance Monitoring with Active Labeling Intervention.

AISTATS 2025 - 28th International Conference on Artificial Intelligence and Statistics. Mai Khao, Thailand, May 03-05, 2025.

Abstract

We study the problem of monitoring machine learning models under gradual distribution shifts, where circumstances change slowly over time, often leading to unnoticed yet significant declines in accuracy. To address this, we propose Incremental Uncertainty-aware Performance Monitoring (IUPM), a novel label-free method that estimates performance changes by modeling gradual shifts using optimal transport. In addition, IUPM quantifies the uncertainty in the performance prediction and introduces an active labeling procedure to restore a reliable estimate under a limited labeling budget. Our experiments show that IUPM outperforms existing performance estimation baselines in various gradual shift scenarios and that its uncertainty awareness guides label acquisition more effectively than other strategies.

MCML Authors

Solving Estimating Equations With Copulas.

AISTATS 2025 - 28th International Conference on Artificial Intelligence and Statistics. Mai Khao, Thailand, May 03-05, 2025.

Abstract

Thanks to their ability to capture complex dependence structures, copulas are frequently used to glue random variables into a joint model with arbitrary marginal distributions. More recently, they have been applied to solve statistical learning problems such as regression or classification. Framing such approaches as solutions of estimating equations, we generalize them in a unified framework. We can then obtain simultaneous, coherent inferences across multiple regression-like problems. We derive consistency, asymptotic normality, and validity of the bootstrap for corresponding estimators. The conditions allow for both continuous and discrete data as well as parametric, nonparametric, and semiparametric estimators of the copula and marginal distributions. The versatility of this methodology is illustrated by several theoretical examples, a simulation study, and an application to financial portfolio allocation. Supplementary materials for this article are available online.

MCML Authors

Additive Model Boosting: New Insights and Path(ologie)s.

AISTATS 2025 - 28th International Conference on Artificial Intelligence and Statistics. Mai Khao, Thailand, May 03-05, 2025. Oral Presentation.

Abstract

Additive models (AMs) have sparked a lot of interest in machine learning recently, allowing the incorporation of interpretable structures into a wide range of model classes. Many commonly used approaches to fit a wide variety of potentially complex additive models build on the idea of boosting additive models. While boosted additive models (BAMs) work well in practice, certain theoretical aspects are still poorly understood, including general convergence behavior and what optimization problem is being solved when accounting for the implicit regularizing nature of boosting. In this work, we study the solution paths of BAMs and establish connections with other approaches for certain classes of problems. Along these lines, we derive novel convergence results for BAMs, which yield crucial insights into the inner workings of the method. While our results generally provide reassuring theoretical evidence for the practical use of BAMs, they also uncover some ‘pathologies’ of boosting for certain additive model classes concerning their convergence behavior that require caution in practice. We empirically validate our theoretical findings through several numerical experiments.
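For readers unfamiliar with boosting additive models, the following sketch shows one simple variant: component-wise L2 boosting with linear base learners, recording the coefficient path along the way. This is an illustrative assumption about what a boosted additive model can look like, not the specific algorithms or model classes analyzed in the paper. The names `componentwise_l2_boost`, `n_steps`, and `learning_rate` are hypothetical.

```python
import numpy as np


def componentwise_l2_boost(X, y, n_steps=200, learning_rate=0.1):
    """Sketch of component-wise L2 boosting with one linear base learner
    per feature. Assumes every column of X has a non-zero norm.

    Returns the final coefficients and the full solution path
    (one row per boosting iteration, starting from all zeros).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    coef = np.zeros(X.shape[1])
    path = [coef.copy()]
    col_sq_norms = (X ** 2).sum(axis=0)
    for _ in range(n_steps):
        residual = y - X @ coef
        # Least-squares fit of each single-feature base learner to the residual
        betas = (X.T @ residual) / col_sq_norms
        # Reduction in residual sum of squares achieved by each base learner
        gains = betas ** 2 * col_sq_norms
        best = int(np.argmax(gains))
        # Only the best component is updated, shrunk by the learning rate
        coef[best] += learning_rate * betas[best]
        path.append(coef.copy())
    return coef, np.array(path)
```

Stopping the loop early leaves most coefficients at zero or heavily shrunk, which is one way to see the implicit regularization mentioned in the abstract. The rows of the returned path trace how the fit evolves with the number of boosting steps.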

MCML Authors

#research #top-tier-work #maly #ruegamer #tresp

Related

09.10.2025

Rethinking AI in Public Institutions - Balancing Prediction and Capacity

Unai Fischer Abaigar explores how AI can make public decisions fairer, smarter, and more effective.

08.10.2025

MCML-LAMARR Workshop at University of Bonn

MCML and Lamarr researchers met in Bonn to exchange ideas on NLP, LLM finetuning, and AI ethics.

08.10.2025

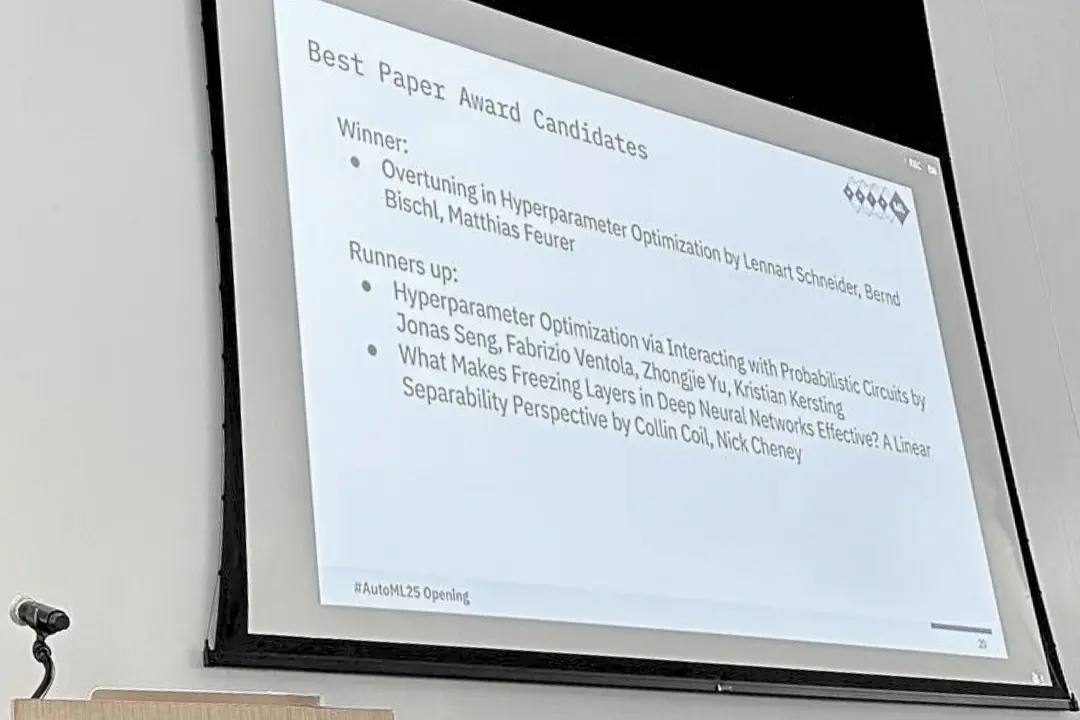

Three MCML Members Win Best Paper Award at AutoML 2025

Former MCML TBF Matthias Feurer and Director Bernd Bischl’s paper on overtuning won Best Paper at AutoML 2025, offering insights for robust HPO.

29.09.2025

Machine Learning for Climate Action - With Researcher Kerstin Forster

Kerstin Forster researches how AI can cut emissions, boost renewable energy, and drive corporate sustainability.