Research Group Sven Nyholm

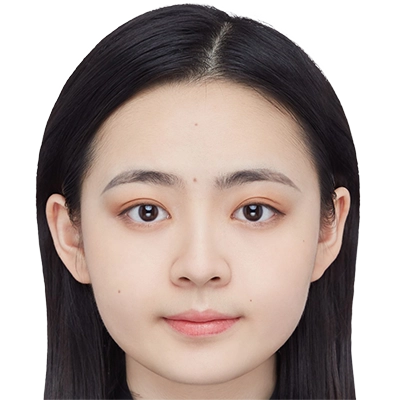

Sven Nyholm is Professor of Ethics of Artificial Intelligence at LMU Munich.

His research and teaching encompass applied ethics (particularly, but not exclusively, ethics of artificial intelligence), practical philosophy, and philosophy of technology. Currently, he is working on his fourth book, which will be about the ethics of artificial intelligence. His previous books were concerned with Kantian ethics, the ethics of human-robot interactions and the ethics of technology.

Team members @MCML

PhD Students

Publications @MCML

2026

[14]

S. Nyholm

The Ethics of Artificial Intelligence: A Philosophical Introduction.

Hackett Publishing Company. Mar. 2026. URL

[13]

S. Nyholm • A. Kasirzadeh • J. Zerilli

Contemporary Debates in the Ethics of Artificial Intelligence.

Contemporary Debates in Philosophy. Jan. 2026. URL

[12]

R. Hakli • S. Nyholm • M. Nørskov

Introduction: Culturally Sustainable Social Robotics?

Social Robots and Cultural Sustainability. Jan. 2026. DOI

[11]

S. Nyholm

Social Robots: Culturally Sustainable or Socially Disruptive? The Case of Humanoid Robots.

Social Robots and Cultural Sustainability. Jan. 2026. DOI

2025

[10]

M. Kiener • J. Bozenhard • G. Kutyniok • S. Nyholm

The ethics of analog AI.

AI and Ethics 6.27. Dec. 2025. DOI

[9]

D. Gong

Closing the responsibility gap: allocating responsibility according to prerequisite control and expectations for personal benefits.

Ethics and Information Technology 28.8. Dec. 2025. DOI

[8]

S. Campbell • P. Liu • S. Nyholm

Can Chatbots Preserve Our Relationships with the Dead?

Journal of the American Philosophical Association 11.2. Jun. 2025. DOI

[7]

C. Hurshman • C. Voinea • W. Constantinescu • F. Feroz • S. Hongladarom • P. Jurcys • A. Kozlovski • B. Lange • P. Liu • J. Menikoff • S. Nyholm • A. Puzio • P. Sweeney • B. G. Sharma • E. Schwitzgebel • C. Vica • D. E. Weissglass • A. Zahiu • J. Savulescu • W. Sinnott-Armstrong • S. P. Mann • B. D. Earp

Beyond Deepfakes: 'Digital duplicates' or AI Simulations of Real People-An International Ethical Consensus.

Preprint (Mar. 2025). DOI

[6]

B. D. Earp • S. P. Mann • M. Aboy • E. Awad • M. Betzler • M. Botes • R. Calcott • M. Caraccio • N. Chater • M. Coeckelbergh • M. Constantinescu • H. Dabbagh • K. Devlin • X. Ding • V. Dranseika • J. A. C. Everett • R. Fan • F. Feroz • K. B. Francis • C. Friedman • O. Friedrich • I. Gabriel • I. Hannikainen • J. Hellmann • A. K. Jahrome • N. S. Janardhanan • P. Jurcys • A. Kappes • M. A. Khan • G. Kraft-Todd • M. Kroner Dale • S. M. Laham • B. Lange • M. Leuenberger • J. Lewis • P. Liu • D. M. Lyreskog • M. Maas • J. McMillan • E. Mihailov • T. Minssen • J. Teperowski Monrad • K. Muyskens • S. Myers • S. Nyholm • A. M. Owen • A. Puzio • C. Register • M. G. Reinecke • A. Safron • H. Shevlin • H. Shimizu • P. V. Treit • C. Voinea • K. Yan • A. Zahiu • R. Zhang • H. Zohny • W. Sinnott-Armstrong • I. Singh • J. Savulescu • M. S. Clark

Relational Norms for Human-AI Cooperation.

Preprint (Feb. 2025). arXiv

2024

[5]

S. Nyholm

Digital Duplicates and Personal Scarcity: Reply to Voinea et al. and Lundgren.

Philosophy & Technology 37.132. Nov. 2024. DOI

[4]

S. Milano • S. Nyholm

Advanced AI assistants that act on our behalf may not be ethically or legally feasible.

Nature Machine Intelligence 6. Jul. 2024. DOI

2023

[3]

B. H. Lang • S. Nyholm • J. Blumenthal-Barby

Responsibility Gaps and Black Box Healthcare AI: Shared Responsibilization as a Solution.

Digital Society 2.52. Nov. 2023. DOI

[2]

J. Smids • H. Berkers • P. Le Blanc • S. Rispens • S. Nyholm

Employers Have a Duty of Beneficence to Design for Meaningful Work: A General Argument and Logistics Warehouses as a Case Study.

The Journal of Ethics. Oct. 2023. DOI

[1]

S. Nyholm

Is Academic Enhancement Possible by Means of Generative AI-Based Digital Twins?

American Journal of Bioethics 23.10. Sep. 2023. DOI

© All images: LMU | TUM