Research Group Michael Ingrisch

Michael Ingrisch leads the group for Clinical Data Science at the Department of Radiology at LMU Munich.

His team applies advanced statistics, machine learning, and computer vision to clinical radiology to enable fast and precise AI-supported diagnosis and prognostication. Its research covers computer vision for radiological diagnosis and prognosis and biostatistical methods for the rigorous analysis of clinical data. The group also uses large language models for clinical text analysis and develops multimodal deep learning models that integrate diverse data types, such as imaging and text, to improve model accuracy and applicability.

Publications @MCML

2025

AutoPET Challenge on Fully Automated Lesion Segmentation in Oncologic PET/CT Imaging, Part 2: Domain Generalization.

Journal of Nuclear Medicine. Dec. 2025. DOI

Artificial intelligence for TNM staging in NSCLC: a critical appraisal of segmentation utility in [¹⁸F]FDG PET/CT.

European Journal of Nuclear Medicine and Molecular Imaging. Nov. 2025. DOI

Fast machine learning image reconstruction of radially undersampled k-space data for low-latency real-time MRI.

PLOS One 20.11. Nov. 2025. DOI

3D deep learning-based muscle volume quantification from thoracic CT as a surrogate for DXA-Derived appendicular muscle mass in older adults.

Aging Clinical and Experimental Research 37.296. Oct. 2025. DOI

Multi-Task Deep Learning for Head and Neck Cancer: Segmentation, Survival Prediction, and HPV Classification in the HECKTOR 2025 Challenge.

HECKTOR @MICCAI 2025 - 4th Head and Neck Cancer Tumor Lesion Segmentation, Diagnosis and Prognosis Challenge at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention. Daejeon, Republic of Korea, Sep 23-27, 2025. URL GitHub

The effect of medical explanations from large language models on diagnostic accuracy in radiology.

Preprint (Aug. 2025). DOI

Preventing Sensitive Information Leakage via Post-hoc Orthogonalization with Application to Chest Radiograph Embeddings.

PAKDD 2025 - 29th Pacific-Asia Conference on Knowledge Discovery and Data Mining. Sydney, Australia, Jun 10-13, 2025. DOI GitHub

PARADIM: A Platform to Support Research at the Interface of Data Science and Medical Imaging.

Journal of Imaging Informatics in Medicine. Jun. 2025. DOI

Radiomics-based differentiation of upper urinary tract urothelial and renal cell carcinoma in preoperative computed tomography datasets.

BMC Medical Imaging 25.196. May 2025. DOI

Optimizing lower extremity CT angiography: A prospective study of individualized vs. fixed post-trigger delays in bolus tracking.

European Journal of Radiology 185.112009. Apr. 2025. DOI

The effect of medical explanations from large language models on diagnostic decisions in radiology.

Preprint (Mar. 2025). DOI

Breast cancer risk prediction using background parenchymal enhancement, radiomics, and symmetry features on MRI.

SPIE 2025 - SPIE Medical Imaging: Computer-Aided Diagnosis. San Diego, CA, USA, Feb 16-21, 2025. DOI

Pitfalls with anomaly detection for breast cancer risk prediction.

SPIE 2025 - SPIE Medical Imaging: Computer-Aided Diagnosis. San Diego, CA, USA, Feb 16-21, 2025. DOI

Replication study of PD-L1 status prediction in NSCLC using PET/CT radiomics.

European Journal of Radiology 183.111825. Feb. 2025. DOI

Effect of artificial intelligence-aided differentiation of adenomatous and non-adenomatous colorectal polyps at CT colonography on radiologists’ therapy management.

European Radiology 35.7. Jan. 2025. DOI

A probability model for estimating age in young individuals relative to key legal thresholds: 15, 18 or 21-year.

International Journal of Legal Medicine 139.1. Jan. 2025. DOI

Post-Training Network Compression for 3D Medical Image Segmentation: Reducing Computational Efforts via Tucker Decomposition.

Radiology: Artificial Intelligence 7.2. Jan. 2025. DOI

2024

Artificial intelligence–based rapid brain volumetry substantially improves differential diagnosis in dementia.

Alzheimer’s and Dementia 16.e70037. Oct. 2024. DOI

Software-assisted structured reporting and semi-automated TNM classification for NSCLC staging in a multicenter proof of concept study.

Insights into Imaging 15.258. Oct. 2024. DOI

Results from the autoPET challenge on fully automated lesion segmentation in oncologic PET/CT imaging.

Nature Machine Intelligence 6. Oct. 2024. DOI

Automatische ICD-10-Codierung.

Die Radiologie 64. Aug. 2024. DOI

MRI-based ventilation and perfusion imaging to predict radiation-induced pneumonitis in lung tumor patients at a 0.35T MR-Linac.

Radiotherapy and Oncology. Aug. 2024. DOI

Towards Aleatoric and Epistemic Uncertainty in Medical Image Classification.

AIME 2024 - 22nd International Conference on Artificial Intelligence in Medicine. Salt Lake City, UT, USA, Jul 09-12, 2024. DOI

Using Ventilation and Perfusion MRI at a 0.35 T MR-Linac to Predict Radiation-Induced Pneumonitis in Lung Cancer Patients.

ISMRM 2024 - International Society for Magnetic Resonance in Medicine Annual Meeting. Singapore, May 04-09, 2024. URL

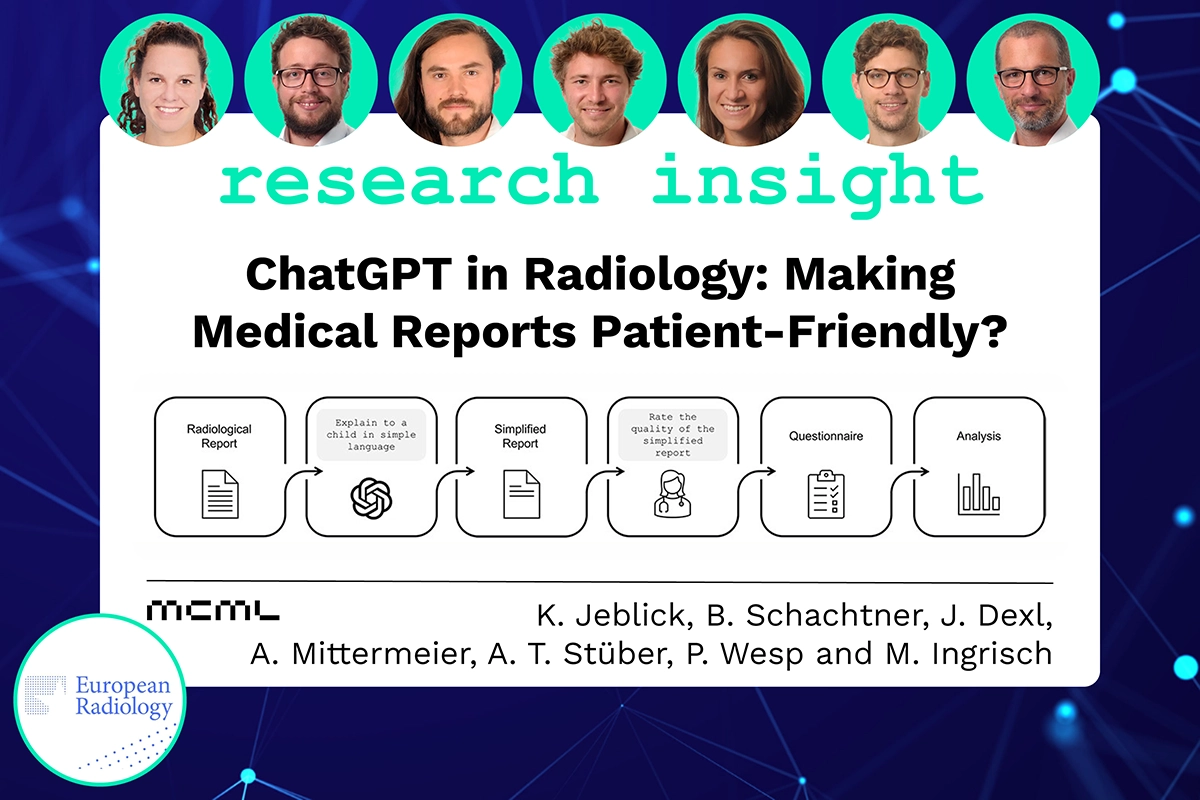

ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports.

European Radiology 34. May 2024. DOI

Application of machine learning in CT colonography and radiological age assessment: enhancing traditional diagnostics in radiology.

Dissertation LMU München. Mar. 2024. DOI

Computer vision-based guidance tool for correct radiographic hand positioning.

SPIE 2024 - SPIE Medical Imaging: Image Perception, Observer Performance, and Technology Assessment. San Diego, CA, USA, Feb 18-22, 2024. DOI

Deformable current-prior registration of DCE breast MR images on multi-site data.

SPIE 2024 - SPIE Medical Imaging: Image Processing. San Diego, CA, USA, Feb 18-22, 2024. DOI

Constrained Probabilistic Mask Learning for Task-specific Undersampled MRI Reconstruction.

WACV 2024 - IEEE/CVF Winter Conference on Applications of Computer Vision. Waikoloa, Hawaii, Jan 04-08, 2024. DOI

Radiological age assessment based on clavicle ossification in CT: enhanced accuracy through deep learning.

International Journal of Legal Medicine. Jan. 2024. DOI

2023

A comprehensive machine learning benchmark study for radiomics-based survival analysis of CT imaging data in patients with hepatic metastases of CRC.

Investigative Radiology 58.12. Dec. 2023. DOI

Post-hoc Orthogonalization for Mitigation of Protected Feature Bias in CXR Embeddings.

Preprint (Nov. 2023). arXiv

Revitalize the Potential of Radiomics: Interpretation and Feature Stability in Medical Imaging Analyses through Groupwise Feature Importance.

LB-D-DC 2023 @xAI 2023 - Late-breaking Work, Demos and Doctoral Consortium at the 1st World Conference on eXplainable Artificial Intelligence. Lisbon, Portugal, Jul 26-28, 2023. PDF

Cascaded Latent Diffusion Models for High-Resolution Chest X-ray Synthesis.

PAKDD 2023 - 27th Pacific-Asia Conference on Knowledge Discovery and Data Mining. Osaka, Japan, May 25-28, 2023. DOI

Robust evaluation of contrast-enhanced imaging for perfusion quantification.

Dissertation LMU München. May 2023. DOI

2022

Implicit Embeddings via GAN Inversion for High Resolution Chest Radiographs.

MAD @MICCAI 2022 - 1st Workshop on Medical Applications with Disentanglements at the 25th International Conference on Medical Image Computing and Computer Assisted Intervention. Singapore, Sep 18-22, 2022. DOI

2021

Survival-oriented embeddings for improving accessibility to complex data structures.

Bridging the Gap: from ML Research to Clinical Practice @NeurIPS 2021 - Workshop on Bridging the Gap: from Machine Learning Research to Clinical Practice at the 35th Conference on Neural Information Processing Systems. Virtual, Dec 06-14, 2021. arXiv

Towards modelling hazard factors in unstructured data spaces using gradient-based latent interpolation.

Deep Generative Models and Downstream Applications @NeurIPS 2021 - Workshop on Deep Generative Models and Downstream Applications at the 35th Conference on Neural Information Processing Systems. Virtual, Dec 06-14, 2021. PDF

Bi-Centric Independent Validation of Outcome Prediction after Radioembolization of Primary and Secondary Liver Cancer.

Journal of Clinical Medicine 10.16. Aug. 2021. DOI
