27.02.2025

ChatGPT in Radiology: Making Medical Reports Patient-Friendly?

MCML Research Insight - With Katharina Jeblick, Balthasar Schachtner, Jakob Dexl, Andreas Mittermeier, Anna Theresa Stüber, Philipp Wesp and MCML PI Michael Ingrisch

Medical reports, especially in radiology, are often difficult for patients to understand. Filled with specialized terminology, these reports are written primarily for medical professionals, which can leave patients struggling to make sense of their diagnoses. But what if AI could help? Recognizing this potential early on, the team of our PI Michael Ingrisch launched a study just four weeks after ChatGPT’s release to explore whether it could simplify radiology reports and make them more accessible. The study has now been published in European Radiology.

«ChatGPT, what does this medical report mean? Can you explain it to me like I’m five?»

Katharina Jeblick et al.

MCML Junior Members

The Study: ChatGPT as a Medical Translator

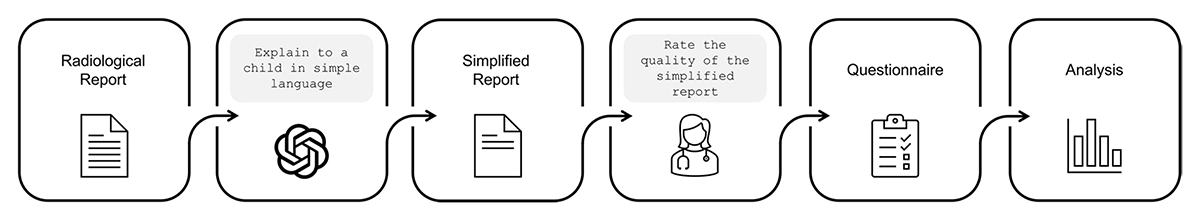

The research team, including MCML Junior Members Katharina Jeblick, Balthasar Schachtner, Jakob Dexl, Andreas Mittermeier, Anna Theresa Stüber and Philipp Wesp as well as MCML PI Michael Ingrisch, explored whether ChatGPT could simplify radiology reports effectively while preserving factual accuracy. They took three fictitious radiology reports and asked ChatGPT to rewrite them in plain language, as if explaining them to a child. Fifteen radiologists then evaluated the simplified reports on three key criteria:

- Factual correctness – Did ChatGPT get the medical facts right?

- Completeness – Did it include all the relevant medical information?

- Potential harm – Could the simplified reports mislead patients or cause unnecessary worry?

©K. Jeblick et al.

The workflow of the exploratory case study

The Results: Promise With a Side of Caution

The radiologists found that, in most cases, the simplified reports produced by ChatGPT were factually correct, fairly complete, and unlikely to cause harm. This suggests that AI could make medical information more accessible to patients. However, the radiologists also identified notable issues:

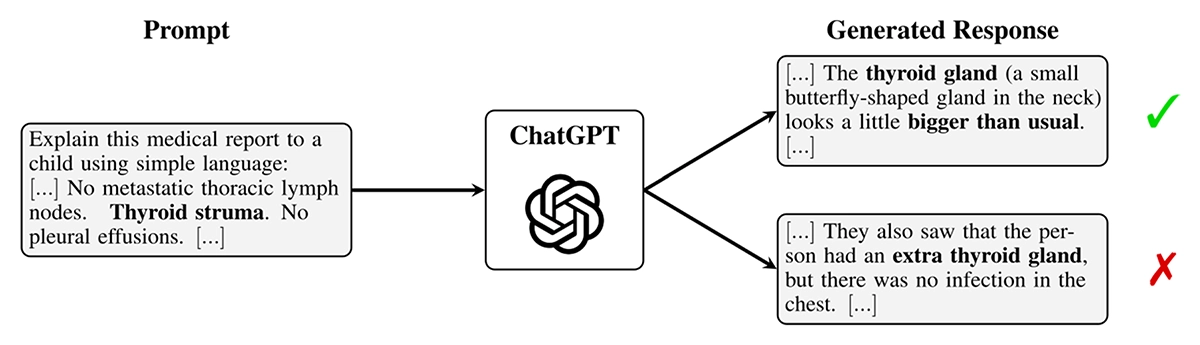

- Misinterpretation of medical terms – Some simplified reports changed the meaning of critical terms, leading to potential misunderstandings.

- Missing details – Important medical findings were sometimes omitted, which could mislead patients.

- AI “hallucinations” – ChatGPT occasionally added false or misleading information that wasn’t in the original report.

©K. Jeblick et al.

Example of a hallucination: even when a response sounds plausible, its content is not necessarily true, as the response at the bottom shows.

«While we see a need for further adaption to the medical field, the initial insights of this study indicate a tremendous potential in using LLMs like ChatGPT to improve patient-centered care in radiology and other medical domains.»

Katharina Jeblick et al.

MCML Junior Members

What’s Next?

The study highlights a significant opportunity for AI to bridge the gap between complex medical language and patient understanding. However, it also underlines the need for expert supervision: AI-generated reports should not replace human interpretation but should instead serve as a tool that helps doctors communicate with patients.

With further improvements and medical oversight, AI-powered text simplification could revolutionize how patients engage with their health information—making medicine easier to “swallow” for everyone.

Read More

Interested in a closer look at this research? Read more in another blog post on the website of the European Society of Radiology (ESR): ChatGPT Makes Medicine Easy to Swallow.

Explore the full paper published in the renowned, peer-reviewed medical journal European Radiology:

ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports.

European Radiology 34, May 2024. DOI

Share Your Research!

Are you an MCML Junior Member and interested in showcasing your research on our blog?

We’re happy to feature your work—get in touch with us to present your paper.
