15.01.2025

TruthQuest – A New Benchmark for AI Reasoning

MCML Research Insight - With Philipp Mondorf and Barbara Plank

In their recent work, “Liar, Liar, Logical Mire: A Benchmark for Suppositional Reasoning in Large Language Models,” our Junior Member Philipp Mondorf and our PI Barbara Plank tackle a fascinating question: How well do AI systems handle complex reasoning tasks?

«We introduce TruthQuest, a benchmark for suppositional reasoning based on the principles of knights and knaves puzzles.»

Philipp Mondorf

MCML Junior Member

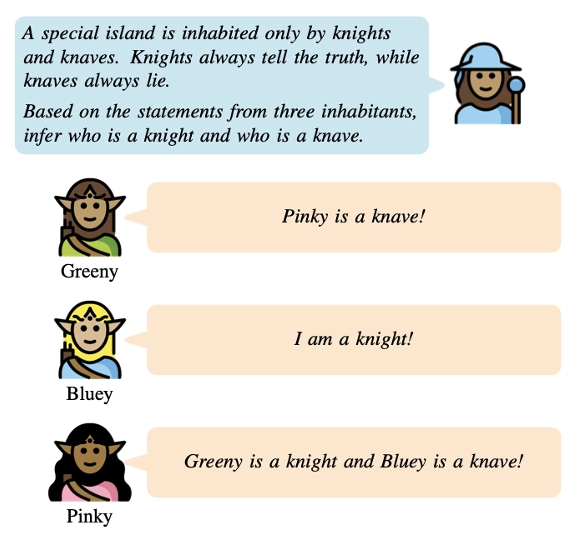

To answer this question, the paper introduces TruthQuest, a benchmark designed to evaluate large language models (LLMs) using a classic logic puzzle framework: knights and knaves. In these puzzles, knights always tell the truth, while knaves always lie. The goal, and the challenge, is to deduce the identity of each character from their statements. Solving such puzzles requires more than straightforward deduction: the solver must suppose that a character is a knight or a knave, follow the logical implications of that supposition, and discard it if it leads to a contradiction.
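The suppositional reasoning described above can be made concrete with a small brute-force sketch: suppose a knight or knave role for every character, then keep only the suppositions in which each knight's statement is true and each knave's statement is false. The puzzle, the character names `A` and `B`, and the helper `solve` are illustrative assumptions and are not taken from the TruthQuest benchmark.

```python
from itertools import product
from typing import Callable, Dict, List

# An assignment maps each character name to True (knight) or False (knave).
Assignment = Dict[str, bool]
# A statement is a predicate: is the claim true under a given assignment?
Statement = Callable[[Assignment], bool]


def solve(statements: Dict[str, Statement]) -> List[Assignment]:
    """Return every assignment consistent with all statements (hypothetical helper)."""
    names = sorted(statements)
    solutions = []
    for values in product([True, False], repeat=len(names)):
        world = dict(zip(names, values))
        # A knight's statement must be true and a knave's must be false,
        # so each speaker's role must equal the truth value of their claim.
        if all(world[name] == statements[name](world) for name in names):
            solutions.append(world)
    return solutions


# Hypothetical two-character puzzle (not from the benchmark):
#   A says: "B is a knave."
#   B says: "A and I are both knights."
puzzle = {
    "A": lambda w: not w["B"],
    "B": lambda w: w["A"] and w["B"],
}

for solution in solve(puzzle):
    print({name: "knight" if is_knight else "knave"
           for name, is_knight in solution.items()})
```

For this toy puzzle, the only consistent supposition is that A is a knight and B is a knave. Exhaustive enumeration is trivial for a program; the benchmark asks whether a language model can carry out the same kind of hypothesize-and-check reasoning in natural language.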

The authors rigorously tested prominent LLMs, including models from the Llama series (Llama 2 and Llama 3) and the Mixtral family, across puzzles of varying complexity. Notably, these puzzles included statements ranging from straightforward self-references to intricate logical equivalences and implications. The study reveals that even the most advanced models face significant challenges when reasoning through these puzzles, particularly as the complexity increases. Performance was measured not only in terms of accuracy but also through error analysis, uncovering patterns in how models reason and where they fail. Errors ranged from basic misunderstandings about truth and lies to struggles with deducing the implications of potentially false statements. While some models showed promise with advanced techniques like chain-of-thought prompting, their accuracy dropped sharply as the number of characters grew and the logical relationships became more intricate.

«Our benchmark presents problems of varying complexity, considering both the number of characters and the types of logical statements involved.»

Philipp Mondorf

MCML Junior Member

Under zero-shot conditions, where models must solve puzzles without any worked examples in the prompt, performance was close to random guessing for most LLMs, even the larger ones. However, Llama 3-70B outperformed the other models, particularly when aided by advanced prompting techniques like chain-of-thought (CoT). This technique breaks the reasoning process down into explicit steps, which substantially improved the model's accuracy on simpler puzzles.

For puzzles involving more characters or complex logical structures, the models struggled universally. Errors multiplied when tasks required analyzing the implications of false statements or balancing multiple hypothetical scenarios. For instance, when dealing with puzzles featuring five or six characters, even Llama 3-70B, the top-performing model, saw a steep drop in accuracy.
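One way to see why additional characters make the task harder is to look at the size of the search space: with n characters there are 2^n possible knight/knave assignments. The sketch below computes the chance of guessing a complete assignment correctly. It assumes, purely for illustration, that a puzzle counts as solved only when every character is identified correctly and that guesses are uniform; the function name is hypothetical, and the paper's own scoring may differ.

```python
def random_guess_accuracy(num_characters: int) -> float:
    """Chance of guessing every character's role correctly (illustrative assumption)."""
    if num_characters < 1:
        raise ValueError("a puzzle needs at least one character")
    # Each character is independently a knight or a knave: 2 ** n assignments.
    return 1 / 2 ** num_characters


for n in range(2, 7):
    print(f"{n} characters: {2 ** n} assignments, "
          f"random-guess accuracy {random_guess_accuracy(n):.3f}")
```

Under these assumptions, each added character halves the random baseline, so a model has to rule out exponentially more hypothetical scenarios as puzzles grow.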

©Philipp Mondorf & Barbara Plank

An instance of the knights & knaves puzzle. By reasoning about the characters’ statements and their truthfulness, it is possible to deduce that Greeny and Bluey must be knights, while Pinky is a knave.

Error analysis offered fascinating insights into the capabilities and limitations of current LLMs. Lower-performing models, such as Llama 2-7B, displayed a diverse range of reasoning flaws, from failing to recognize the basic distinction between truth and lies to misinterpreting logical operators. In contrast, higher-performing models like Llama 3-70B primarily struggled with deducing the implications of potentially false statements.

Why focus on such puzzles? Knights and knaves problems, despite their simplicity, expose fundamental capabilities, or limitations, of AI systems in reasoning through uncertainty and contradiction. These skills are crucial for real-world applications, from autonomous decision-making systems to conversational AI, where navigating ambiguity is a daily challenge.

«TruthQuest evaluates whether models can hypothesize, evaluate, and conclude: a more human-like reasoning approach.»

Philipp Mondorf

MCML Junior Member

Additionally, the benchmark illuminates the current state of AI reasoning in a novel way. Unlike many standard datasets that test pre-defined deductive steps, TruthQuest evaluates whether models can hypothesize, evaluate, and conclude: a more human-like reasoning approach.

The journey doesn’t end here. The authors suggest several exciting directions for future research:

- Expanding the benchmark to include puzzles with multiple valid solutions or no solution at all, adding another layer of complexity.

- Introducing new character types with unique truth-telling behaviors, such as “logicians” who always reason correctly or “politicians” who never do, to further challenge models.

- Developing even more sophisticated prompting techniques, such as Tree-of-Thoughts or Graph-of-Thoughts, to assist models in navigating complex reasoning scenarios.
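The first direction above, puzzles with several valid solutions or none at all, can be illustrated by counting the consistent assignments of a puzzle. The following is a minimal sketch under our own assumptions: the helper names and the three toy puzzles are hypothetical and do not come from the benchmark.

```python
from itertools import product
from typing import Callable, Dict

# A statement is a predicate over an assignment (True = knight, False = knave).
Statement = Callable[[Dict[str, bool]], bool]


def count_solutions(statements: Dict[str, Statement]) -> int:
    """Count assignments where each speaker's role matches their claim's truth."""
    names = sorted(statements)
    count = 0
    for values in product([True, False], repeat=len(names)):
        world = dict(zip(names, values))
        if all(world[name] == statements[name](world) for name in names):
            count += 1
    return count


def classify(statements: Dict[str, Statement]) -> str:
    """Label a puzzle by how many consistent assignments it has."""
    n = count_solutions(statements)
    if n == 0:
        return "no solution"
    return "unique solution" if n == 1 else "multiple solutions"


# Hypothetical toy puzzles:
paradox = {"A": lambda w: not w["A"]}       # A says: "I am a knave."
ambiguous = {"A": lambda w: w["A"]}         # A says: "I am a knight."
unique = {
    "A": lambda w: not w["B"],              # A says: "B is a knave."
    "B": lambda w: w["A"] and w["B"],       # B says: "A and I are both knights."
}

for name, puzzle in [("paradox", paradox), ("ambiguous", ambiguous), ("unique", unique)]:
    print(name, "->", classify(puzzle))
```

A benchmark extended in this way would require a model to recognize when a puzzle is contradictory or underdetermined, not just to produce one assignment.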

Explore the full paper, published at EMNLP 2024, to see how prominent LLMs performed and where they fell short! The quest for AI that truly understands logic continues.

Liar, Liar, Logical Mire: A Benchmark for Suppositional Reasoning in Large Language Models.

EMNLP 2024 - Conference on Empirical Methods in Natural Language Processing. Miami, FL, USA, Nov 12-16, 2024.

