13.06.2025

Volker Tresp and His Team Win ReGenAI @CVPR 2025 Best Paper Award

Honored for Work on New Jailbreak Vulnerability in T2I Diffusion Models

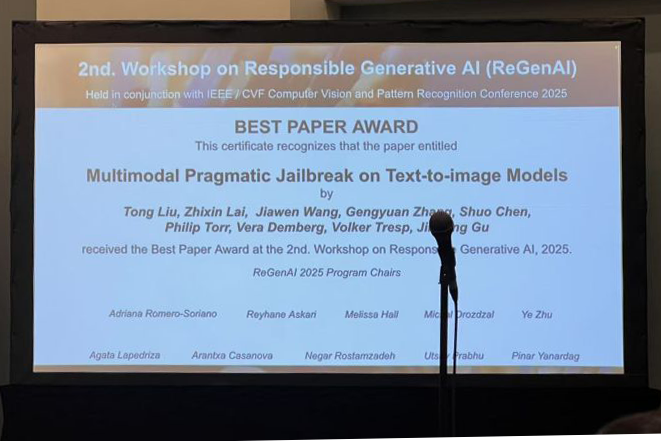

MCML Junior Members Tong Liu, Gengyuan Zhang, and Shuo Chen, MCML PI Volker Tresp, and their co-authors have received the Best Paper Award at the Second Workshop on Responsible Generative AI (ReGenAI) at CVPR 2025 for their paper “Multimodal Pragmatic Jailbreak on Text-to-image Models”.

The authors show that text-to-image models can easily be exploited to produce unsafe content through cross‑modal interactions between text and images that each appear safe on their own, a vulnerability that current safety filters fail to address effectively.

Congratulations from us!

Check out the full paper:

Multimodal Pragmatic Jailbreak on Text-to-image Models.

ACL 2025 - 63rd Annual Meeting of the Association for Computational Linguistics. Vienna, Austria, Jul 27-Aug 01, 2025.
