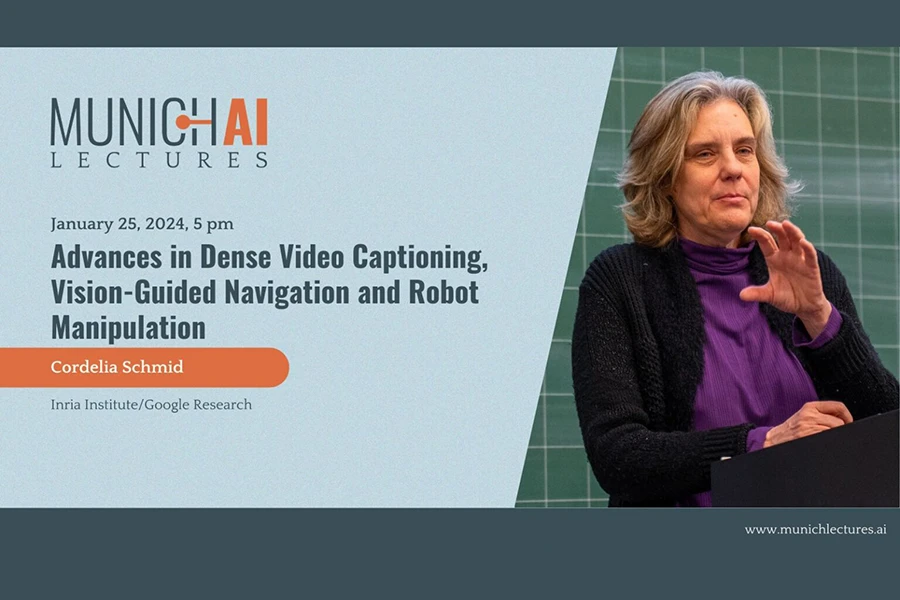

Munich AI Lectures

Advances in Dense Video Captioning, Vision-Guided Navigation and Robot Manipulation

Cordelia Schmid, Inria Institute / Google Research

25.01.2024

5:00 pm - 7:00 pm

TUM Campus Munich, Room 0790, Arcisstraße 21, 80333 München

On behalf of our partners at the Bavarian AI network baiosphere, the MCML cordially invites you to the Munich AI Lectures.

Cordelia Schmid is a pioneer in AI research. She developed image recognition methods that enable computers to semantically interpret image and video content. Her computer vision algorithms are key to the development of robotic assistants that will be able to recognize their surroundings and respond to spoken commands. Her work has been honored with major awards, most recently the Körber Prize, which is endowed with one million euros.

In this talk, she presents Vid2Seq, a model for dense video captioning that predicts temporal boundaries and textual descriptions from video and speech, and a retrieval-augmented visual language model that achieves state-of-the-art results in video question answering and image captioning. She also introduces the History Aware Multimodal Transformer (HAMT) for vision-guided navigation and robot manipulation, demonstrating its superior performance on benchmarks and in real-world applications with the Tiago robot and UR5 arm.

Organized by:

baiosphere

Bavarian Academy of Sciences and Humanities

Helmholtz Munich

LMU Munich

TUM

AI-HUB LMU

ELLIS Munich Unit

Konrad Zuse School of Excellence in Reliable AI

MCML

Munich Data Science Institute TUM

Munich Institute of Robotics and Machine Intelligence TUM