16.10.2025

SIC: Making AI Image Classification Understandable

MCML Research Insight - With Tom Nuno Wolf, Emre Kavak, Fabian Bongratz, and Christian Wachinger

Deep learning models are appearing more and more in everyday life, even assisting clinicians in their diagnoses. However, their black-box nature makes it hard to understand their errors and decision-making, which is arguably as important as high accuracy in decision-critical tasks. Previous research has typically focused either on designing models that reason intuitively by example or on providing theoretically grounded but rather unintuitive pixel-level explanations.

Successful human-AI collaboration in medicine requires trust and clarity. To replace confusing AI tools that add to clinicians’ cognitive load, MCML Junior Members Tom Nuno Wolf, Emre Kavak, and Fabian Bongratz, together with MCML PI Christian Wachinger, created SIC for their collaborators at TUM Klinikum Rechts der Isar. SIC is a fully transparent classifier designed to make AI-assisted image classification both intuitive and provably reliable.

«Currently, clinicians are severely overworked. Hence, AI-assisting tools must reduce the workload rather than introducing additional cognitive load.»

Tom Nuno Wolf et al.

MCML Junior Members

The Best of Both Worlds: Combining Intuition With Rigor

Imagine a radiologist identifying a condition. They instinctively compare the scan to thousands of past cases they’ve seen, a process known as case-based reasoning.

SIC builds on the same intuition by combining a similarity-based classification mechanism with B-cos neural networks, which provide faithful pixel-level contribution maps. During training, SIC learns a set of class-representative latent vectors that act as “textbook” examples (Support Samples). A test sample is then classified by computing the similarity scores between its latent vector and the latent vectors of the support samples and summing these scores.
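To make the classification step concrete, here is a minimal sketch of similarity-based classification with per-class support vectors. It is not the authors' implementation: the similarity function (cosine similarity here), the helper names `similarity` and `classify`, the class labels, and the toy 3-dimensional latents are all hypothetical. It only shows the idea described above: score each class by summing the similarities between the test latent and that class's support samples, and keep the individual scores as inspectable evidence.

```python
import math


def similarity(u, v):
    """Cosine similarity; an ASSUMED stand-in for SIC's actual similarity function."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # avoid division by zero for all-zero vectors
    return dot / (norm_u * norm_v)


def classify(test_latent, support_samples):
    """Classify a latent vector by summing its similarities to each class's support samples.

    support_samples maps a class label to a list of latent vectors
    (hypothetical stand-ins for the learned Support Samples).
    Returns the predicted label, the per-class scores, and the
    per-support-sample evidence so the decision can be inspected.
    """
    evidence = {
        label: [similarity(test_latent, s) for s in vectors]
        for label, vectors in support_samples.items()
    }
    class_scores = {label: sum(ev) for label, ev in evidence.items()}
    predicted = max(class_scores, key=class_scores.get)
    return predicted, class_scores, evidence


# Toy example with two hypothetical classes and 3-dimensional latents.
supports = {
    "class_a": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
    "class_b": [[0.0, 1.0, 0.0], [0.0, 0.8, 0.2]],
}
label, scores, evidence = classify([0.1, 0.9, 0.1], supports)
print(label)  # class_b
```

Returning the per-sample evidence alongside the prediction mirrors the kind of explanation described in the post: a user can see not only which class won, but which support samples contributed most to that decision.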

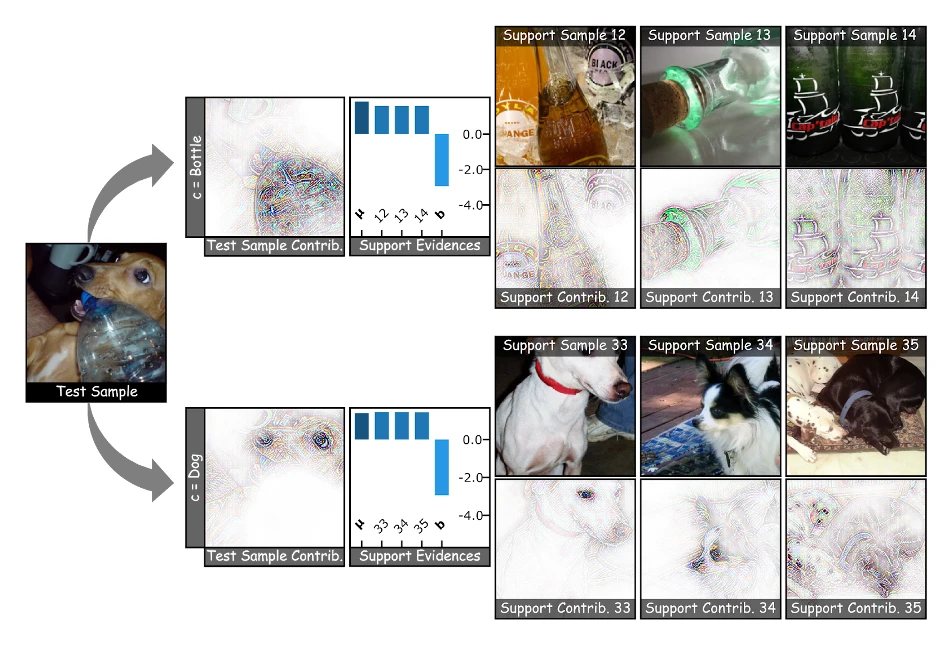

As shown in Figure 1, this yields multi-faceted explanations comprising the predicted class’s support samples and their contribution maps, their numerical evidence, and the test sample’s contribution maps.

©Tom Nuno Wolf et al.

Figure 1: The multi-faceted explanation provided by SIC. For a given test image, SIC provides a set of learned Support Samples for each class. The Contribution Maps are generated via the B-cos encoder, faithfully highlighting the pixels that contribute to the similarity score between the test sample and the latent vectors of the support samples. The Evidence score quantifies this similarity, showing the influence of each Support Sample on the final classification. This allows a user to interrogate the model's decision by examining which Support Samples were most influential and what specific image features drove that influence.
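The caption credits the B-cos encoder with faithful contribution maps. The sketch below illustrates the general mechanism from the B-cos networks literature, not SIC's own code: each B-cos unit computes |cos(x, ŵ)|^(B-1) · ŵᵀx with ŵ = w / ||w||, so, given its input-dependent ("dynamic") weights, the network acts as a linear map of its input. Multiplying the effective weights element-wise with the input then gives per-feature contributions that sum exactly to the output, such as a similarity score. The two-layer toy network, its weights, B = 2, and all function names are hypothetical; real B-cos models operate on image tensors and include further components omitted here.

```python
import math


def bcos_dynamic_weight(x, w, b=2.0):
    """Input-dependent weight of one B-cos unit: |cos(x, w)|**(b - 1) * w_hat.

    The unit's output is dot(dynamic_weight, x).
    """
    w_norm = math.sqrt(sum(wi * wi for wi in w))
    x_norm = math.sqrt(sum(xi * xi for xi in x))
    w_hat = [wi / w_norm for wi in w]
    if x_norm == 0.0:
        return [0.0 for _ in w]  # zero input: output and contributions are zero
    cos = sum(a * c for a, c in zip(w_hat, x)) / x_norm
    scale = abs(cos) ** (b - 1)
    return [scale * wi for wi in w_hat]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * c for a, c in zip(row, v)) for row in m]


def contributions(x, layer1, layer2_unit, b=2.0):
    """Output of a toy two-layer B-cos network and per-input contributions."""
    W1 = [bcos_dynamic_weight(x, w, b) for w in layer1]  # hidden = W1 @ x
    hidden = matvec(W1, x)
    w2 = bcos_dynamic_weight(hidden, layer2_unit, b)      # y = w2 @ hidden
    # Effective linear map from input to output: w_eff = w2 @ W1.
    w_eff = [sum(w2[j] * W1[j][i] for j in range(len(W1))) for i in range(len(x))]
    contrib = [w_eff[i] * x[i] for i in range(len(x))]
    y = sum(a * c for a, c in zip(w2, hidden))
    return y, contrib


x = [0.5, -1.0, 2.0]
y, contrib = contributions(x, [[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [1.0, 0.5])
print(y, sum(contrib))  # the two values agree up to floating-point error
```

Because the contributions sum to the output by construction, they account for the whole score rather than approximating it, which is one reason such maps are described as faithful.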

«In addition to reducing cognitive load, we believe that heuristical explanations should be abstained from in the medical domain, as the outcome of false information is potentially life-threatening. We balanced these opposed interests in our work, which we are enthusiastic to evaluate in a medical user study next.»

Tom Nuno Wolf et al.

MCML Junior Members

Findings and Implications for Medical Image Analysis

It is often argued that interpretability comes at the cost of model performance. However, researchers in the field have repeatedly provided evidence that this may be a misconception. The authors showed that SIC achieves comparable performance across a range of tasks, from fine-grained to multi-label to medical image classification. Moreover, a theoretical analysis shows that SIC's explanations satisfy established explanation axioms, and this is reflected in their empirical evaluation with the synthetic FunnyBirds framework. These results are what the authors were looking for in interpretable deep learning methods: a transparent classifier that provides theoretically grounded and easily accessible explanations for deployment in clinical settings.

Interested in Exploring Further?

Check out the code and the full paper accepted at the A*-conference ICCV 2025, one of the most prestigious conferences in the field of computer vision.

SIC: Similarity-Based Interpretable Image Classification with Neural Networks.

ICCV 2025 - IEEE/CVF International Conference on Computer Vision. Honolulu, Hawai’i, Oct 19-23, 2025. To be published. Preprint available.