07.08.2025

Precise and Subject-Specific Attribute Control in AI Image Generation

MCML Research Insight - With Felix Krause, Vincent Tao Hu, and Björn Ommer

Text-to-image (T2I) models like Stable Diffusion have become masters at turning prompts like “a happy man and a red car” into vivid, detailed images. But what if you want the man to look just a little older, or the car to appear slightly more luxurious without changing anything else? Until now, that level of subtle, subject-specific control was surprisingly hard.

A new method led by first-author Stefan Andreas Baumann, developed in collaboration with MCML Junior Member Felix Krause, co-authors Michael Neumayr, Nick Stracke, and Melvin Sevi, as well as MCML Junior Member Vincent Tao Hu, and MCML PI Björn Ommer changes this by giving us smooth, precise dials for individual attributes, like age, mood, or color, applied to specific subjects in an image.

«Currently, a fundamental gap exists: no method provides fine-grained modulation and subject-specific localization simultaneously.»

Stefan Andreas Baumann et al.

The Insight: Words as Vectors You Can Gently Push

The models rely on CLIP, which encoder turns each word in a prompt into a vector embedding - a numerical representation of meaning in a high-dimensional embedding space. The authors discovered that within this space, you can identify semantic directions corresponding to attributes like older, happier, or more expensive.

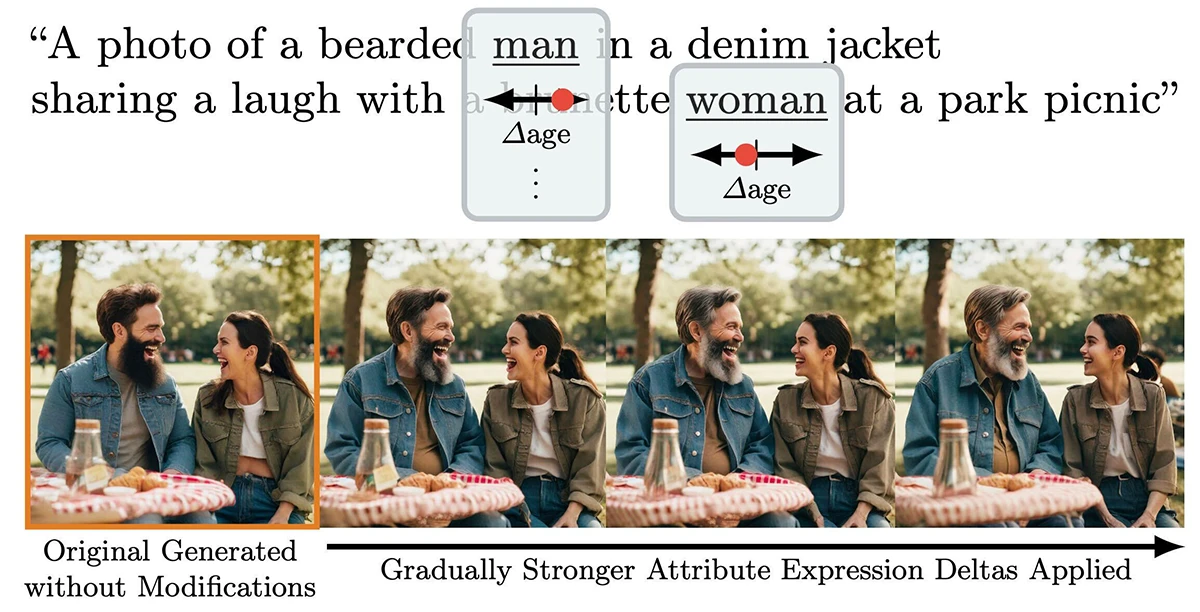

Want to make just the man older, not the woman? You can shift the embedding of the word “man” slightly along the “age” direction in the embedding space. The result: only the changes in the generated image - smoothly and precisely (see Figure 1).

©Baumann et al.

Figure 1: The authors augment the prompt input of image generation models with fine-grained control of attribute expression in generated images (unmodified images are marked in green) in a subject-specific manner without additional cost during generation. Previous methods only allow either fine-grained expression control or fine-grained localization when starting from the image generated from a basic prompt.

Two Ways to Find These Directions

- Semantic Prompt Differences: The team compares prompts like “man” vs “old man” to find the direction that changes the embedding with no training required.

- Learning from the Model Itself: For more robust control, they generate images using slightly altered prompts, look at how the model’s internal noise changes, and backtrack to find the best direction that causes just that effect - like reverse-engineering the model’s thought process.

«Since we only modify the tokenwise CLIP text embedding along pre-identified directions, we enable more fine-grained manipulation at no additional cost in the generation process.»

Stefan Andreas Baumann et al.

Why It’s a Big Deal

This method doesn’t need to retrain or modify the model at all. It plugs right into existing T2I systems, adds zero overhead during generation, and works even on real photos.

It gives creators intuitive, fine-grained control over how things appear and not just what appears. That means more expressive storytelling, more precise edits, and better alignment with human intent.

Interested in Exploring Further?

Check out the full paper presented at the prestigious A* conference CVPR 2025 - IEEE/CVF Conference on Computer Vision and Pattern Recognition, one of the highest-ranked AI/ML conferences.

Continuous, Subject-Specific Attribute Control in T2I Models by Identifying Semantic Directions.

CVPR 2025 - IEEE/CVF Conference on Computer Vision and Pattern Recognition. Nashville, TN, USA, Jun 11-15, 2025. DOI GitHub

Explore an overview of the method, visual examples, and detailed explanations at the project website or try the method interactively in your browser.

Share Your Research!

Get in touch with us!

Are you an MCML Junior Member and interested in showcasing your research on our blog?

We’re happy to feature your work—get in touch with us to present your paper.

Related

24.02.2026

Cosmology: Measuring the Expansion of the Universe With Cosmic Fireworks

Daniel Gruen leads LMU’s campaign on rare SN Winny to refine the Hubble constant and address the Hubble tension in cosmology.

19.02.2026

COSMOS – Teaching Vision-Language Models to Look Beyond the Obvious

Presented at CVPR 2025, COSMOS shows how smarter training helps VLMs learn from details and context, improving AI understanding without larger models.

05.02.2026

Daniel Rückert and Fabian Theis Awarded Google.org AI for Science Grant

Daniel Rueckert and Fabian Theis receive Google.org AI funding to develop multiscale AI models for biomedical disease simulation.