12.06.2025

Why Causal Reasoning Is Crucial for Reliable AI Decisions

MCML Research Insight - With Christoph Kern, Unai Fischer-Abaigar, Jonas Schweisthal, Dennis Frauen, Stefan Feuerriegel and Frauke Kreuter

As algorithms increasingly make decisions that impact our lives, from managing city traffic to recommending hospital treatments, one question becomes urgent: Can we trust them?

«Causal reasoning offers a powerful but also necessary foundation for improving the safety and reliability of ADM»

Christoph Kern et al.

MCML Associate

In a recent Comment published in Nature Computational Science, our Associate Christoph Kern and our Junior Members Unai Fischer-Abaigar, Jonas Schweisthal, and Dennis Frauen argue, alongside our PIs Stefan Feuerriegel and Frauke Kreuter and collaborators Rayid Ghani and Mihaela van der Schaar, that algorithmic decision-making (ADM) systems must be grounded in causal reasoning to be reliable. The reason is simple: ADM systems don’t just predict outcomes, they change them. If we want our models to be meaningful in the real world, they must model cause-and-effect relationships.

That’s because every decision, whether it’s selecting a treatment, adjusting traffic lights, or targeting social policies, actively influences the outcome. This makes decision-making fundamentally different from passive prediction. Crucially, we can never observe the outcome of a decision that was not taken, so we must reason about counterfactuals: what would have happened if we had chosen differently?

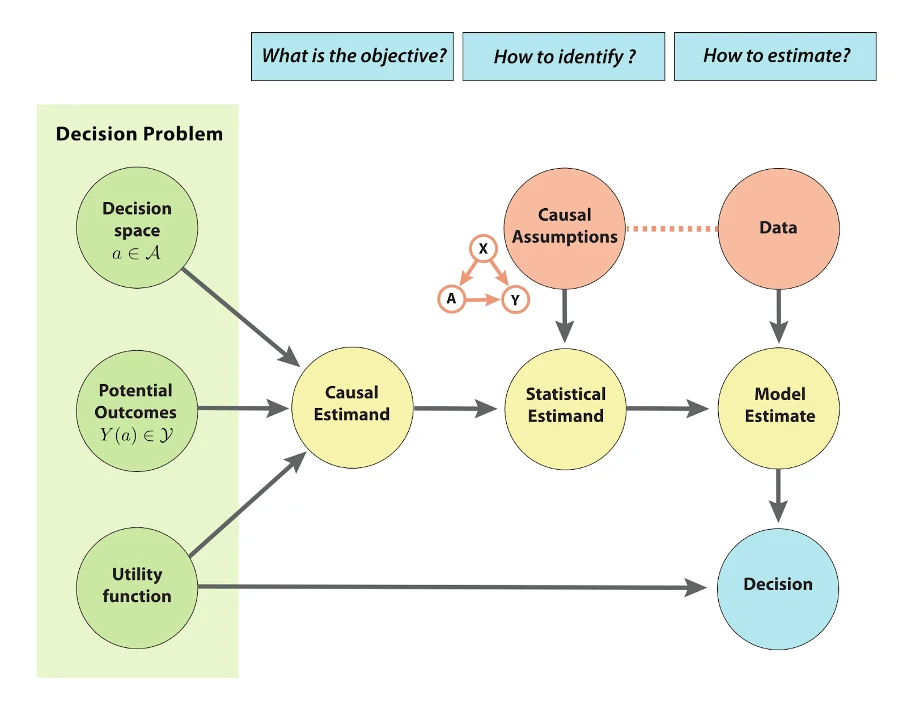

To make such reasoning valid, explicit causal assumptions are necessary. These assumptions allow us to link the causal estimand (what we ultimately want to know) to the statistical estimand (what we can actually estimate from data). Without these links, even the most accurate-looking model can be misleading.
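As a textbook illustration of such a link (a standard example, not taken from the Comment itself), consider a binary decision $T$, an outcome $Y$ with potential outcomes $Y(1)$ and $Y(0)$, and observed covariates $X$. The symbols are assumptions introduced here for illustration. If we assume consistency, no unmeasured confounding given $X$, and positivity, the average effect of the decision can be written purely in terms of observable quantities:

```latex
\underbrace{\mathbb{E}\big[\,Y(1) - Y(0)\,\big]}_{\text{causal estimand}}
\;=\;
\underbrace{\mathbb{E}_{X}\big[\,\mathbb{E}[Y \mid T = 1, X] - \mathbb{E}[Y \mid T = 0, X]\,\big]}_{\text{statistical estimand}}
```

The left-hand side involves outcomes that are never observed together for the same unit. The right-hand side involves only conditional means that can be estimated from data. The equality holds only under the stated assumptions.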

The authors highlight two key challenges here:

- Identifiability: Can the causal estimand (the true effect of a decision) be expressed using observable data?

- Estimatability: Can we compute the statistical estimate reliably, given finite data?
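The gap between a causal and a purely associational quantity can be sketched with a small simulation. The example below is a minimal illustration under assumed conditions, not the authors' method: the data-generating process, the variable names (`x` as a confounder such as case severity, `t` as the decision, `y` as the outcome), and the true effect of 1.0 are all hypothetical. It compares a naive difference in means with a stratified (backdoor-adjusted) estimate.

```python
import numpy as np


def simulate(n=200_000, seed=0):
    """Simulate confounded decisions. All parameters are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    # Binary confounder (e.g. case severity) that drives both decision and outcome.
    x = rng.binomial(1, 0.5, size=n)
    # Severe cases are treated far more often than mild ones.
    p_treat = np.where(x == 1, 0.8, 0.2)
    t = rng.binomial(1, p_treat)
    # By construction, the true effect of the decision on the outcome is +1.0.
    y = 1.0 * t - 2.0 * x + rng.normal(0.0, 1.0, size=n)
    return x, t, y


def naive_difference(t, y):
    """Difference in mean outcomes between treated and untreated units."""
    return y[t == 1].mean() - y[t == 0].mean()


def backdoor_adjusted(x, t, y):
    """Average the within-stratum differences, weighted by stratum size."""
    effect = 0.0
    for value in np.unique(x):
        stratum = x == value
        treated_mean = y[stratum & (t == 1)].mean()
        control_mean = y[stratum & (t == 0)].mean()
        effect += (treated_mean - control_mean) * stratum.mean()
    return effect


x, t, y = simulate()
naive = naive_difference(t, y)
adjusted = backdoor_adjusted(x, t, y)
print(f"naive difference in means: {naive:.3f}")
print(f"backdoor-adjusted effect:  {adjusted:.3f}")
```

In this assumed setup the naive comparison even gets the sign wrong, because treated units are disproportionately severe cases, while the adjusted estimate recovers a value close to the true effect. The adjustment is only valid because the confounder is observed. That is an identifiability assumption, not something the data can confirm on its own.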

To clarify how the decision problem maps to estimable quantities, given causal assumptions and observable data, the authors propose the following diagram:

©Kern et al.

First published in Nature Computational Science 5, pages 356–360 (2025) by Springer Nature.

This figure starts with the decision-making objective and the available interventions or treatments, and illustrates how these decisions connect to quantities that can be estimated from data. First, the causal estimand is defined (the effect we want to know). It is then linked to the statistical estimand (what we can estimate from data). Finally, the model produces an estimate, the result the algorithm computes. This path only works if we make our causal assumptions explicit. The figure is a powerful reminder: without a clear causal framework, data-driven models can produce misleading or unreliable decisions.

The Comment further tackles practical issues such as distribution shifts, uncertainty, performativity and benchmarking, exploring how algorithmic decisions can shape future data and outcomes.

Rather than an optional feature, causal reasoning is a core requirement for creating ADM systems that earn our trust and meet real-world standards.

Read the full Comment in Nature Computational Science for an in-depth look at the future of reliable algorithmic decision-making, and to learn why causality must lie at its core:

Algorithms for reliable decision-making need causal reasoning.

Nature Computational Science 5, May 2025.

